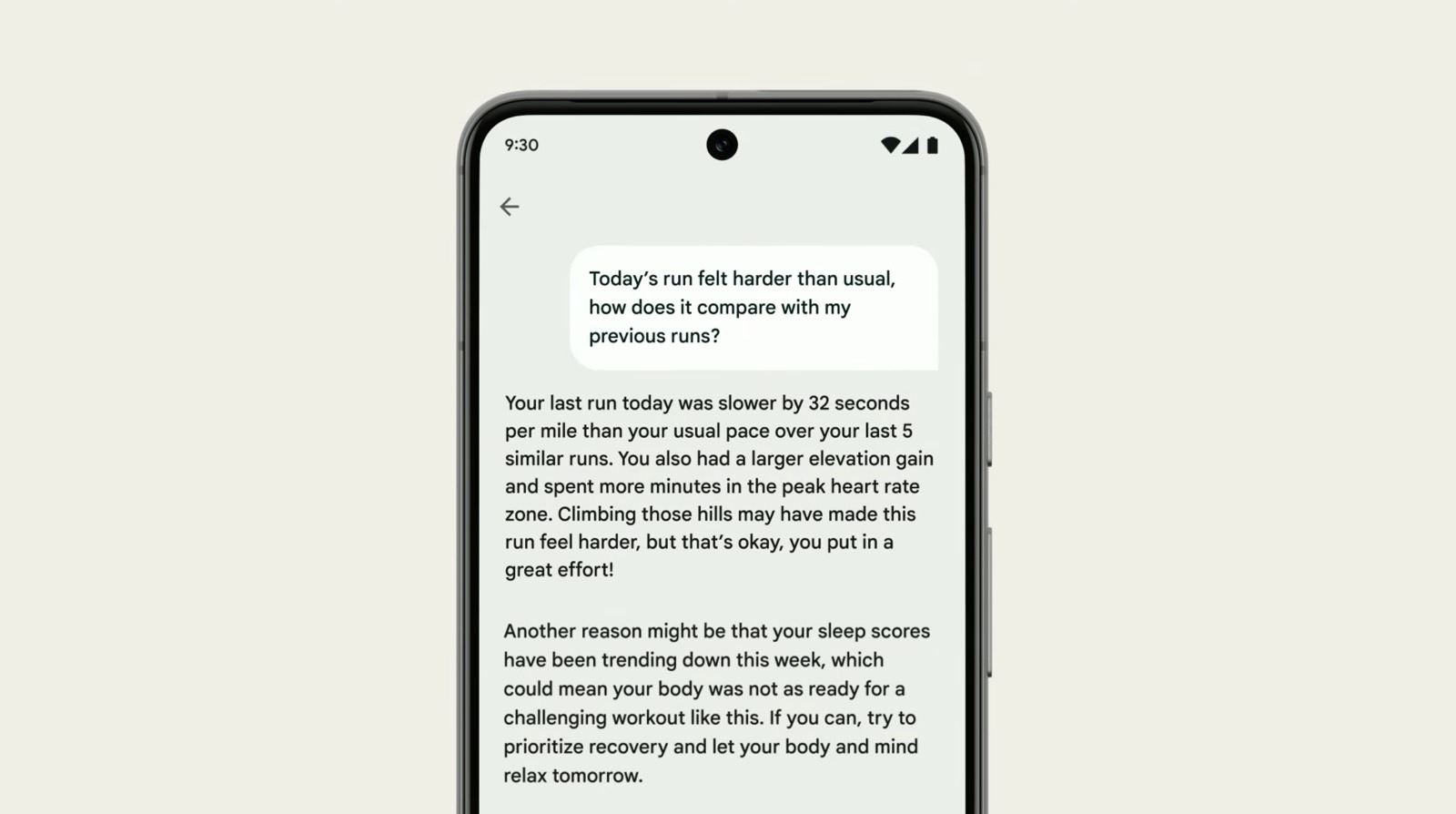

Google’s Pixel 8 and Pixel 8 Pro are up for preorder, and they come with great features you will appreciate if you’re looking to upgrade to the latest and greatest Android phones on the market. The Pixel 8 phones feature plenty of awesome AI features as well, including one that I, a longtime iPhone user, already envy. The Pixel’s Fitbit app will get a big generative AI upgrade soon, turning it into a coach that can help you understand your training performance.

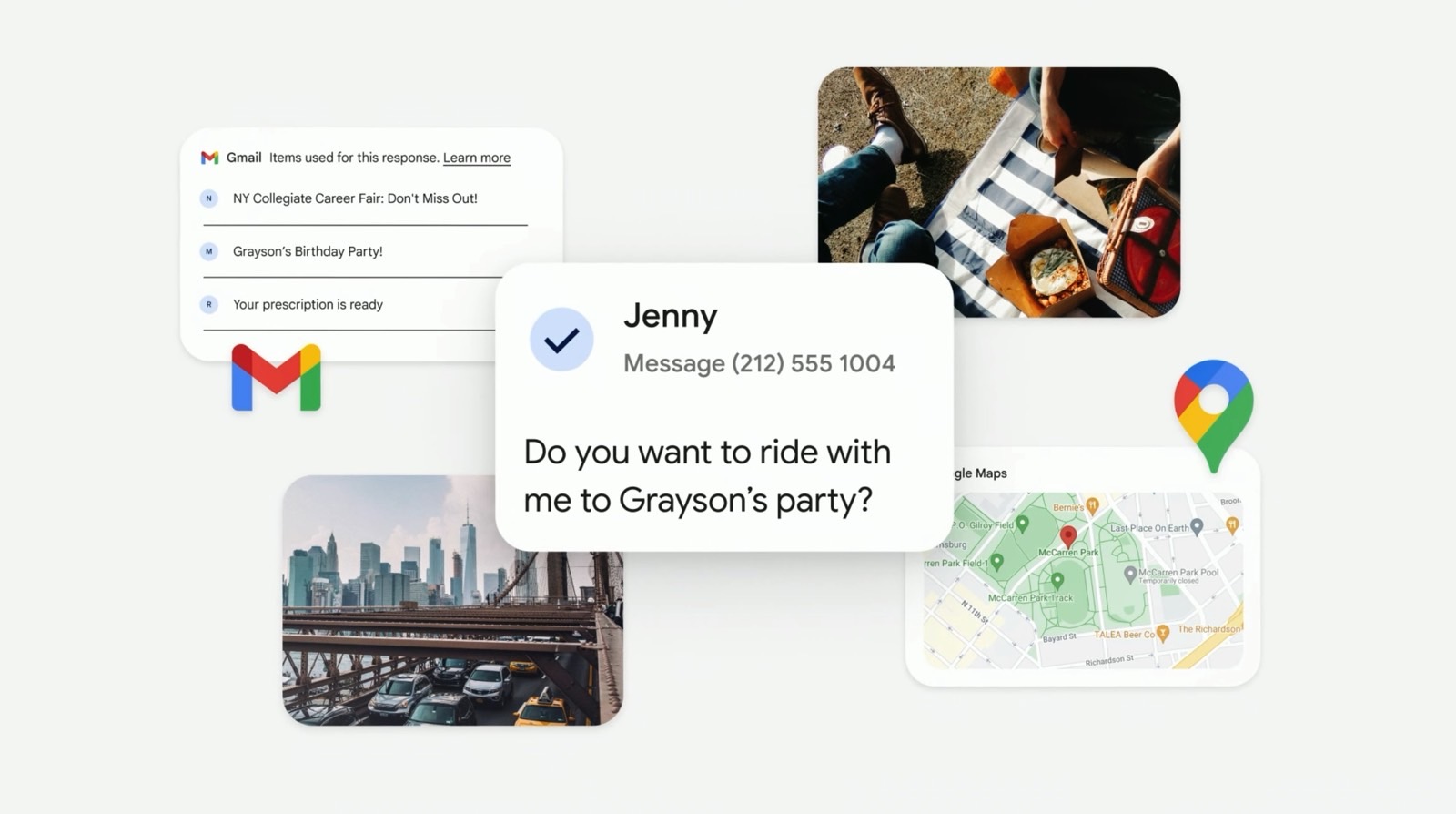

On top of that, Google unveiled an amazing Assistant with Bard feature that should further help the company build a personal AI product. Google Assistant can now access personal information from other apps like Gmail and Maps and answer complex queries that involve personal information from those apps.

Google’s event might have been about new Pixel hardware running the company’s latest AI products, but it was also very much about artificial intelligence itself. According to the YouTube transcript, Google said “AI” 47 times during the event. By comparison, “Pixel 8” came up 64 times. But Google never mentioned “privacy” during the hour-long presentation despite unveiling these complex AI features that will require access to lots of user data.

I don’t want to trade my personal data for a personal AI assistant

When looking at the personal AI features coming to the Fitbit app on Pixel devices, I explained that Google never addressed the privacy aspect. The generative AI product accesses plenty of personal health and training data to provide insight into your progress.

In the example Google used, the app looked at a runner’s exercise history and recent sleep performance. Also, it might have had access to location data. It certainly used elevation information to assess the most recent run.

I said at the time that Google never explained what it does with the information. The Fitbit feature might be experimental, and Google will start testing it early next year. But users need to know what information reaches Google’s servers with those prompts and whether that information helps train the AI.

The best scenario would be having the personal AI run on-device so that data never has to leave the phone. I wouldn’t want to trade personal information to get a free personal AI.

But we don’t know whether that’s the case because Google never mentioned user privacy during that segment. This prompted me to check how often Google said “privacy” during the event, and I was surprised not to find a single mention.

That’s strange, considering that Google started to make a big deal about privacy a few years ago in what was a direct answer to Apple. The iPhone maker turned privacy into a big iPhone feature, setting itself apart from Google, Facebook, and other companies that deal in user data.

Also, remember that privacy is a big issue for generative AI products like ChatGPT and Google Bard. Stricter privacy rules in Europe prevented Google from launching the early version of Bard in the EU when the generative AI got its big international rollout in May.

Assistant with Bard will need careful privacy considerations

Google’s Assistant with Bard demo warrants the same privacy questions as the Fitbit one. In the examples Google uses, the generative AI can read one’s Gmail to surface information. It also has access to Maps and Photos data. The same questions apply. What happens with that data, and where is it processed? Also, will any of that personal data be used to better target ads?

It’s very likely Google will not use personal data for ads or AI training. But we need to be certain of that, and Google doesn’t explicitly address user privacy during the presentation.

I went one step further and looked at Google’s blog announcements for the event. Google has a collection of 10 releases for the Made By Google event. Only the Assistant with Bard blog post includes a privacy mention. But even that one isn’t good enough:

On Android devices, we’re working to build a more contextually helpful experience right on your phone. For example, say you just took a photo of your cute puppy you’d like to post to social media. Simply float the Assistant with Bard overlay on top of your photo and ask it to write a social post for you. Assistant with Bard will use the image as a visual cue, understand the context and help with what you need. This conversational overlay is a completely new way to interact with your phone. And just like both Bard and Assistant, it’ll be built with your privacy in mind — ensuring that you can choose your individual privacy settings.

The privacy mention does little to explain what happens to the personal data Google uses to give you personal AI features. Such explanations are all the more important, considering that Google doesn’t have a perfect track record at protecting user privacy.

Android 14 and AppleGPT

I will point out that Google does a much better job addressing privacy features in its separate Android 14 launch announcements. Android is the environment where all the AI features happen on Pixel devices, so it’s great to see Google’s commitment in action.

However, we still need Google to have clear, explicit privacy rules about what personal AI will mean for personal data.

I would have the same questions with an AppleGPT product that would power similar personal AI features for Siri. However, I would be more likely to trust Apple, given the work the company has done to promote user privacy and security.

On that note, I did go back to the iPhone 15 Pro event to see how many times Apple mentioned “privacy.” It happened twice: once in connection with the Neural Engine built into the A-series chips and once when addressing Find My capabilities.

How many times did Apple say “AI”? That would be zero. Apple did use “machine learning” six times as an alternative to “AI.”