Many people worry about generative AI products like ChatGPT, both online and in real life. It’s not just regular ChatGPT users who wonder whether we’re in the early days of AI-generated human extinction. Many of the brilliant minds behind some of the coolest AI products in the wild already warn that regulation is needed.

The latest warning comes in the form of a short 22-word statement whose signatories include OpenAI CEO Sam Altman. As most are aware, that’s the company that created ChatGPT and released it into the wild:

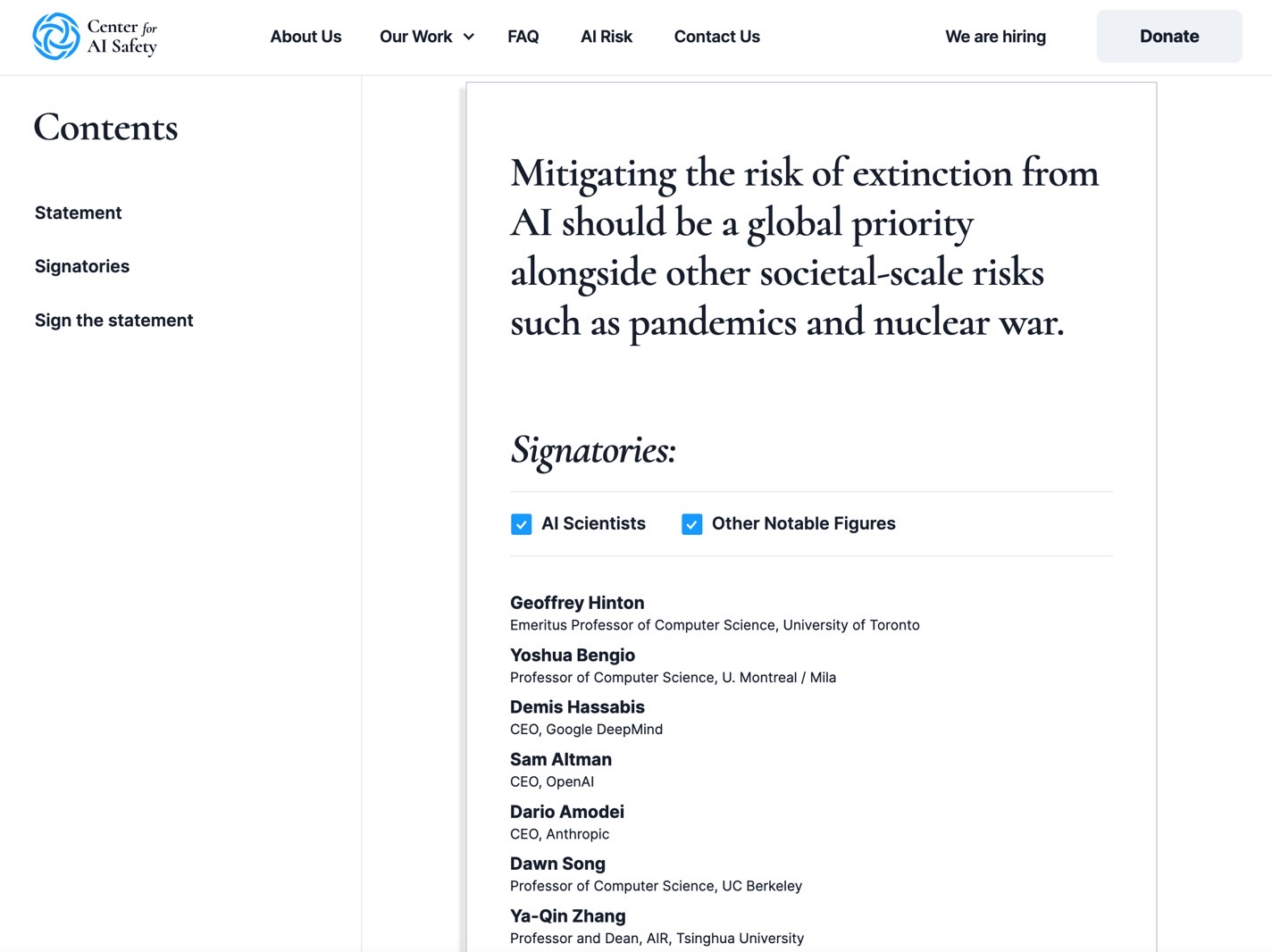

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

That sounds great in theory. In practice, I don’t think we can put the ChatGPT AI genie back in the bottle now that it’s out, no matter how many words the world’s most brilliant minds use to issue warnings about the end-of-days. We can’t stop the development of such technologies. And yes, AI will probably reach a point where we won’t be able to control it. But that doesn’t have to lead to our extinction.

The 22-word statement above is available on the website of the Center for AI Safety, a San Francisco-based non-profit. Anyone can put their name on that list, be it an AI researcher or a notable figure. The 22-word AI statement will make the rounds. You can expect it to pop up in news bulletins and references around the world.

You’ll find Geoffrey Hinton, the Godfather of AI, on the list of signatories. He just quit Google to talk about the dangers of ChatGPT and similar products freely and openly. Google DeepMind CEO Demis Hassabis is also on the list.

Anyone who is anyone in AI research will want to sign this grim warning. You’ll want to have your name in there even though your research brought us to this moment, where we worry that a smarter version of ChatGPT might lead to extinction. The same people who issue these warnings are currently working on even faster, smarter, and more efficient AI variants that will be available on our devices in the coming years.

It’ll be a great achievement to say that you supported this 22-word statement when it was first issued.

But the ugly truth is that we can’t stop AI research and development. Nobody will stop making smarter ChatGPT products. The competition will drive that innovation. If OpenAI doesn’t develop a model better than GPT-4, Google will, or someone else entirely. That will hold even after AI regulation takes effect, which will certainly happen.

Take OpenAI, which threatened to pull ChatGPT out of Europe if the local AI laws prove too strict. Or Google’s tacit admission that bringing Bard to the EU is impossible given the region’s tech regulation. Google isn’t in a place where it can comply with strict EU privacy rules for Bard and other AI products.

But say that regulators do take this 22-word warning at face value. And strong anti-AI laws start to appear. It’ll still be too late. Anyone can use one of the open-source ChatGPT-like products out there as a framework for their own private, generative AI. They could then train that AI to become the scary, villainous figure that leads to humanity’s collective demise.

We won’t know we’ve crossed that threshold until it’s too late, regardless of whether it’s a private company, the military, or a smart developer in their garage who comes up with the first AI product that’s indistinguishable from a human. Even if we were to know the exact software update that creates AGI (artificial general intelligence), we still couldn’t put a stop to it.

Then there are the governments of the world. They must be both excited and terrified about this new resource/problem. Each one must develop smarter AI so their enemies can’t beat them with AI. It might sound like sci-fi movie-inspired paranoia. But think about the atomic bomb.

After Hiroshima and Nagasaki, the world didn’t stop making nuclear bombs. If anything, research and development skyrocketed. Nobody has used an atomic bomb again since World War II, but these weapons became more sophisticated and destructive.

The AI threat is even bigger than that because anyone with a decent enough computer can create an extinction-inducing AI threat. But nobody can make a nuclear weapon in their basement. Of course, the AI threat is still just theoretical at this point, whereas the nuclear threat is very real.

I’m not scared of AI even though we’ll inevitably achieve AGI, and nothing will change my mind. It’s quite the opposite: I’m excited about what AI can deliver in its next stages. After we’ve solved reliability and privacy matters, that is. Even if that means AI will eventually transform humanity into something better… or destroy it completely.