Since releasing iOS 13 a few weeks ago, Apple has been pumping out updates at a furious pace, and with good reason: the initial incarnation of iOS 13 was a bit buggy, to say the least. Earlier today, Apple rolled out the first beta of iOS 13.2, an update that finally delivers the long-awaited Deep Fusion mode, which will enable iPhone users to take incredibly sharp and crisp photos in medium-light environments.

When Apple introduced Deep Fusion at its annual iPhone unveiling last month, Phil Schiller boasted that the feature, which relies upon the Neural Engine of Apple’s A13 Bionic processor, was akin to “computational photography mad science.” In short, Deep Fusion in medium-light environments might be almost as impressive as what Night mode can do in low-light environments.

Notably, Deep Fusion isn’t a mode that can be toggled on and off. Rather, it operates quietly in the background and only exercises its computational muscle when the lighting environment calls for it.

With the first iOS 13.2 beta having now been out for a few hours, we’re already starting to see a few examples of what the feature brings to the table. Suffice it to say, it’s impressive.

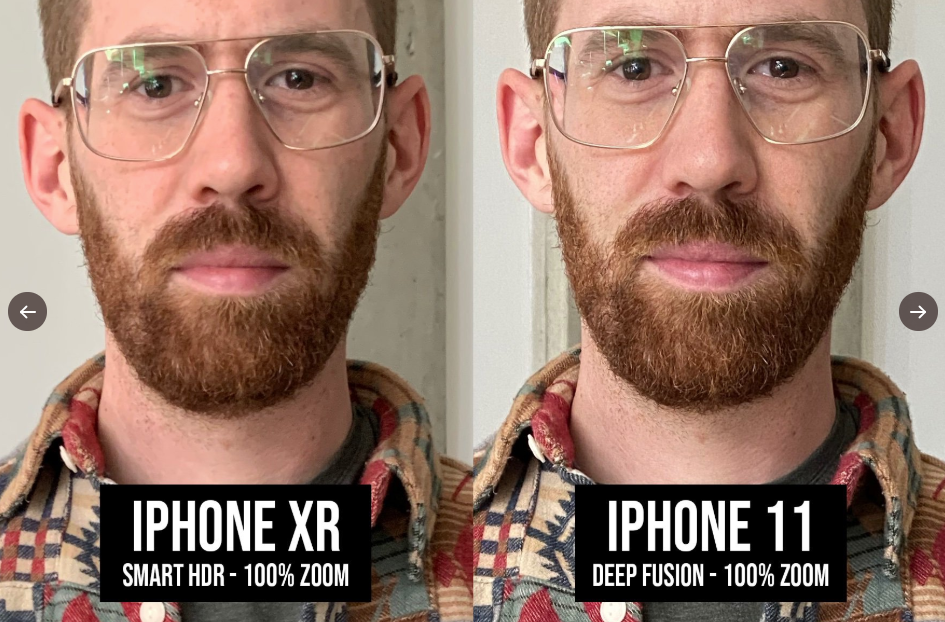

The samples above were posted to Twitter by Tyler Stalman and showcase an iPhone XR with Smart HDR vs. an iPhone 11 with Deep Fusion. While this doesn’t show what iPhone 11 photos look like before and after Deep Fusion, the photographs themselves are instructive if you’re on the fence about upgrading.

Apple boasts that Deep Fusion “uses advanced machine learning to do pixel-by-pixel processing of photos, optimizing for texture, details and noise in every part of the photo.” The photos above, though far from a representative sample, suggest that Apple’s characterization is spot-on.

Stalman’s full Twitter thread featuring even more Deep Fusion photos can be seen below:

Very first tests of #DeepFusion on the #iPhone11 pic.twitter.com/TbdhvgJFB2

— Tyler Stalman (@stalman) October 2, 2019