Reviews are an essential part of the Google Maps experience when it comes to discovering new businesses. They give Google Maps users an idea of what to expect from a place they’ve never visited and help them decide whether to try it out. But people can abuse any online review system. More precisely, they can try to manipulate it by intentionally leaving misleading negative or positive reviews. That makes users question the reviews they see online, whether they’re on Google Maps, Amazon, Yelp, or any other service that accepts reviews.

It starts with content policies

Google published a blog post this week that explains how Google Maps reviews work. Google also details the steps it takes to police the reviews.

The company explains that it employs an elaborate system that aims to keep inappropriate content from reaching the Google Maps platform. This involves machine learning that can quickly identify patterns of non-compliance. There’s also a team of human operators who provide an additional layer of scrutiny for Google Maps reviews.

Google explains in the blog post that it has strict content policies in place to ensure that Google Maps reviews are based on real-world experiences. The guidelines are also meant to “keep irrelevant and offensive comments off of Google Business Profiles.” These policies evolve in response to real-world events, like the COVID-19 pandemic:

For instance, when governments and businesses started requiring proof of COVID-19 vaccine before entering certain places, we put extra protections in place to remove Google reviews that criticize a business for its health and safety policies or for complying with a vaccine mandate.

Google turns those content policies into training material for human operators and machine learning.

How Google Maps reviews work

Once someone posts a Google Maps review, the moderation system takes over. The first line of defense consists of machine learning algorithms that can identify patterns. In turn, they can tell whether a review is genuine or whether it’s part of an attempt to abuse reviews. If it’s the latter, the review disappears before anyone can even see it.

Google offers a few examples of the signals its machine learning models examine:

The content of the review: Does it contain offensive or off-topic content?

The account that left the review: Does the Google account have any history of suspicious behavior?

The place itself: Has there been uncharacteristic activity — such as an abundance of reviews over a short period of time? Has it recently gotten attention in the news or on social media that would motivate people to leave fraudulent reviews?
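The three kinds of signals above can be illustrated with a toy sketch. This is not Google's implementation: the blog post describes trained machine learning models, while the code below uses simple hand-written rules. Every name, threshold, and term in it (`BLOCKED_TERMS`, `account_prior_violations`, `triage`, the one-hour window, and the rule that a single raised signal goes to a human) is a hypothetical assumption chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical placeholder terms; a real system would rely on trained models.
BLOCKED_TERMS = {"spamlink", "buy followers"}


@dataclass
class Review:
    text: str
    account_prior_violations: int  # hypothetical account-history signal
    posted_at: datetime


def content_signal(review: Review) -> bool:
    """Content of the review: does it contain a blocked term?"""
    text = review.text.lower()
    return any(term in text for term in BLOCKED_TERMS)


def account_signal(review: Review, max_violations: int = 2) -> bool:
    """The account: does it have a history of suspicious behavior?"""
    return review.account_prior_violations > max_violations


def place_signal(
    review: Review,
    recent_times: list[datetime],
    window: timedelta = timedelta(hours=1),
    burst_threshold: int = 20,
) -> bool:
    """The place: did it receive an unusual burst of reviews recently?"""
    cutoff = review.posted_at - window
    recent = [t for t in recent_times if cutoff <= t <= review.posted_at]
    return len(recent) >= burst_threshold


def triage(review: Review, recent_times: list[datetime]) -> str:
    """Combine the signals into a decision (assumed rule, for illustration only)."""
    hits = sum(
        [
            content_signal(review),
            account_signal(review),
            place_signal(review, recent_times),
        ]
    )
    if hits == 0:
        return "publish"
    if hits == 1:
        return "human_review"  # ambiguous case: defer to a human operator
    return "remove"
```

In this sketch, a review that trips no signal is published, one that trips several is removed before anyone sees it, and a borderline case is handed to a person, which loosely mirrors the division of labor the article describes.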

The human eye comes into play for more nuanced Google Maps review content that machines might have trouble with:

For example, sometimes the word “gay” is used as a derogatory term, and that’s not something we tolerate in Google reviews. But if we teach our machine learning models that it’s only used in hate speech, we might erroneously remove reviews that promote a gay business owner or an LGBTQ+ safe space. Our human operators regularly run quality tests and complete additional training to remove bias from the machine learning models.

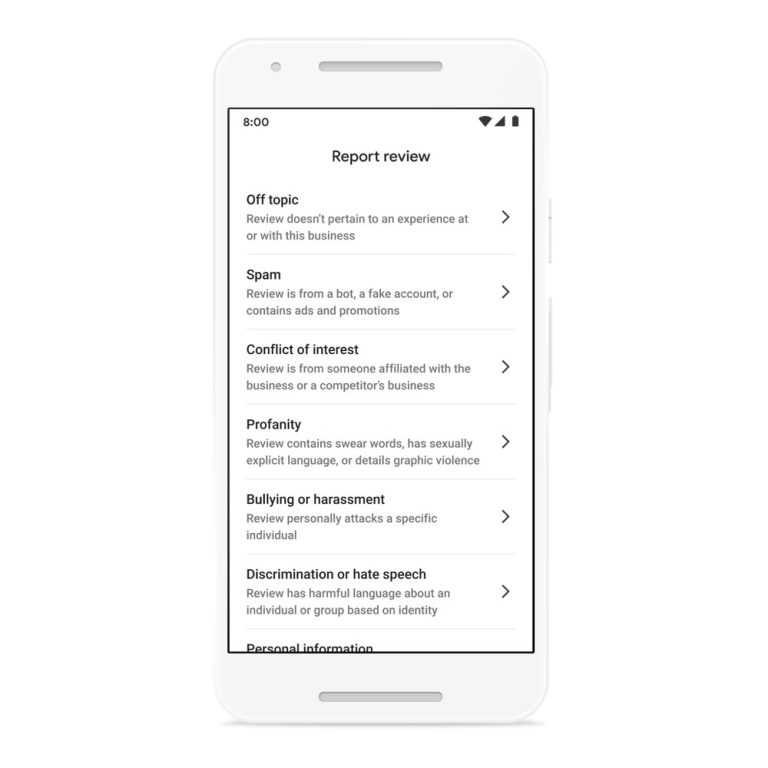

Finally, Google Maps lets users flag suspicious reviews. When that happens, the company checks the flagged content against its content policies. It can then remove the flagged reviews from the app, suspend the user account, or even pursue litigation.

Google also released a video that walks through the Google Maps review approval process.