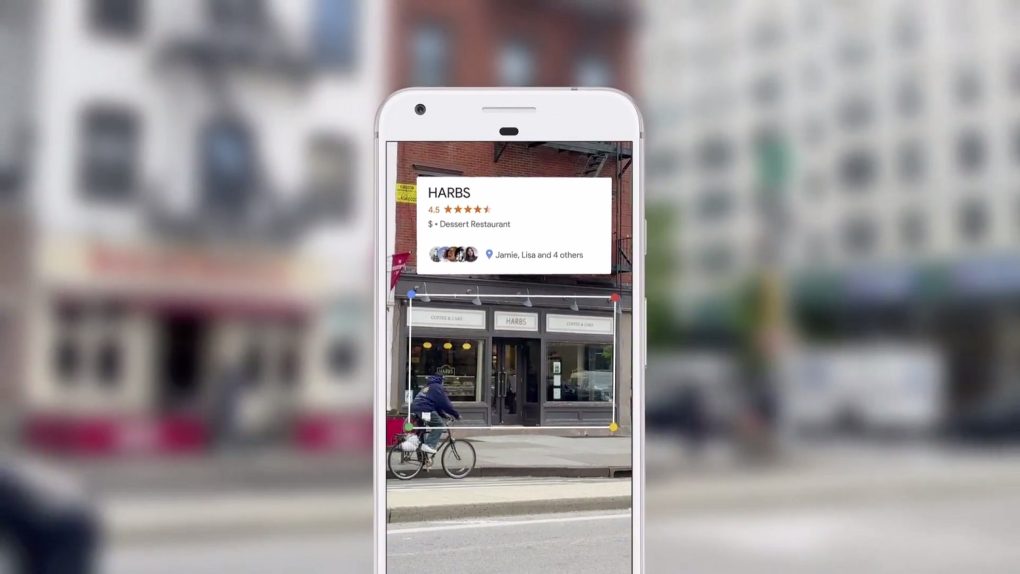

Google Lens is one of those neat features that Google keeps coming up with. Many Android users are probably familiar with it, especially Pixel owners, as the app can quickly help out when you need information about things around you and you don’t want to perform a tedious search that involves typing words into a website. Google Lens uses the phone’s camera to identify animals and plants, scan codes, search for related products, or recognize contact information including phone numbers and addresses. iPhone users can now enjoy the features as well.

Google Lens was available on iPhone before, but users were required to snap a picture for the app to perform its magic. Now, the feature works live, which means you only have to point your camera at your target for Google Lens to start doing its thing. You can still analyze photos with Lens, just like before, in case that’s something you want to do.

You’ve always wanted to know what type of 🐶 that is. With Google Lens in the Google app on iOS, now you can → https://t.co/xGQysOoSug pic.twitter.com/JG4ydIo1h3

— Google (@Google) December 10, 2018

The app will require access to the camera on iPhone, but as soon as that’s done, the live viewfinder feature of Google Lens should “just work.”

Once the camera is live, all you have to do is tap on your target, whether it’s an animal or object that you want to explore, or whether it’s text that you want to use.

Details about the selected object will appear at the bottom of the live visual search, allowing you to expand your search further. Note that Google Lens isn’t a standalone app for iPhone. The feature is built into the Google app on iOS, and the video above shows you exactly how you’ll go about launching Lens from the app.

If you already have the Google app installed on your iPhone or iPad, make sure you’re running the latest version to get the live Lens feature.