Microsoft’s latest generative AI product just blew my mind by doing something I didn’t think was possible. VASA-1 can combine a single image with one audio clip and turn it into a video of a person talking. It’s not just the lips moving to match the audio… it’s the entire face. The head movements, the changes in gaze, even the facial expressions you’d expect from someone telling a story — they’re all there.

Considering where we are with genAI, I always knew that a tool like this was imminent. After all, OpenAI has a text-to-video product that looks incredible in demos. That’s Sora, which will be available to the public until later this year. OpenAI also developed technology that uses AI to replicate the voice of someone after listening to it for only a few seconds.

It was only a matter of time before a company came up with a way to turn a portrait image or a selfie into a video of someone talking. The animated person in the video can be made to say anything you want in any voice, as long as you have an audio clip to train the AI.

I know what you are thinking, and it was the first thing that crossed my mind, too. This AI technology is incredible, but it’s also very dangerous. It invites anyone to generate misleading videos. Thankfully, Microsoft makes it clear from the get-go that VASA-1 will not become a publically-available product like ChatGPT or Copilot. That is, you won’t be able to impersonate celebrities and have them say whatever you feel like. At least, not with VASA-1.

Microsoft also says it has no plans to commercialize VASA-1 in the near future:

Our research focuses on generating visual affective skills for virtual AI avatars, aiming for positive applications. It is not intended to create content that is used to mislead or deceive. However, like other related content generation techniques, it could still potentially be misused for impersonating humans. We are opposed to any behavior to create misleading or harmful contents of real persons, and are interested in applying our technique for advancing forgery detection. Currently, the videos generated by this method still contain identifiable artifacts, and the numerical analysis shows that there’s still a gap to achieve the authenticity of real videos.

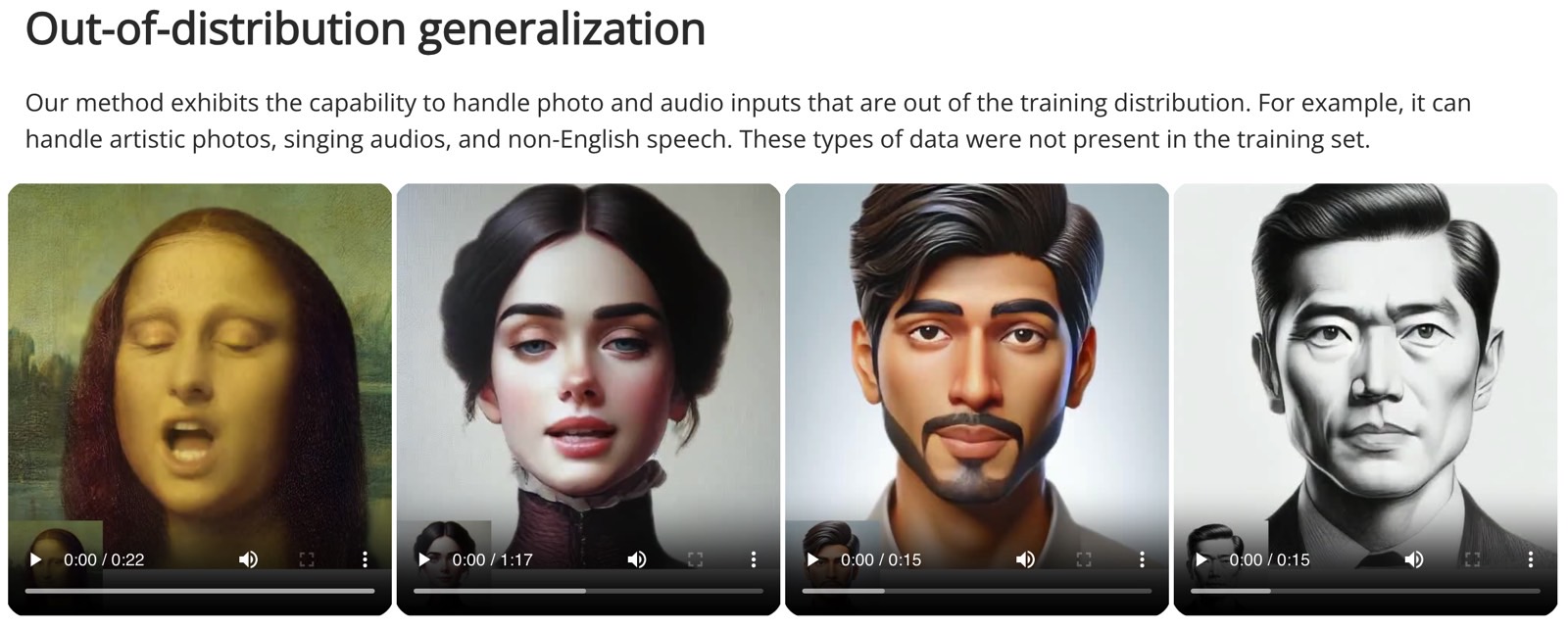

Moreover, all the images used to test the VASA-1 framework are of virtual people. They were generated with AI products like StyleGAN2 or Dall-E 3. The one “celebrity” exception is the Mona Lisa. Yes, Microsoft also used VASA-1 to animate the painting.

VASA-1 is only a research project for now. A proof of concept that shows this kind of AI functionality is possible. But if Microsoft has developed it, others must be working on similar technology. As the company points out, this type of tech has a great future. “It paves the way for real-time engagements with lifelike avatars that emulate human conversational behaviors.”

Microsoft concedes that it might go forward with a commercial product, but not until is is “certain that the technology will be used responsibly and in accordance with proper regulations.”

VASA-1 can give products like ChatGPT a face. Or it can help companies like Apple develop better spatial Personas for spatial computers like the Vision Pro. I’m only speculating here, of course. But I’m sure Microsoft isn’t the only big tech company exploring such genAI products.

How VASA-1 works

So what is VASA-1? It’s Microsoft’s first model for “generating lifelike talking faces of virtual characters with appealing visual affective skills (VAS), given a single static image and a speech audio clip.”

Microsoft is able to generate “high video quality with realistic facial and head dynamics but also supports the online generation of 512×512 videos at up to 40 FPS with negligible starting latency.”

The images in this post are all screenshots from Microsoft’s short VASA-1 announcement. But watching the samples makes it much easier to understand what the company has achieved here.

Microsoft set up a page at this link where you can watch plenty of demos of virtual subjects talking about all sorts of topics. The clips vary in length from a few seconds to a minute, and they’re incredible. If I showed you some of these clips and did not mention anything about VASA-1 or AI, you’d think these are real humans having a conversation.

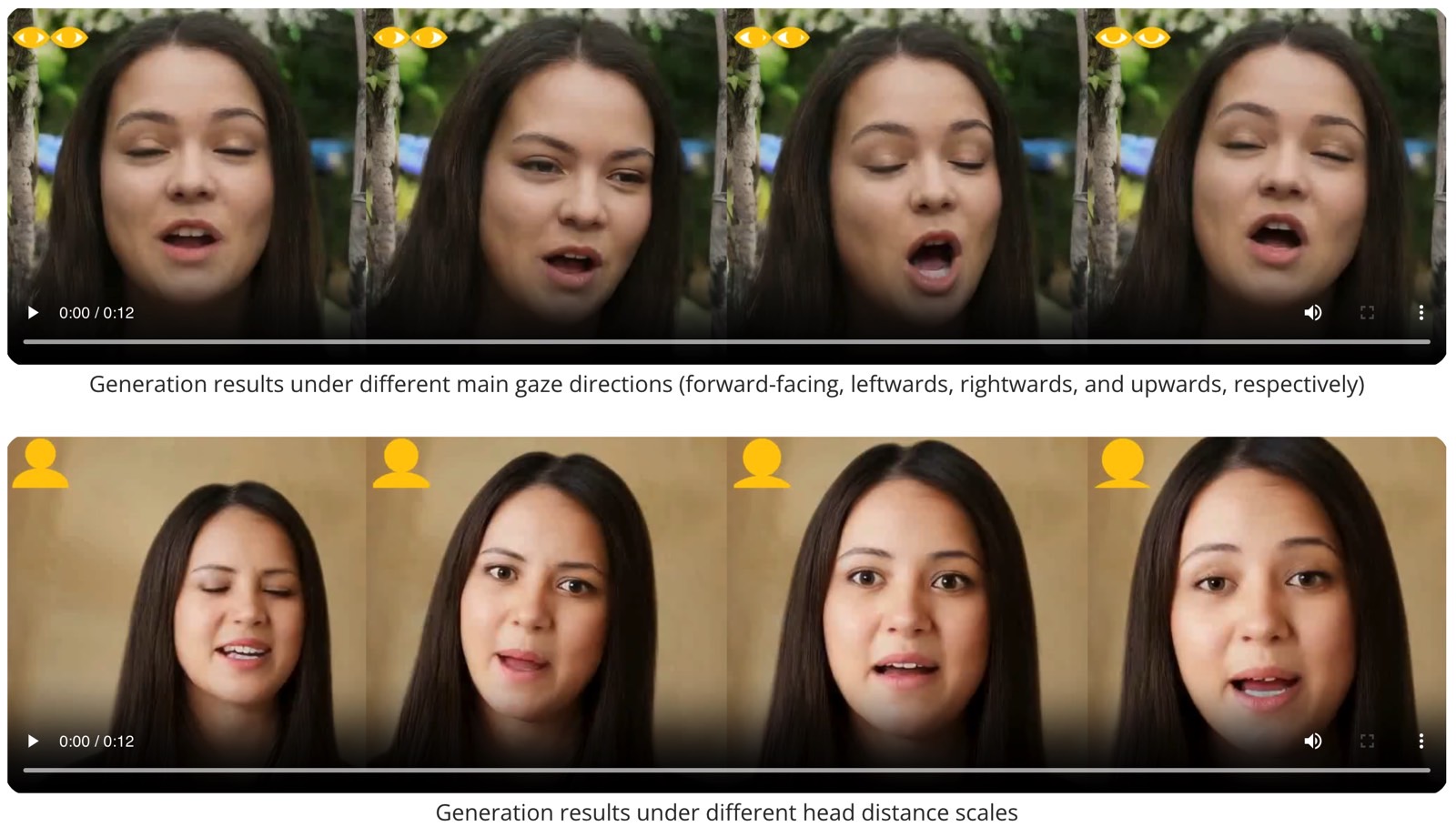

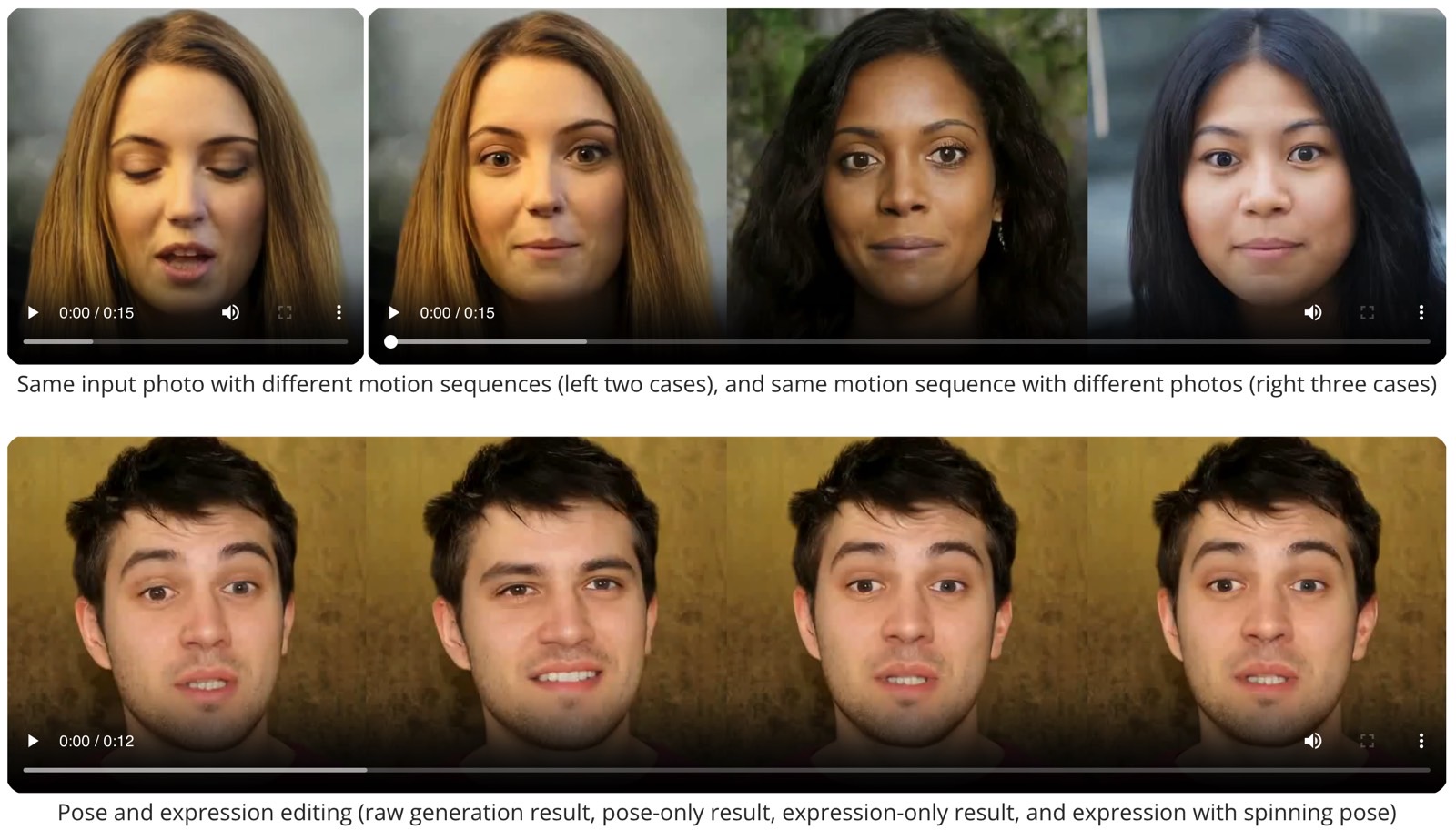

The demos also show that VASA-1 can make all sorts of changes to the portrait image that starts the process. You can change the position of the head, the direction of the gaze, and zoom in and out.

Furthermore, you can apply specific emotions to match the content of the audio file and the required tone. This is absolutely insane AI technology, which I’m sure will power commercial products in the not-too-distant future once we have regulations in place to safeguard against impersonation and misleading content.