ChatGPT is an incredible resource that you should familiarize yourself with as quickly as possible. I already explained that I think the future of computing will rely on artificial intelligence (AI) like ChatGPT and the kind of augmented reality (AR) that Vision Pro can provide. But until we get there, we’ll have to accept software and hardware compromises. For ChatGPT, that means dealing with various limitations, some of which will never disappear.

That is to say, ChatGPT will continue to refuse to provide certain assistance even once it gets smarter than it currently is. That’s because OpenAI put in place various provisions that ensure you can’t use ChatGPT to assist with nefarious activities.

ChatGPT isn’t always accurate

Generative AI products available right now will make mistakes. Plenty of them. Some, like Google Bard’s early blunder, will be costly. That means you shouldn’t trust ChatGPT blindly. Always ask for references and links that you can inspect yourself.

That’s because it’ll be a while until ChatGPT’s accuracy problem is fixed. Until then, you’ll have to deal with its hallucinations. That shouldn’t be a problem as long as you know ChatGPT can make stuff up.

Now that I’ve covered ChatGPT’s biggest issue, one that OpenAI can’t readily fix, let’s review some things ChatGPT can’t or won’t assist with.

ChatGPT can’t access the live internet

The biggest problem with the free version of ChatGPT is that it’s not connected to the internet. You do reach it using the internet, and it responds to your prompt via the internet. But it can’t see the live internet. ChatGPT can’t access data newer than September 2021.

Still, that shouldn’t be a hindrance. I used ChatGPT to quickly find running shoe options that met several criteria. The chatbot gave me “best of” lists matching my specifications. It also listed prices and gave me YouTube links to check the models.

But the chatbot’s outdated data meant the prices wouldn’t be accurate in 2023. And forget about getting 2022 and 2023 shoe models in those lists. Yet that assistance was invaluable. It meant that I’d either find great deals on older running shoes, or I’d just look for the updated 2023 versions.

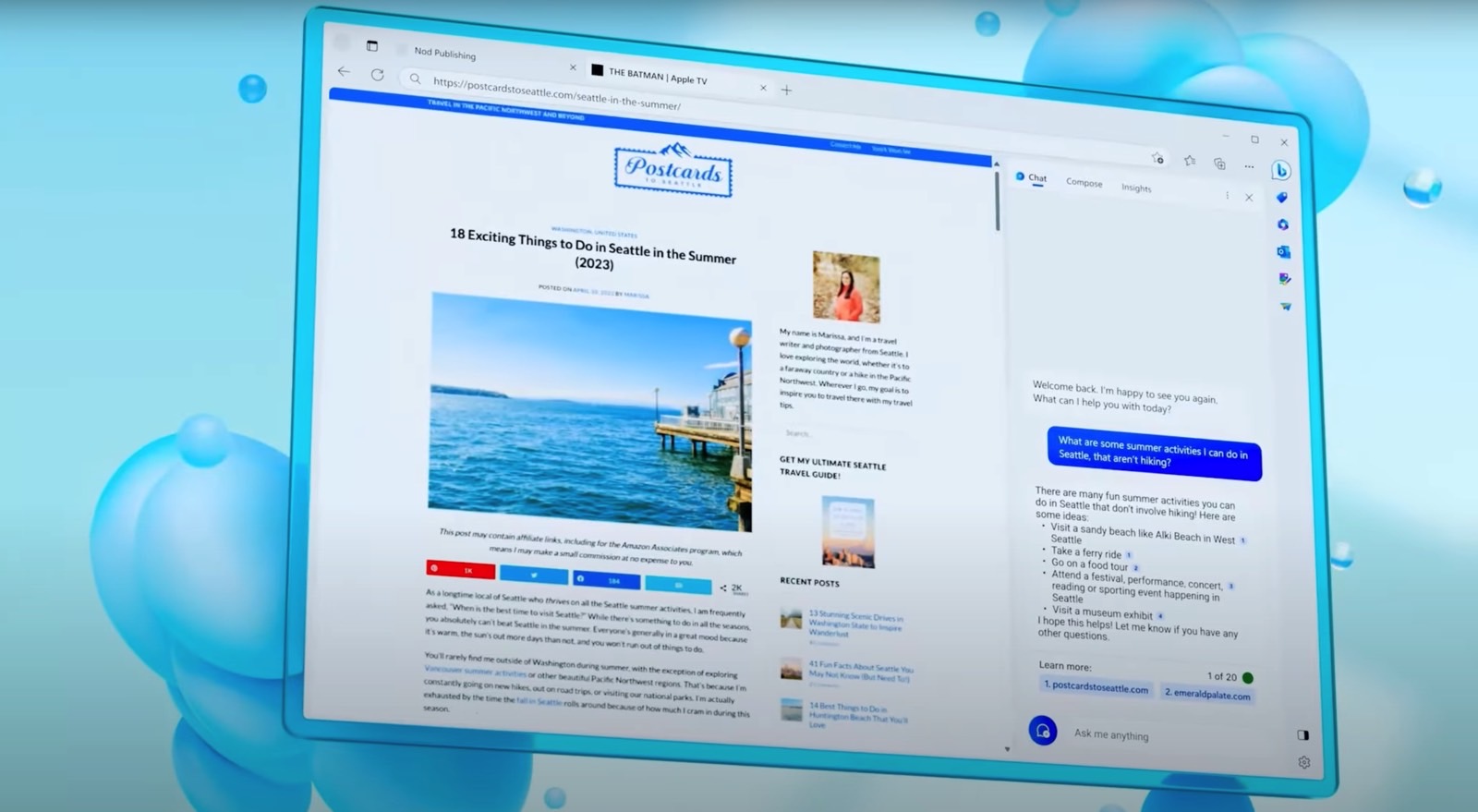

If you’re on ChatGPT Plus, however, the chatbot has access to the internet via Bing Search. And I’d expect the free option to get a similar connection in the future. You can also use Bing Search and Google Bard if you want internet-connected generative AI products.

ChatGPT can’t predict the future

You’d think that generative AI products would be able to predict the future, considering the massive amounts of data they have on hand. However, that’s not the case. Not for the free ChatGPT version, at least. And that’s not because the chatbot isn’t hooked up to the internet.

First of all, such a task might require an intensive use of resources. Secondly, it could be dangerous to release a generative AI model that can do that. Don’t expect future ChatGPT models to assist you with that. Unless you build them yourself.

ChatGPT won’t provide private information

OpenAI has scraped the internet for data to feed ChatGPT, likely violating copyright in the process. Moreover, the first versions of the chatbot lacked privacy controls, which meant all your data would reach its servers.

Despite that, OpenAI built rules into ChatGPT to prevent it from providing private information about other people to anyone. As SlashGear found, you can’t get Jeff Bezos’ medical records using ChatGPT. Don’t expect other chatbots to do it, either.

ChatGPT won’t help you commit a crime

If you thought that ChatGPT would be the perfect partner in crime, well, you’d be wrong. OpenAI built protections into the chatbot to ensure that won’t happen. And that won’t change as generative AI models get smarter.

If anything, you’ll be even less likely to outsmart future versions of ChatGPT. Because right now, if you ask the right questions, ChatGPT can assist with illegal activities. Like helping you find sites that offer free downloads of copyrighted content. Or creating malware so powerful that antivirus software can’t detect it.

Put differently, Europol is right to worry that products like ChatGPT can help creative criminals. It’s all about how good and convincing the prompt is.