Once WWDC 2025 rolls around, it’ll mark a year of Apple Intelligence on iPhone, iPad, and Mac. Unfortunately, there’s not much to get excited about right now, especially when you compare it to what AI tools like ChatGPT and Gemini can do.

A year ago, nobody really saw this coming. Sure, we knew Apple Intelligence might be delayed, but we expected it to eventually ship. Instead, Apple had to hold back its key feature, the smart Siri that could learn from your iPhone data and control apps.

Apple still has time to catch up, and I’m sure the enhanced Siri experience it envisioned last year is on the way. WWDC 2025 should also reveal new Apple Intelligence 2.0 features likely coming to iOS 19, iPadOS 19, and macOS 16.

In the meantime, there is one promising development on the Apple AI front, though it’s unclear how or when it might be integrated into Apple Intelligence. Apple researchers have introduced a model called Matrix3D that can create 3D scenes using just three photos of an object.

That’s a pretty big breakthrough, as it could have immediate applications in Apple’s software. However, it’s still uncertain whether Apple plans to incorporate the tech into its apps soon.

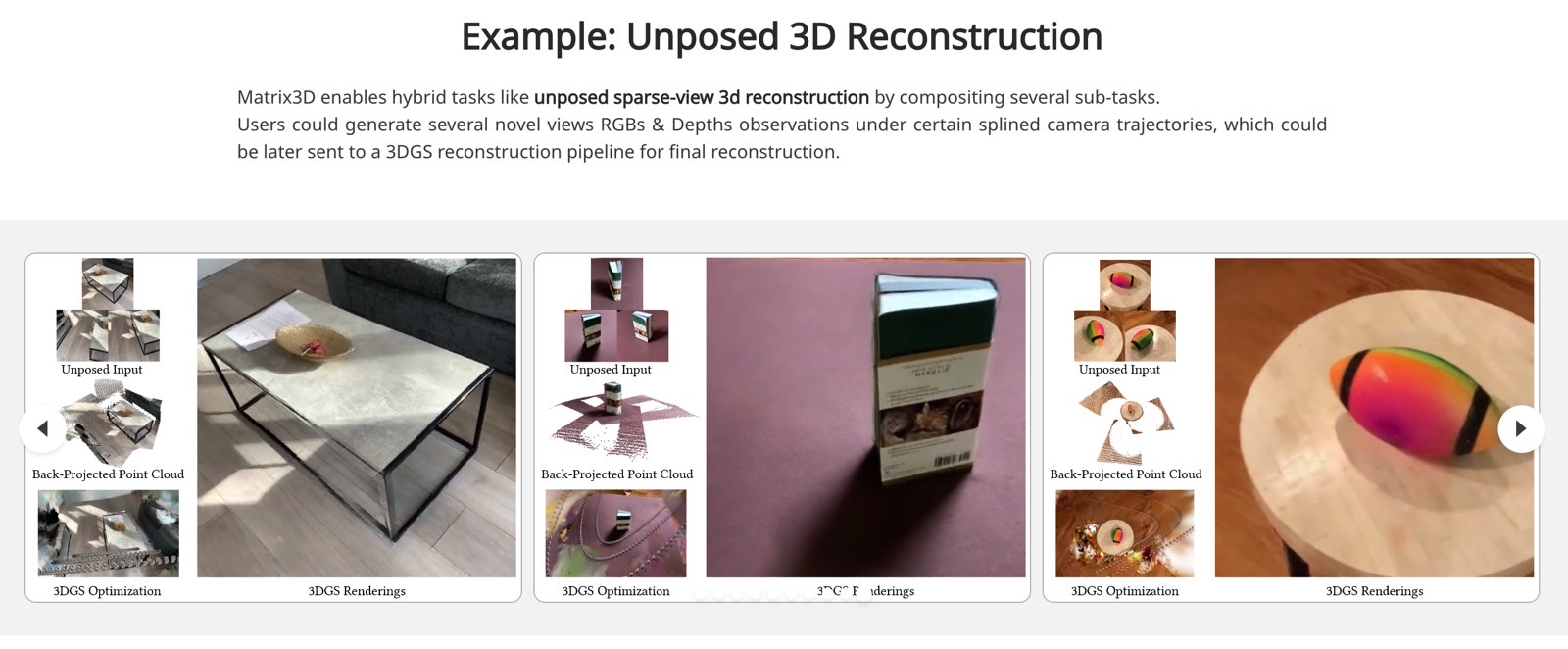

Apple researchers, in collaboration with scientists from Nanjing University and the Hong Kong University of Science and Technology, developed a way to generate 3D scenes from still images using AI. As shown in examples shared on GitHub, the results are stunning. They look like real videos, as if someone moved a camera around the object while filming.

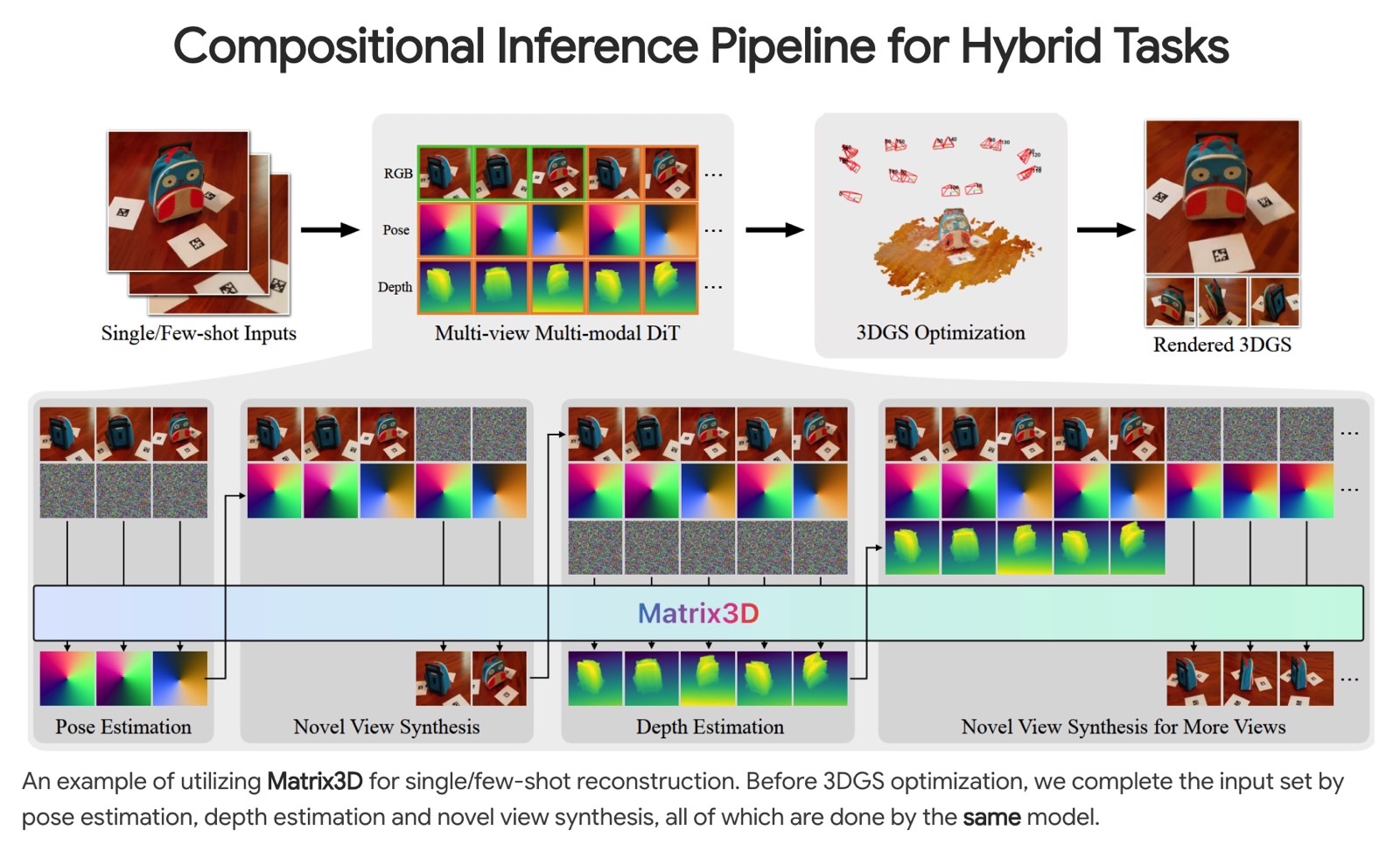

Each 3D scene is generated from the real photos provided for that example. Matrix3D works out where the camera was positioned for each photo, predicts per-pixel depth, and then renders new viewing angles to create the 3D view a user might want to see.
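To see why camera position and depth matter so much here, consider the classical pinhole-camera geometry below. This is only a sketch of textbook math, not Matrix3D’s method, which uses a learned model to render new views. The function names, the camera parameters (`fx`, `fy`, `cx`, `cy`), and the sample numbers are all made up for illustration, and the camera is assumed to slide sideways without rotating.

```python
def unproject(u, v, depth, fx, fy, cx, cy):
    """Lift pixel (u, v) with a known depth to a 3D point in camera coordinates."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def move_camera(point, translation):
    """Express a 3D point relative to a camera shifted by `translation`.

    Assumption: the camera only translates; rotation is left out to keep
    the sketch short.
    """
    return tuple(p - t for p, t in zip(point, translation))


def project(point, fx, fy, cx, cy):
    """Project a 3D point back onto the image plane; None if behind the camera."""
    x, y, z = point
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical camera: 500-pixel focal length, image center at (320, 240).
FX, FY, CX, CY = 500.0, 500.0, 320.0, 240.0

# A pixel 100 px right of center, on a surface 2 meters away.
point = unproject(420.0, 240.0, 2.0, FX, FY, CX, CY)

# Slide the camera 0.2 meters to the right and see where the point lands.
new_pixel = project(move_camera(point, (0.2, 0.0, 0.0)), FX, FY, CX, CY)
print(new_pixel)  # the point shifts left in the new view, to about u = 370
```

Once depth and camera position are known, every pixel can be placed in 3D and re-imaged from somewhere else. The hard part, and the reason an AI model is needed, is filling in everything the original photos never captured.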

What’s also interesting is how Matrix3D was trained. Researchers gave the AI images of objects, masked some of the data that can be extracted from them, and had it predict the missing pieces on its own.
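The masking idea can be sketched in a few lines. This is a toy illustration, not Apple’s training code: the three field names (`image`, `camera_pose`, `depth`), the 50% masking probability, and the `mask_sample` helper are assumptions made for the example, and the real model may mask at a finer granularity.

```python
import random


def mask_sample(sample, rng, mask_prob=0.5):
    """Split one training sample into visible inputs and hidden targets.

    Each field is hidden with probability `mask_prob`. At least one field
    always stays visible and at least one is always hidden, so the model
    has something to look at and something to predict.
    Assumes `sample` has two or more fields.
    """
    names = sorted(sample)
    hidden = {name for name in names if rng.random() < mask_prob}
    if len(hidden) == len(names):
        hidden.discard(rng.choice(names))  # reveal one field
    if not hidden:
        hidden.add(rng.choice(names))  # hide one field
    visible = {k: v for k, v in sample.items() if k not in hidden}
    targets = {k: v for k, v in sample.items() if k in hidden}
    return visible, targets


# Placeholder strings stand in for real image, pose, and depth data.
sample = {
    "image": "<pixels>",
    "camera_pose": "<position and orientation>",
    "depth": "<per-pixel distances>",
}

rng = random.Random(0)
visible, targets = mask_sample(sample, rng)
print("model sees:", sorted(visible))
print("model must predict:", sorted(targets))
```

Because the hidden fields change from one sample to the next, a single model trained this way gets practice at several jobs at once, such as guessing depth from an image or guessing the camera position from an image and its depth.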

The team used tens of millions of images and depth frames from six public datasets to teach the AI how to build 3D scenes from standard photos.

So, how does this help the average iPhone user who wants to take advantage of Apple Intelligence? That’s a question Apple will need to answer as it releases more AI research. What’s the point of impressive AI breakthroughs if they never find their way into real products? It’s a bit like showing off potential iPhone patents without saying if any of them will make it into the actual devices.

That said, it’s not hard to imagine practical uses for Matrix3D within Apple Intelligence. Users might be able to edit their photos with AI, generating alternate views or repositioning objects in the scene.

Apple already lets users shoot Spatial Photos on devices like the iPhone 16 series. Those photos are best experienced on the Vision Pro headset.

Matrix3D could also be a powerful tool for developers working on AR/VR content for Vision Pro. The same goes for game developers building for iPhone, iPad, and Mac. Instead of relying on resource-heavy methods to build 3D assets, they could use Matrix3D to streamline the process.

There’s also the massive shopping app market on iPhone. AI tools like Matrix3D could let users see 3D views of products, such as furniture, before making a purchase.

Since Apple has published both the research and the code online, other AI companies might start building their own versions of Matrix3D.

This is all speculation for now, but I’m hopeful that Apple will bring some of its AI research into actual products through Apple Intelligence.

In the meantime, check out the Matrix3D page on GitHub and browse the 3D scene samples Apple shared, including ones you can interact with.