In the age of AI, new software releases can arrive when you least expect them. There’s no fixed schedule: AI firms launch new models and features whenever they consider them ready for commercial deployment.

Gemini is the best example of that, with Google announcing a few major upgrades and new features in the past few days. The Gemini 2.0 Flash reasoning model now powers Deep Research and Personalization features, for example.

Gemini also received Canvas support similar to ChatGPT’s, along with NotebookLM’s Audio Overviews feature, which lets you turn summaries into podcasts. OpenAI doesn’t have that sort of AI functionality in ChatGPT.

But OpenAI gave ChatGPT an upgrade of its own with o1-pro, which joins the likes of o1, o3-mini, and o3-mini-high. ChatGPT o1-pro might be even better for reasoning jobs than the others, but it’s also the most expensive of the bunch. As such, you and I can’t use it unless we pay extra.

ChatGPT o1-pro was released last December for ChatGPT Pro, the premium tier that costs $200/month. A few months later, OpenAI is now ready to give developers access to o1-pro, as long as they can afford the higher price.

OpenAI announced the ChatGPT o1-pro developer release on X, teasing the model’s better performance, but also the increased costs:

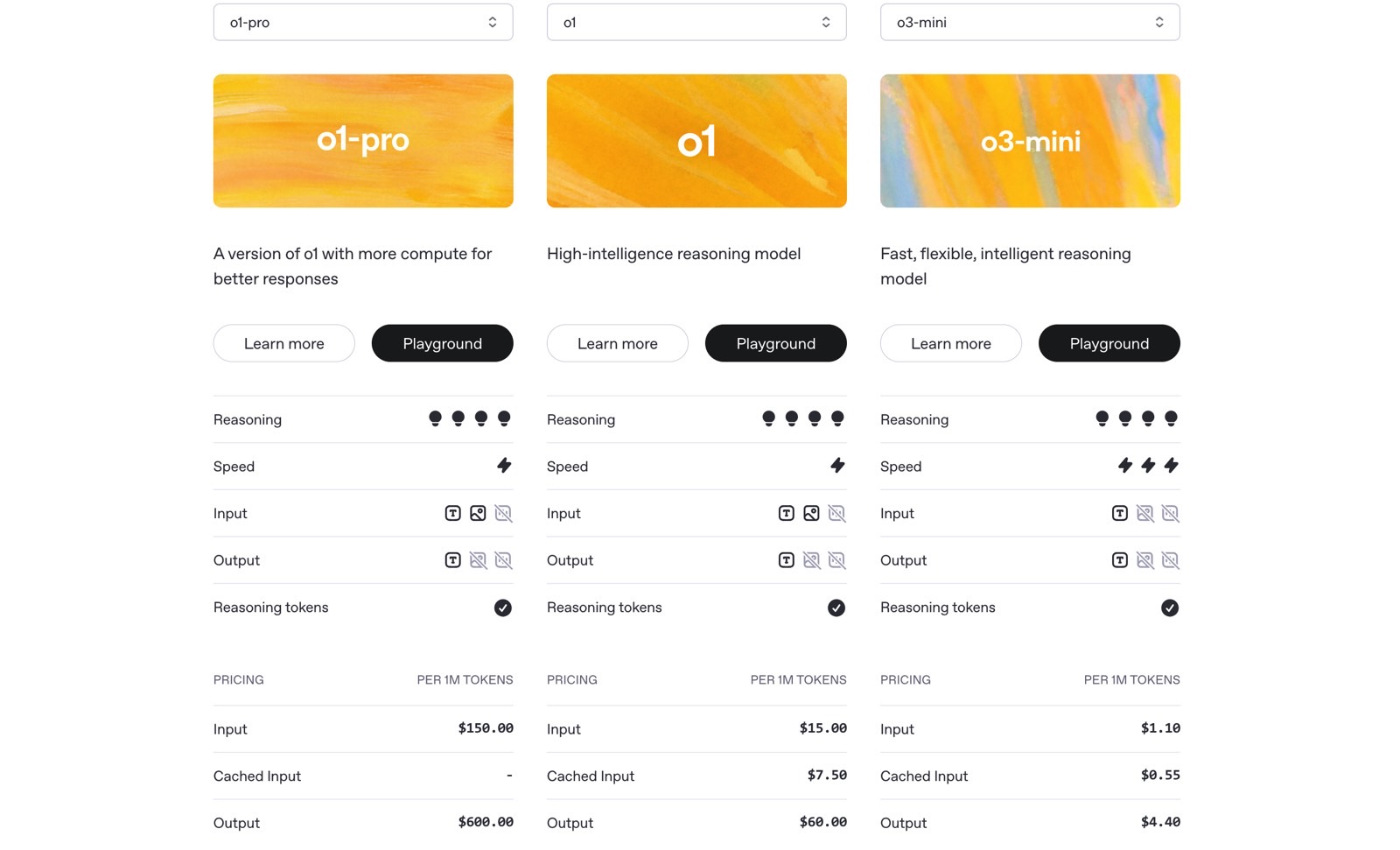

It uses more compute than o1 to provide consistently better responses. Available to select developers on tiers 1–5. Supports vision, function calling, Structured Outputs, and works with the Responses and Batch APIs. With more compute, comes more cost: $150 / 1M input tokens and $600 / 1M output tokens.

One million tokens correspond to roughly 750,000 words. Like o1 and o3-mini, o1-pro has a context window of about 200,000 tokens.
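To get a feel for what those rates mean per request, here is a minimal Python sketch that converts word counts to tokens using the rough 750,000-words-per-million-tokens rule and applies the quoted o1-pro prices. The helper names `words_to_tokens` and `o1_pro_cost` are made up for illustration, and the word-to-token ratio is only an approximation. The sketch also ignores the hidden reasoning tokens that OpenAI bills as output for its reasoning models, so real bills will likely be higher.

```python
# Prices quoted in OpenAI's announcement, in USD per 1M tokens.
O1_PRO_INPUT_PER_M = 150.0
O1_PRO_OUTPUT_PER_M = 600.0

# Rule of thumb from the article: 1M tokens is about 750,000 words.
# This is an approximation, not an exact tokenizer count.
WORDS_PER_TOKEN = 0.75


def words_to_tokens(words: int) -> int:
    """Rough token estimate for a word count (hypothetical helper)."""
    return round(words / WORDS_PER_TOKEN)


def o1_pro_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request at the quoted o1-pro rates."""
    return (
        input_tokens * O1_PRO_INPUT_PER_M
        + output_tokens * O1_PRO_OUTPUT_PER_M
    ) / 1_000_000


if __name__ == "__main__":
    prompt_tokens = words_to_tokens(1_500)  # about 2,000 tokens
    answer_tokens = words_to_tokens(750)    # about 1,000 tokens
    cost = o1_pro_cost(prompt_tokens, answer_tokens)
    print(f"Estimated cost: ${cost:.2f}")
```

Under these assumptions, a 1,500-word prompt with a 750-word visible answer works out to roughly 90 cents for a single call.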

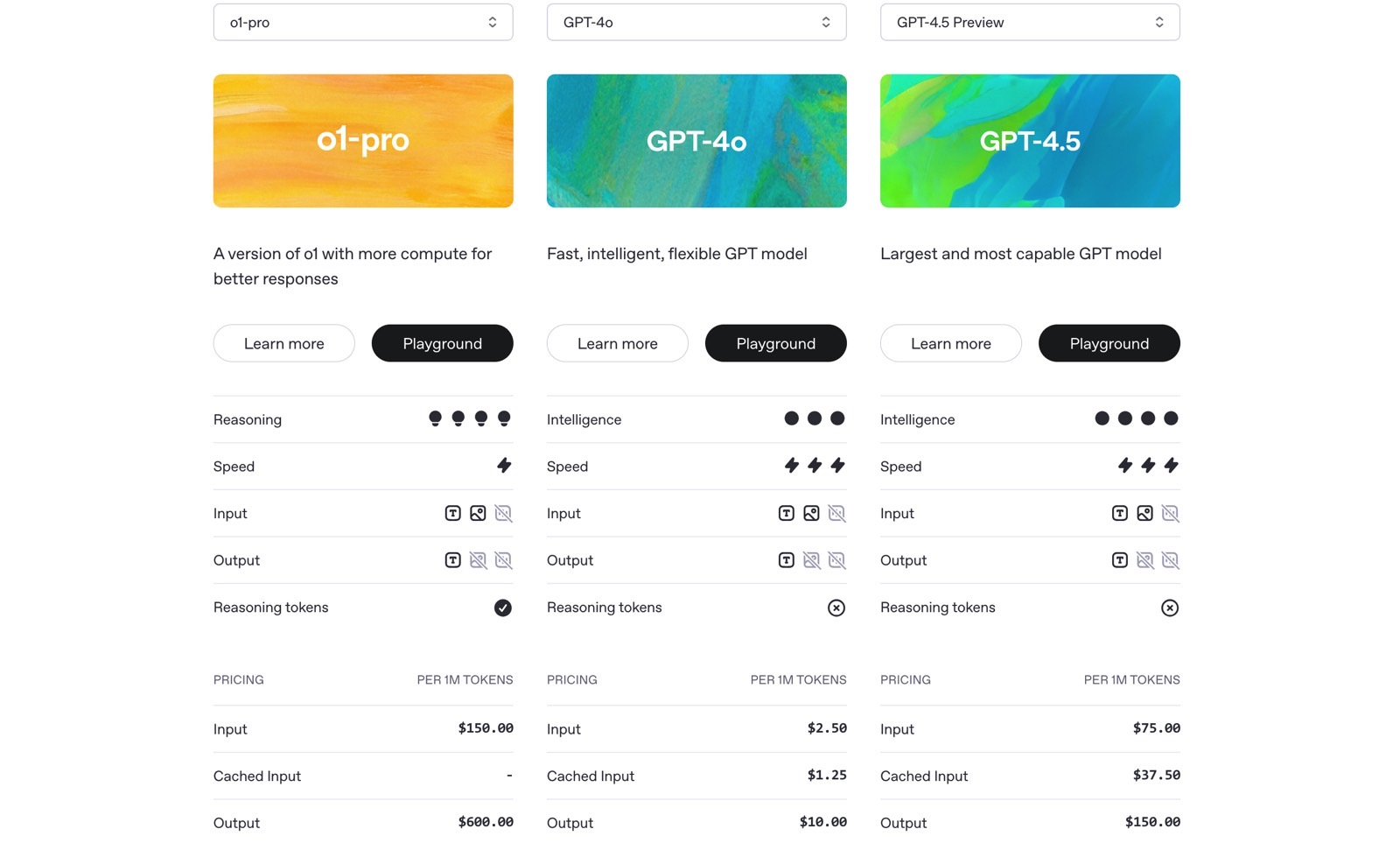

A comparison on OpenAI’s developer platform shows that o1-pro is 10 times more expensive than ChatGPT o1 and 136 times more costly than o3-mini.

Compared to GPT-4.5, which is available in preview mode, o1-pro is twice as expensive for input tokens and four times as expensive for output tokens.
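The multiples are easy to check with a few lines of Python. Only the o1-pro prices come from OpenAI’s announcement. The prices for the other models in this sketch are assumptions, back-calculated from the ratios quoted in this article rather than taken from an official price list, and the dictionary keys and the `price_ratio` helper are illustrative names.

```python
# USD per 1M tokens as (input, output).
PRICES = {
    "o1-pro": (150.0, 600.0),   # stated in the announcement
    "o1": (15.0, 60.0),         # assumed: implied by "10 times"
    "o3-mini": (1.10, 4.40),    # assumed: implied by "136 times"
    "gpt-4.5": (75.0, 150.0),   # assumed: implied by 2x input, 4x output
}


def price_ratio(model: str, baseline: str = "o1-pro") -> tuple[float, float]:
    """Return how many times pricier `baseline` is than `model`,
    as an (input_ratio, output_ratio) pair."""
    base_in, base_out = PRICES[baseline]
    model_in, model_out = PRICES[model]
    return base_in / model_in, base_out / model_out


if __name__ == "__main__":
    for name in ("o1", "o3-mini", "gpt-4.5"):
        in_ratio, out_ratio = price_ratio(name)
        print(f"o1-pro vs {name}: {in_ratio:.0f}x input, {out_ratio:.0f}x output")
```

If OpenAI changes any of these prices, only the table needs updating.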

These costs will not mean anything to ChatGPT users who are not developers. You’ll use ChatGPT via the Free subscription or pay a fixed fee for the Plus and Pro premium tiers. It’s developers who will have to worry about the costs OpenAI advertises.

As for o1-pro’s reasoning performance, an OpenAI spokesperson gave TechCrunch a response similar to the teaser above, hyping the improvements o1-pro will offer:

o1-pro in the API is a version of o1 that uses more computing to think harder and provide even better answers to the hardest problems. After getting many requests from our developer community, we’re excited to bring it to the API to offer even more reliable responses.

However, as TechCrunch points out, o1-pro’s performance wasn’t always spectacular for the ChatGPT Pro users who got access to it in December. Even some internal OpenAI benchmarks showed that o1-pro does only slightly better than o1 on coding and math problems. Still, the more expensive ChatGPT model is more reliable.