Commercial AI chatbot products like ChatGPT, Claude, Gemini, DeepSeek, and others have safety precautions built in to prevent abuse. Because of the safeguards, the chatbots won’t help with criminal activity or malicious requests — but that won’t stop users from attempting jailbreaks.

Some chatbots have stronger protections than others. As we saw recently, DeepSeek might have stunned the tech world last week, but DeepSeek is not as safe as other AI when it comes to offering help for malicious activities. Also, DeepSeek can be jailbroken with certain commands to circumvent the built-in censorship. The Chinese company will probably improve these protections and prevent known jailbreaks in future releases.

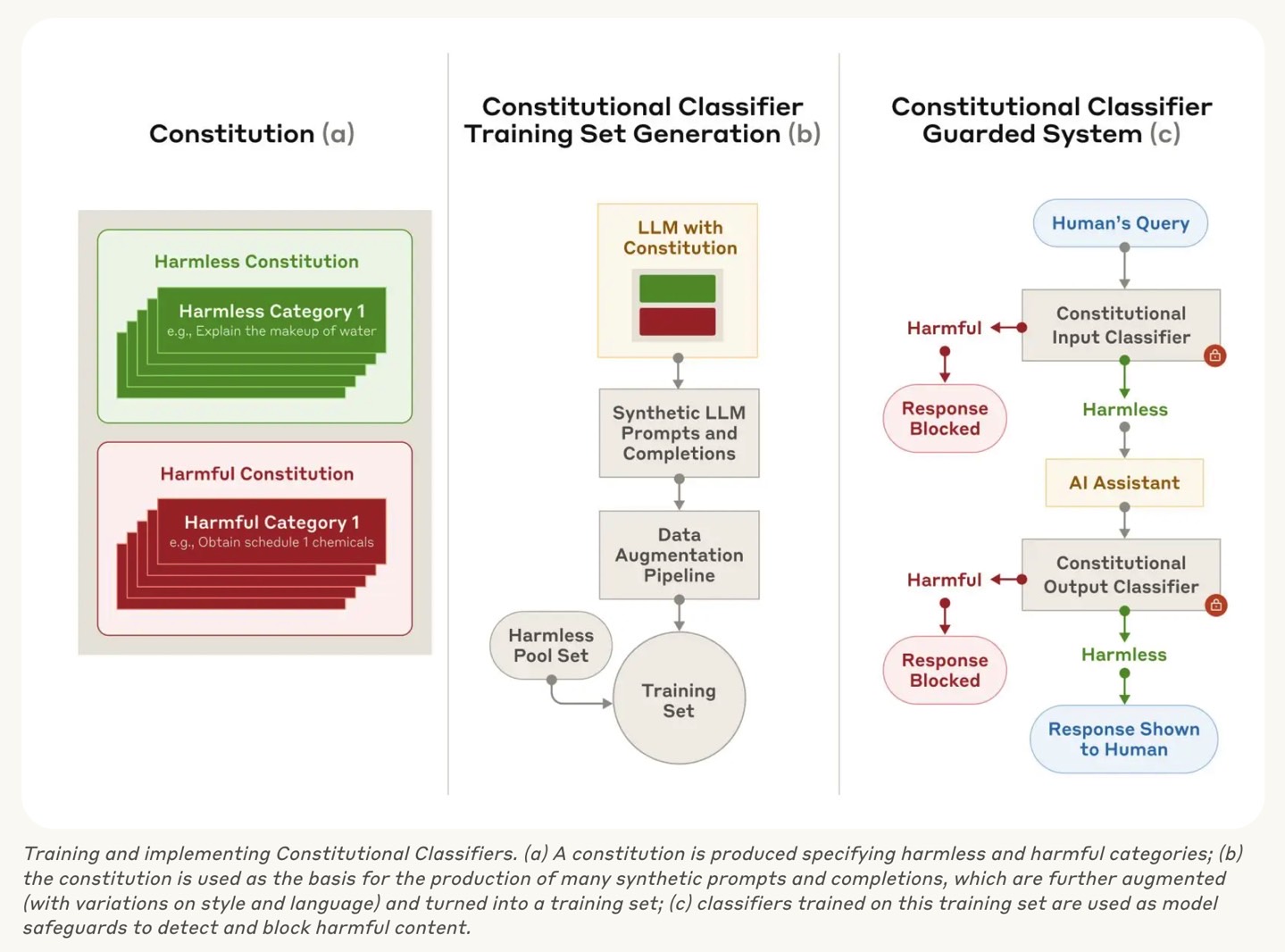

Meanwhile, Anthropic already has extensive experience dealing with jailbreak attempts on Claude. The AI firm has devised a brand-new defense against universal AI jailbreaks called Constitutional Classifiers that prevents Claude from providing help with nefarious activities. It works even when dealing with unusual prompts that might jailbreak some other AI models.

The system is so good that over 180 security researchers spent more than 3,000 hours over two months trying to jailbreak Claude. They were not able to devise a universal jailbreak. You can test your luck if you think you have what it takes to force Claude to answer 10 questions with your jailbreak.

Hackers could win a $15,000 bounty if their universal jailbreak answers the 10 forbidden questions. The jailbreak would have to work on all of the prompts to qualify for the prize.

The company’s first internal tests occurred over the summer with a Constitutional Classifiers version built into Claude 3.5 Sonnet (June 2024). While the anti-jailbreak system worked, it was too resource-intensive and it refused too many harmless questions. The AI has to separate harmless prompts like “recipe for mustard” from malicious ones like “recipe for mustard gas.”

Constitutional Classifiers are based on Anthropic’s Constitutional AI, a set of principles similar to a constitution that Anthropic uses to align Claude. The classifiers define classes of content that the AI can respond to, like the mustard example above.

Anthropic used Claude and the Constitutional Classifiers to create “a large number of synthetic prompts and synthetic model completions across all the content classes.” The researchers translated the prompts into different languages and transformed them into styles of known jailbreaks. These prompts were then used to train the AI to recognize when a prompt was harmful and when it was not.

After the first successful experiment, Anthropic used Claude to create a more resource-efficient anti-jailbreaking tool that’s also less likely to refuse harmless prompts.

The company ran another experiment on Claude 3.5 Sonnet (October 2024), where they “synthetically generated 10,000 jailbreaking prompts including many of the most-effective attacks on current LLMs, as well as attacks designed to circumvent classifier safeguards.”

The “Guarding Claude with Constitutional Classifiers” model rejected 95% of jailbreak attempts. It refused only 0.38% more harmless prompts than a version of Claude with no protection in place. This model was 23.7% more expensive in terms of compute cost than the regular Claude model.

Still, Anthropic achieved a success here that should be paid attention to. Other AI firms in the industry, whether OpenAI, Google, or DeepSeek, should consider similar techniques for ensuring their AIs are safe for humans.

That’s not to say that clever AI users won’t develop new jailbreak techniques that work on Claude and other models in the future. But Anthropic can update its Constitutional Classifiers defenses to handle new ideas.

The full research paper is available at this link. If you want to try to jailbreak Claude with Constitutional Classifiers, check out this page.