If you thought generative AI photos were incredibly impressive before Adobe’s Max event, prepare to be pleasantly surprised. Adobe introduced three new generative AI models at the show, including Firefly Image 2, which will let you create even more convincing AI images than before. These are great features to have on hand. Anyone can now become a pro at Photoshop without actually having any experience with the program.

But this level of generative AI sophistication opens the door to abuse. The more realistic the photos you make with Firefly Image 2, the more difficult it’ll be to figure out which photos are genuine and which have been created with the help of AI.

That’s why it’s great to see Adobe spearheading a Content Credentials program, which will essentially put a “digital nutrition label” on generative AI photos. However, there’s a big caveat here: Applying these labels will be optional.

Adobe unveiled the Firefly Image 2 Model at Max 2023, as well as the Firefly Vector Model and the Firefly Design Model. The company says more than three billion images have been generated so far with Firefly Image 1, one billion of which were created in the past month.

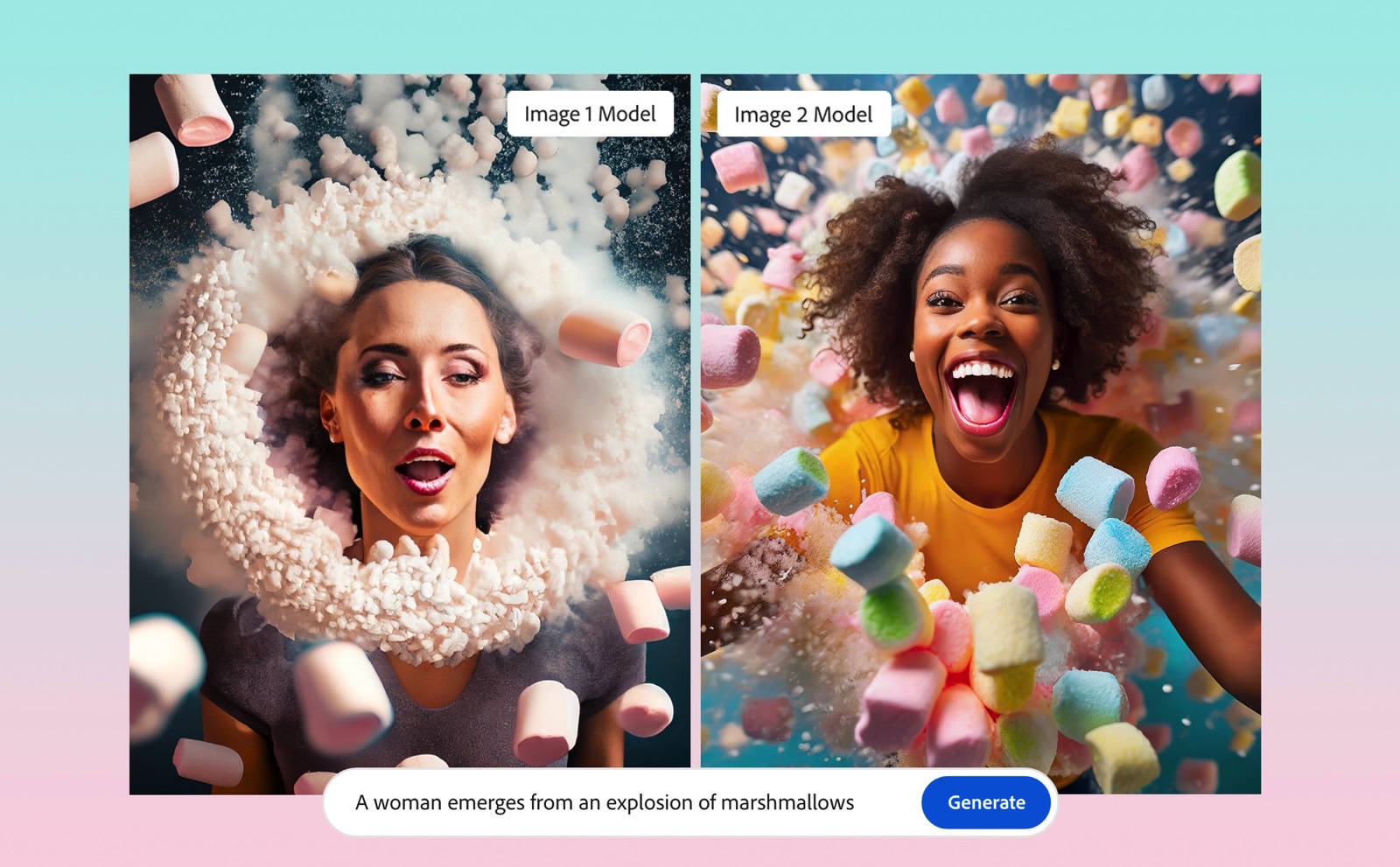

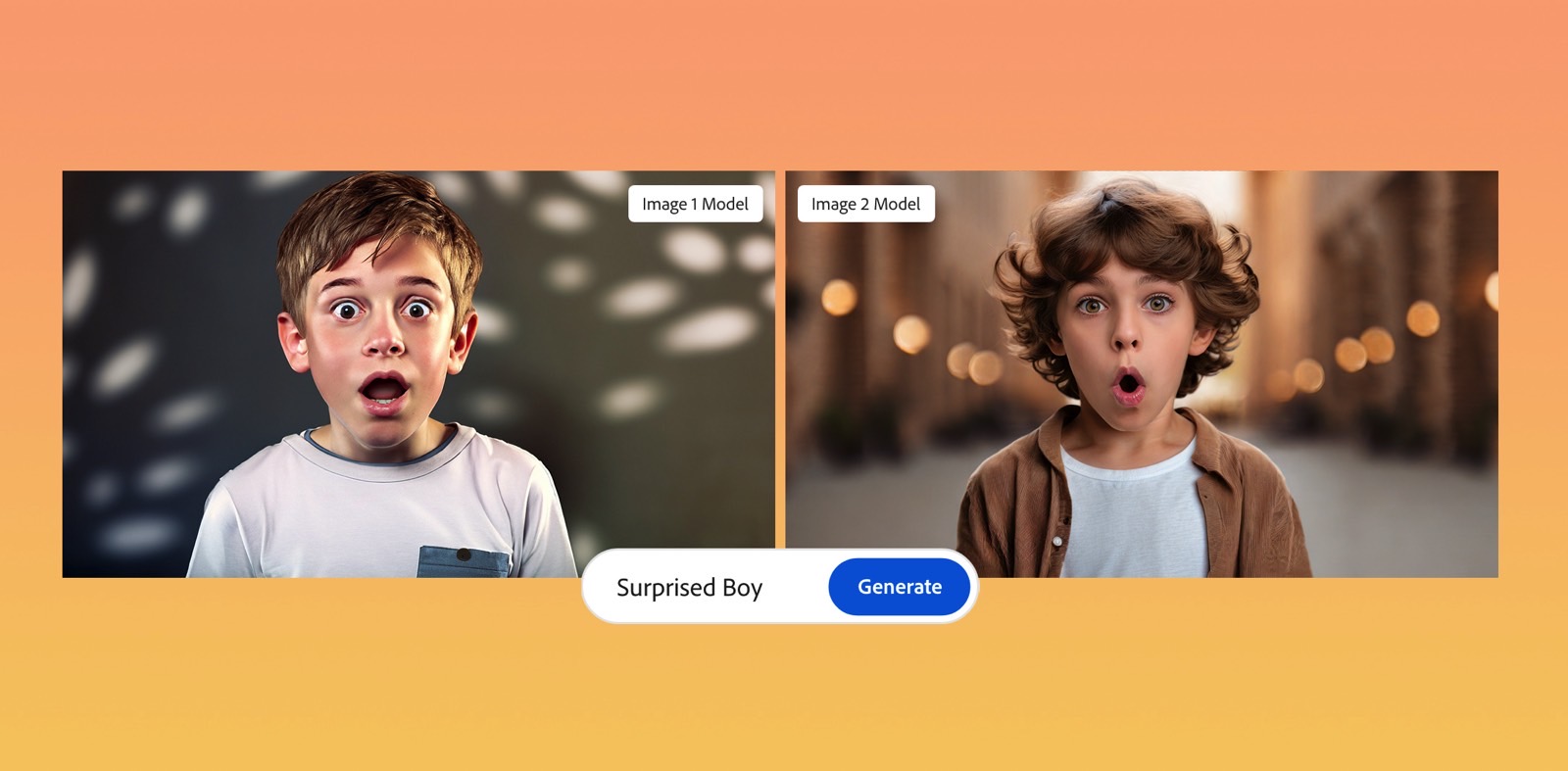

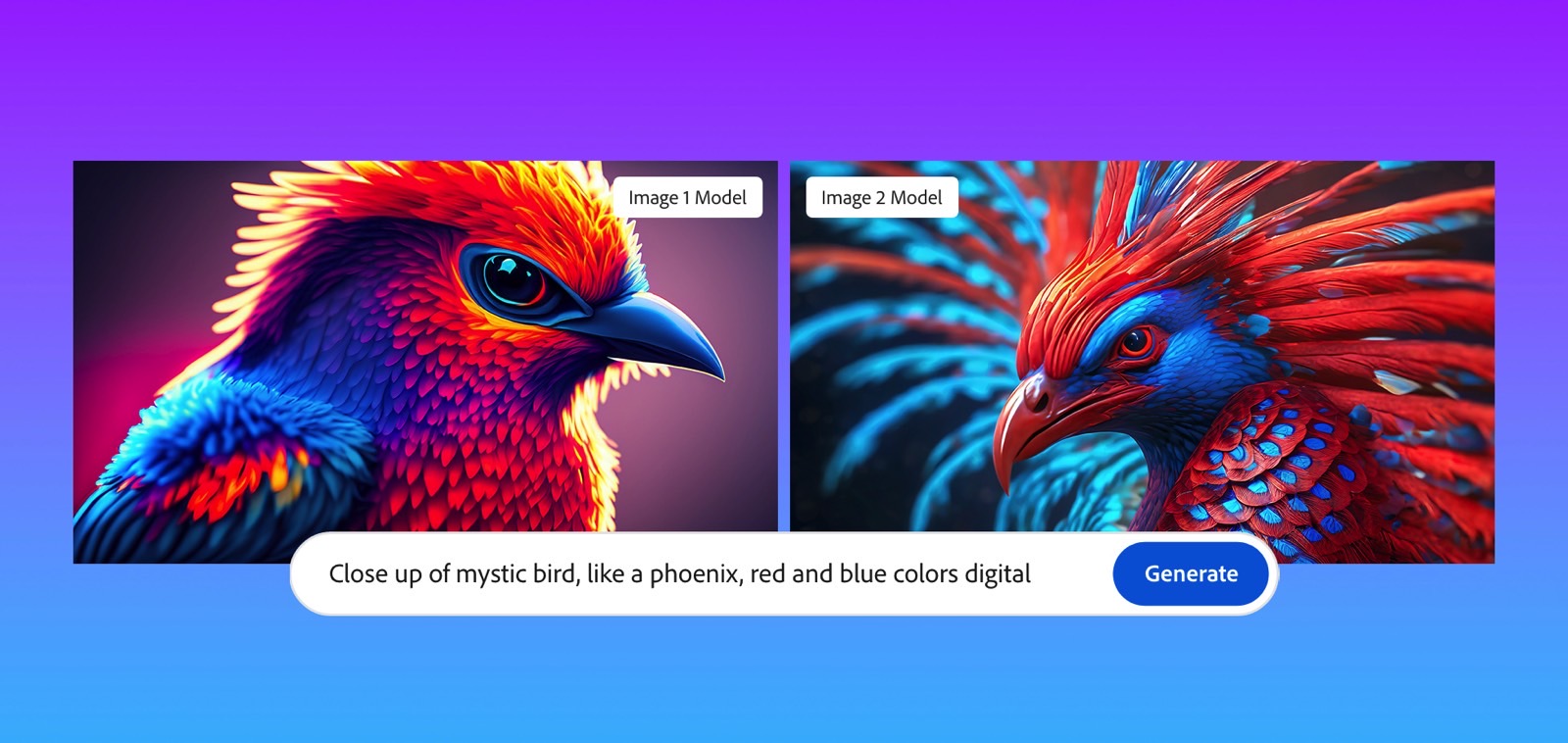

Firefly Image 2 will make the generative AI photos even more lifelike than before. Here’s how Adobe describes the new photo settings of the updated AI model:

Firefly Image 2 enables more photorealistic image quality with higher-fidelity details, including skin pores and foliage, plus greater depth of field control, motion blur, field of view and generation. Users can apply and adjust photo settings, similar to manual camera lens controls, helping creators quickly achieve their creative visions, saving time and resources. Auto Mode will now automatically select either “photo” or “art” as an image generation style, then apply appropriate photo settings during prompting, guaranteeing great results without tinkering.

The samples above and below highlight the major differences between Firefly Image 1 and Image 2. Yes, creating great content on the fly will be much easier. But it’ll also be that much easier to generate deepfakes of people or images of events that never happened.

Adobe also unveiled a new Generative Match feature that will be part of its products. It brings another layer of complexity to generative AI imagery.

With Generative Match, you can tell the AI what style to apply to a certain image. That will come in handy when working on marketing campaigns and connected products. You’ll be able to maintain a consistent style across the board.

But Generative Match opens the door to abuse, too. Specifically, some creators might use copyrighted content as inspiration to create images in a similar style. Adobe says it’ll enforce a few strict policies to prevent that.

First, Adobe says it trained its Firefly models on licensed content, such as Adobe Stock, and on public-domain content whose copyright has expired. Second, when a user uploads an image to show Generative Match the style it should replicate, a prompt will ask them to confirm they have the right to use that image. Adobe will then keep a thumbnail of the photo.

This feels more like Adobe trying to protect itself against future litigation. But Adobe has to start somewhere, and hopefully, these requirements will prevent Generative Match abuse.

The bigger protection against AI-generated fake imagery is the new Content Credentials feature. It’s built on an open standard driven by the Content Authenticity Initiative, which Adobe co-founded. The initiative has nearly 2,000 members, including Microsoft, Leica, Nikon, and others.

The way Content Credentials works is pretty simple. Creators will be able to place a Content Credentials pin on top of their AI images (see the image at the top of this post). That pin will always move with the image and will never be removed.

Just tap the white “cr” pin that will appear in the top right corner of an image, and you’ll get a complete history of the photo. You’ll know which generative AI tool has been used to create it.

As I said before, the big caveat here is that Content Credentials are optional:

Creators can choose to attach Content Credentials to their content, which might include things like whether AI was used or not. Voila! This information is added to the edit history of the content—creating a permanent record that can be confirmed.

Voila! Abusers will be able to circumvent this by not adding any pins to their images. So will those creators who do not want to watermark their images.

While this is a good start, Adobe just proved at Max that generative AI is getting a lot better at generating fake content. Policing this content will be imperative. Features like Content Credentials should become mandatory rather than optional. And more tech companies developing generative AI products like Firefly should join this standard or create their own visual indicators for AI content.

As for Adobe’s new generative AI products unveiled at Max, they’ll be available in beta versions for you to try in some Adobe apps (Illustrator and Express), as well as online in the Firefly web app.