When Humane announced the Ai Pin last year, it seemed like the company was “fixing” a problem we didn’t have. The wearable device is essentially a phone without a screen, focusing entirely on AI with voice input at the center. A few months later came the Rabbit r1, which brought a new type of AI behavior: The r1 can use some phone apps on your behalf.

These gadgets made it seem like we were about to be flooded with AI products in the coming years, including the “iPhone of AI” that Jony Ive and Sam Altman might be working on. But we already have AI installed on our main computers as well as the iPhones and Android handsets we use daily. All they need is proper AI.

Cut to this month, as OpenAI and Google are delivering huge AI upgrades. ChatGPT’s GPT-4o model is now available to anyone, including users on the free plan. Google baked Gemini into all of its products, including Android, which will have AI at its center. And it already looks like the GPT-4o and Gemini features coming to phones and the web are ready to kill devices like the Ai Pin and the r1.

The Ai Pin’s big selling point is a custom AI that handles voice commands flawlessly so you can focus on being in the moment. Real-life tests showed that the AI doesn’t always work well and that battery life can be problematic.

But why even buy the Ai Pin for $700, not including the second data plan, when you have GPT-4o on your phone right now? The new model handles voice commands brilliantly, and the chats with AI are starting to sound more like the conversations we have with other people.

You can interrupt the AI without having it lose its train of thought, so you can update your prompts. ChatGPT’s voice also sounds shockingly human.

On top of that, GPT-4o lets you show the AI images and video and ask questions about them. All you’d have to do is power on your phone and give GPT-4o “eyes.” It’s a much better experience than the Ai Pin. Plus, the battery will last longer, and there are no associated costs.

The Gemini Assistant will get similar abilities later this fall, probably once Android 15 launches. Google demoed the Project Astra agents that work just like ChatGPT’s voice abilities after the GPT-4o update.

ChatGPT can’t control apps for you, at least not for the moment. Neither can Gemini. But the latter is being built into every app and service that Google offers. Interestingly, Google built a new trip planner into Gemini that will let you give the AI complex instructions. Here’s an example from Google:

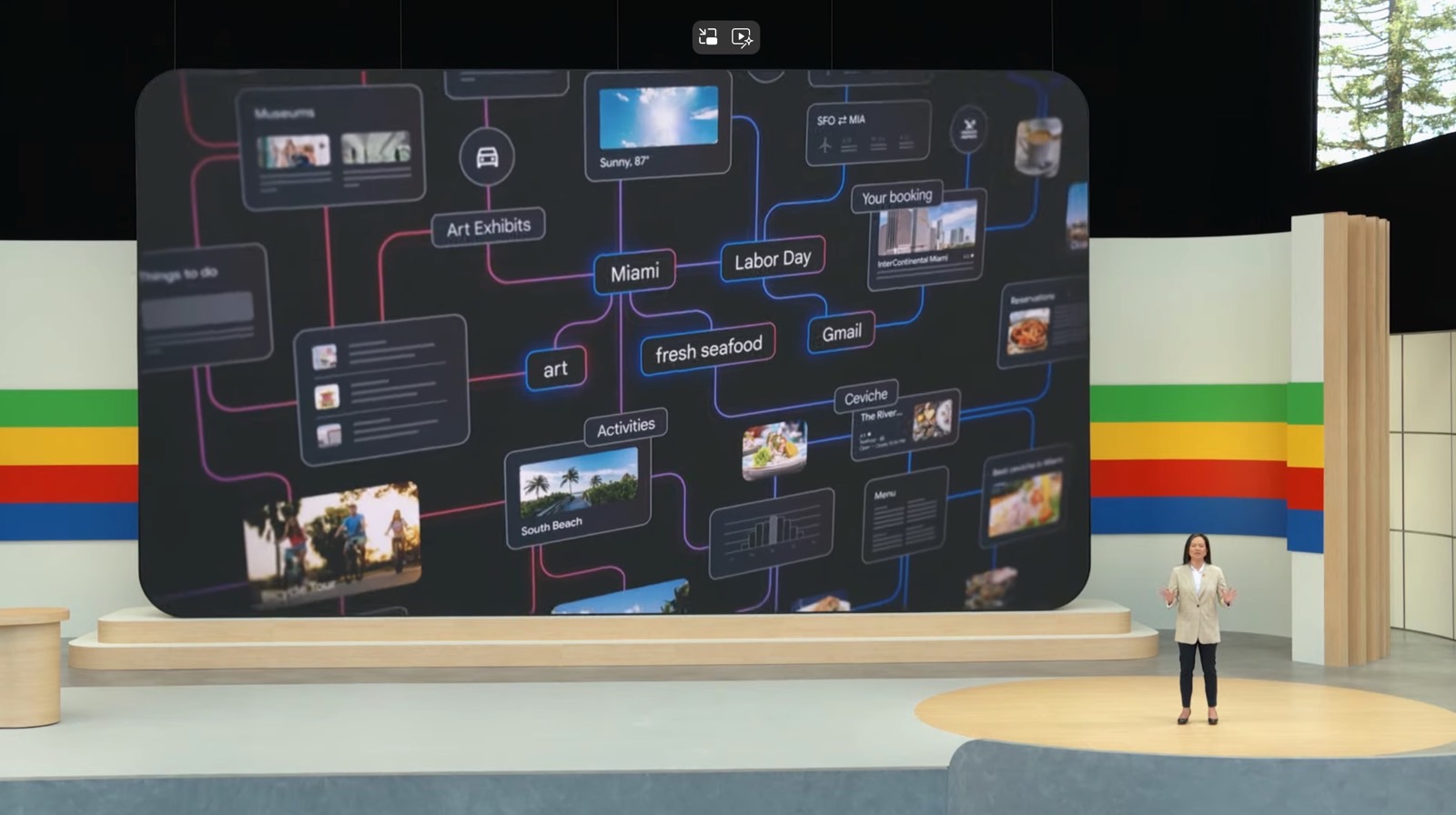

“My family and I are going to Miami for Labor Day. My son loves art and my husband really wants fresh seafood. Can you pull my flight and hotel info from Gmail and help me plan the weekend?”

Gemini will take into account your preferences, work out booking schedules and travel times, and assemble its suggestions into a customized itinerary. Gemini doesn’t handle the reservations itself, nor does it process payments. But if Gemini can plan a vacation for you now, I’d imagine it could also make the necessary purchases in a future version.

Why use a device like the Rabbit r1, which is an Android app running on what’s essentially an Android phone, when Gemini is already getting there?

I’d expect other companies to want to offer similar AI assistant capabilities in the coming months and years. Microsoft can adapt GPT-4o for its own needs. And Meta can add voice to its AIs. Then there’s Apple. The iPhone maker should be able to integrate AI features into its products in ways only Google can match.

As for that “iPhone of AI,” I’m already starting to wonder whether we really need it, if AI on phones is about to get this much better. I’m starting to get why Sam Altman teased in a recent interview that we might not want new products to use good AI.