On Wednesday morning in Mountain View, California, Google took the stage for its annual I/O developers conference. The first big announcement of the day came in the form of Google Lens: a “set of vision-based computing capabilities” that will give Google Assistant the ability to detect and analyze the things it sees through your smartphone’s camera.

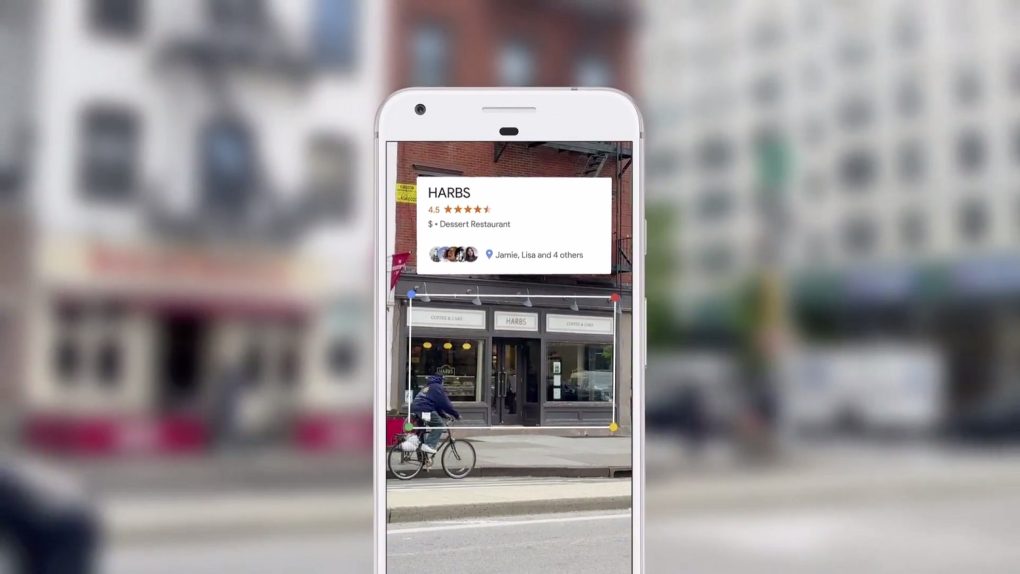

During the keynote address at Google I/O, CEO Sundar Pichai demonstrated how the new feature could be used to identify almost anything. Point the camera at a restaurant and Google Assistant will tell you information about that restaurant. Point the camera at a flower and the Assistant will identify it for you. Point the camera at the Wi-Fi details on the bottom of a router and you’ll be automatically connected to the network. These are just a few of the ways that Google Lens upgrades the Assistant.

With Google Lens, your smartphone camera won’t just see what you see, but will also understand what you see to help you take action. #io17 pic.twitter.com/viOmWFjqk1

— Google (@Google) May 17, 2017

As The Verge points out, this isn’t the first time that Google has added exciting new functionality to smartphone cameras. With the Google Translate app, users can point their smartphone cameras at text in another language and see a translation on their screen almost instantaneously. Lens appears to use similar technology, but with far more possibilities than translating road signs.

According to Google, Lens will be available first in Google Assistant and Photos, but the company has yet to announce when consumers will actually be able to try the feature out.