Researchers from Google have published a paper describing MusicLM, a model that generates high-fidelity music from text descriptions. According to AI scientist Keunwoo Choi, the model's overall structure builds on existing models, combining MuLan + AudioLM and MuLan + w2v-BERT + SoundStream.

Choi explains a bit about how each of these models works:

- MuLan is a joint text-music embedding model trained contrastively on 44 million music audio-text description pairs from YouTube;

- AudioLM uses an intermediate layer of a speech-pretrained model to capture semantic information;

- w2v-BERT is a BERT-style (Bidirectional Encoder Representations from Transformers) self-supervised model originally developed for speech, used here for music audio;

- SoundStream is a neural audio codec.
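Contrastive training, as mentioned for MuLan, pulls the embeddings of matching audio and text together while pushing mismatched pairs apart. The sketch below is a generic symmetric InfoNCE-style loss in plain Python, assuming each batch row holds one audio embedding and its matching text embedding. It is not MuLan's actual code; the function names and the `temperature` value are illustrative assumptions.

```python
import math


def l2_normalize(v):
    """Scale a (non-zero) vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def contrastive_loss(audio_embs, text_embs, temperature=0.1):
    """Symmetric InfoNCE-style loss: audio clip i should match text description i.

    audio_embs, text_embs: equal-length lists of vectors, one matching pair per index.
    temperature: hypothetical value chosen only for illustration.
    """
    a = [l2_normalize(v) for v in audio_embs]
    t = [l2_normalize(v) for v in text_embs]
    n = len(a)
    # Similarity matrix: logits[i][j] compares audio i with text j.
    logits = [
        [sum(x * y for x, y in zip(a[i], t[j])) / temperature for j in range(n)]
        for i in range(n)
    ]

    def cross_entropy(row, target):
        # Negative log-softmax of the target entry, with max-subtraction for stability.
        m = max(row)
        log_sum = m + math.log(sum(math.exp(x - m) for x in row))
        return log_sum - row[target]

    # Audio-to-text: each row should put its highest score on the diagonal.
    a2t = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text-to-audio: the same check, applied to the columns.
    t2a = sum(cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)) / n
    return (a2t + t2a) / 2
```

With hand-written vectors, correctly paired inputs give a loss near zero, while swapping the text descriptions gives a large loss. In a real system the embeddings would come from trained audio and text encoders, and this loss would be minimized by gradient descent.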

Google combined all of this to generate music from text. Here’s how the researchers explain MusicLM:

> We introduce MusicLM, a model generating high-fidelity music from text descriptions such as ‘a calming violin melody backed by a distorted guitar riff’. MusicLM casts the process of conditional music generation as a hierarchical sequence-to-sequence modeling task, and it generates music at 24 kHz that remains consistent over several minutes. Our experiments show that MusicLM outperforms previous systems both in audio quality and adherence to the text description. Moreover, we demonstrate that MusicLM can be conditioned on both text and a melody in that it can transform whistled and hummed melodies according to the style described in a text caption. To support future research, we publicly release MusicCaps, a dataset composed of 5.5k music-text pairs, with rich text descriptions provided by human experts.
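The "hierarchical sequence-to-sequence" framing can be pictured as a chain of autoregressive stages, each conditioned on the output of the stage before it. The sketch below only illustrates that data flow: `toy_next_token` is a hypothetical deterministic stand-in for a trained Transformer, the token rates (25 Hz semantic, 50 Hz acoustic) and vocabulary size are assumed values, and the final "decoder" just returns silence of the right length. Mapped loosely onto the components listed earlier, the conditioning tokens would come from MuLan, the semantic tokens from w2v-BERT, and the acoustic tokens from SoundStream.

```python
SAMPLE_RATE_HZ = 24_000  # output rate stated in the MusicLM abstract


def toy_next_token(context, vocab_size):
    """Hypothetical stand-in for a trained model: a deterministic function of the
    whole context, so each new token depends on everything generated before it."""
    return (sum(context) * 31 + len(context)) % vocab_size


def generate(context, num_tokens, vocab_size):
    """Autoregressive loop: predict one token at a time and append it."""
    out = []
    for _ in range(num_tokens):
        out.append(toy_next_token(context + out, vocab_size))
    return out


def text_to_music(text_tokens, seconds, semantic_rate_hz=25, acoustic_rate_hz=50,
                  vocab_size=1024):
    """Toy hierarchical pipeline; all rates and sizes are illustrative assumptions."""
    # Stage 1: conditioning tokens -> coarse "semantic" tokens (long-term structure).
    semantic = generate(text_tokens, seconds * semantic_rate_hz, vocab_size)
    # Stage 2: conditioning + semantic tokens -> finer "acoustic" tokens.
    acoustic = generate(text_tokens + semantic, seconds * acoustic_rate_hz, vocab_size)
    # Stage 3: a codec decoder would turn acoustic tokens into audio samples;
    # this placeholder returns silence with the correct number of samples.
    samples_per_frame = SAMPLE_RATE_HZ // acoustic_rate_hz
    waveform = [0.0] * (len(acoustic) * samples_per_frame)
    return semantic, acoustic, waveform


semantic, acoustic, waveform = text_to_music([3, 14, 15], seconds=2)
print(len(semantic), len(acoustic), len(waveform))
```

For a 2-second clip under these assumed rates, the pipeline produces 50 semantic tokens, 100 acoustic tokens, and 48,000 samples (2 s × 24 kHz). Splitting generation into a coarse stage and a fine stage is what lets the slower semantic sequence carry long-range structure while the denser acoustic sequence handles audio detail.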

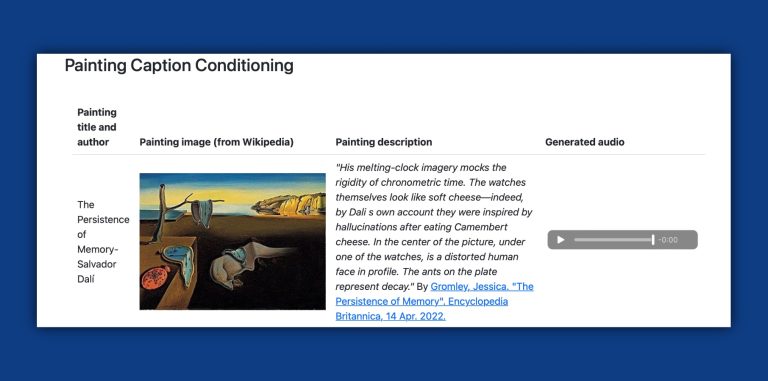

By comparison, it’s interesting to consider what ChatGPT has been able to do: pass tough exams, analyze complex code, draft bills for Congress, and even write poems and song lyrics. MusicLM goes a step further, turning an intention, a story, or even a painting into a song. Hearing Salvador Dalí’s *The Persistence of Memory* transformed into a melody is fascinating.

Alongside MusicLM, Google has made more than 5,000 music-text pairs (the MusicCaps dataset) available for people to experiment with. Unfortunately, the company doesn’t plan to release the model itself to the public. That said, you can still look at, and listen to, examples of how this AI model generates music from text here.