After last year’s I/O 2024, which, much like yesterday’s I/O 2025 keynote, was dedicated to new Gemini AI features, I wondered who had won the AI duel we had just witnessed. Last year, OpenAI brilliantly ambushed Google with a massive ChatGPT event hosted a day before the I/O keynote.

OpenAI beat Google to the punch, giving ChatGPT multimodal features and a voice mode before Google unveiled similar features for Gemini. At the same time, I said last year that the I/O 2024 keynote felt like a tour de force from Google when it comes to Gemini AI abilities. There really was nothing else that mattered at last year’s event other than Gemini.

A ChatGPT event did not precede this year’s I/O 2025. There’s no battle for the limelight between ChatGPT and Google. But given what we saw on Tuesday from Sundar Pichai & Co., I don’t know what OpenAI could have launched before Google’s event to make us ignore all the Gemini-powered AI novelties that Google presented.

I/O 2025 was an even bigger display of force than last year. Gemini is even more powerful and faster than before. It’s also getting deeper integration in Google’s apps and services, making it a more useful tool for anyone excited about AI, even a little bit.

The best part is that Google unveiled a bunch of exciting AI features that have no equivalents from OpenAI. I should know. Not only have I been covering ChatGPT and AI news in general for a while now, but ChatGPT is also my default AI right now. And I envy several Gemini novelties that OpenAI can’t possibly match.

Some of them are obvious: I’m referring to the ties between Gemini and Google’s own apps and services, something OpenAI can’t replicate. And some of the Gemini features that Google unveiled are not ready for a wide commercial rollout. That doesn’t change the fact that Google is taking a big lead over ChatGPT, and I can’t help but wonder how OpenAI will respond.

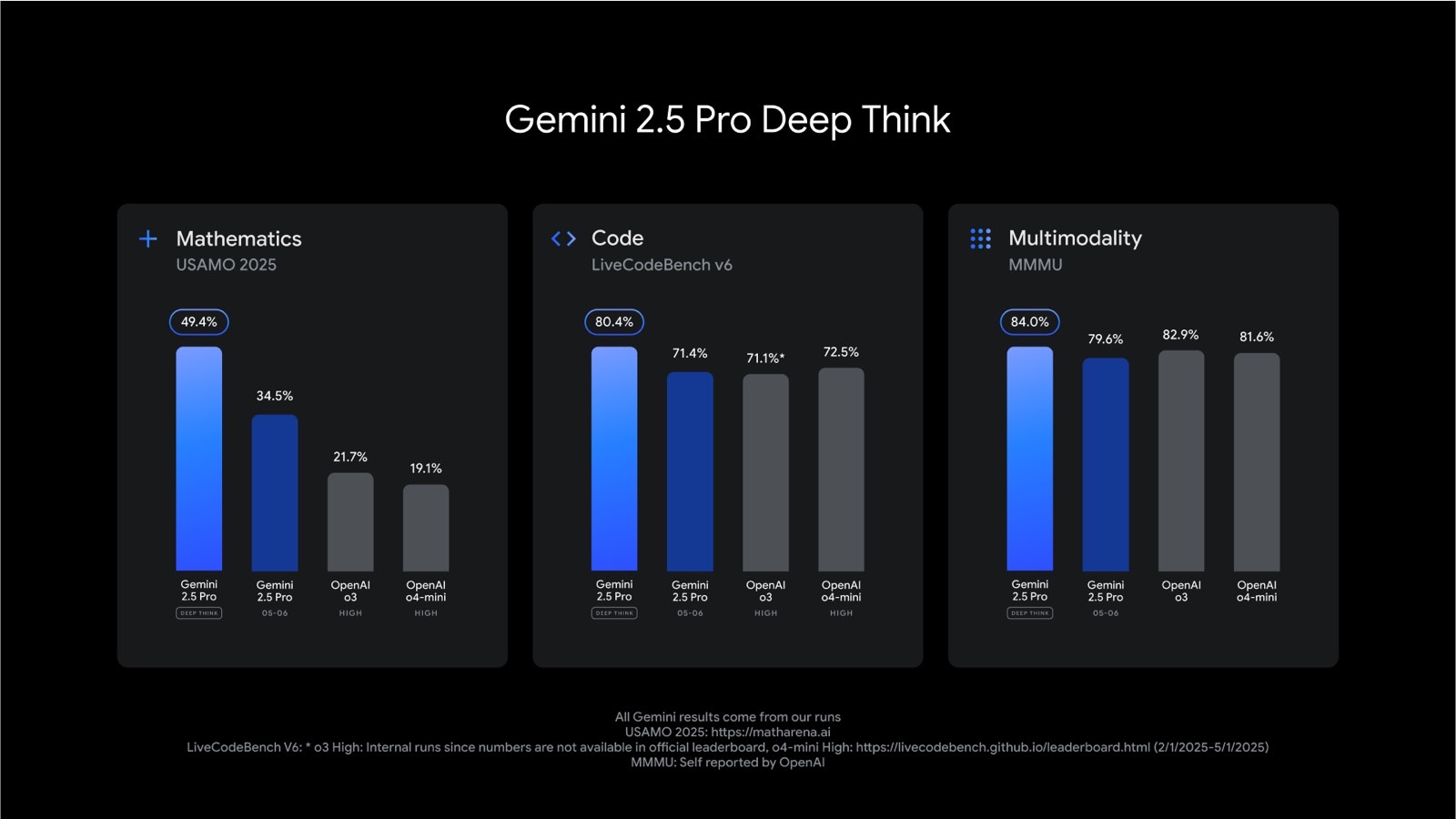

Deep Think

We’ve had Deep Research support in ChatGPT and Gemini for months, and I love the functionality. The AI can deliver detailed reports about any topic by performing in-depth research.

Google is ready to go a step further with Gemini Deep Think, an enhanced reasoning mode that will give Gemini the ability to provide even better responses.

Deep Think is still undergoing safety evaluations, and it’s not available to most Gemini users.

AI agents everywhere

I didn’t like Project Mariner when it came out in December, and I certainly favored OpenAI’s Operator over Google’s implementation. However, Google has improved Project Mariner significantly, and the AI agent has received massive powers, at least according to what we saw yesterday.

The best part is that Google will ship AI agents in various products. For example, Google Search AI Mode will be able to monitor the web for price changes on a product you want to buy and then let you purchase it.

Over in Gemini Live, AI agents will let Gemini call businesses on your behalf while the AI continues to engage with you, and place online orders for goods. The AI can also browse the web to find the information you need, scroll through documents, and see the world around you while interacting with you via voice.

Gemini Live

Gemini Live is growing into the kind of AI assistant we’re used to seeing in the movies. Watch the demo below that Google offered at I/O to get an idea of what Gemini Live will do for the user once Google is ready to launch these new Project Astra features.

It’s not just agentic behavior, like making calls on your behalf or buying products. The AI can grab information from other Google apps, is aware of the user’s history, and can handle multiple people talking in the room without losing track of the task at hand.

Most of these capabilities aren’t rolling out to Gemini Live yet, but they’re above and beyond what OpenAI can do with ChatGPT. OpenAI also wants ChatGPT to become your assistant and know everything about you to provide better responses. But the company can’t integrate ChatGPT with other apps that provide that data about you the way Google does.

I already said I’m envious of the new Gemini Live capabilities, and I wonder if and when OpenAI will release a similar product.

While you wait for Gemini Live to get all the new features, you can use the camera and screen-sharing abilities for free right now on Android and iPhone.

Shopping with AI

When OpenAI unveiled Operator, it showed the world what the AI agent coming to ChatGPT would be able to do in real-life situations. That involved looking for things to buy on the user’s behalf, whether that meant purchasing an actual product or making a reservation.

Half a year later, Operator remains available only to ChatGPT Pro users, and I can’t justify the $200/month tier right now.

Meanwhile, Google has brought an AI agent to Google Search and packed it into AI Mode. The Gemini-powered AI Mode will let you find things to buy even if you use conversational language rather than a specific prompt. ChatGPT can do that, too.

But AI Mode will have a “Buy for me” feature that lets you instruct the AI to monitor a product’s price. The AI will notify you when the price drops and offer to buy it for you. ChatGPT can’t do any of that.

Then there’s the “Try it on” feature that’s simply mind-blowing and the best use of AI in products like online search yet. Find a clothing item you might like, upload a recent photo of yourself, and a special AI model will determine how you’ll look wearing those clothes.

AI Mode will also let you find and buy event tickets, make restaurant reservations, and schedule other appointments. ChatGPT can’t do any of that for you.

Personalization

Sure, there are privacy implications for using AI Mode features like the ones above. And I’m not a big fan of handing over any of my personal data or access to it to the AI. But I’ll have to do that once I’m ready to embrace an AI model as an assistant that knows everything about me and can access my data, whether it’s email and chat conversations, documents, or payment info.

Other people will not feel that way, and if they have no problem with the AI accessing data from other apps, they’ll want to see what Google is doing with Gemini.

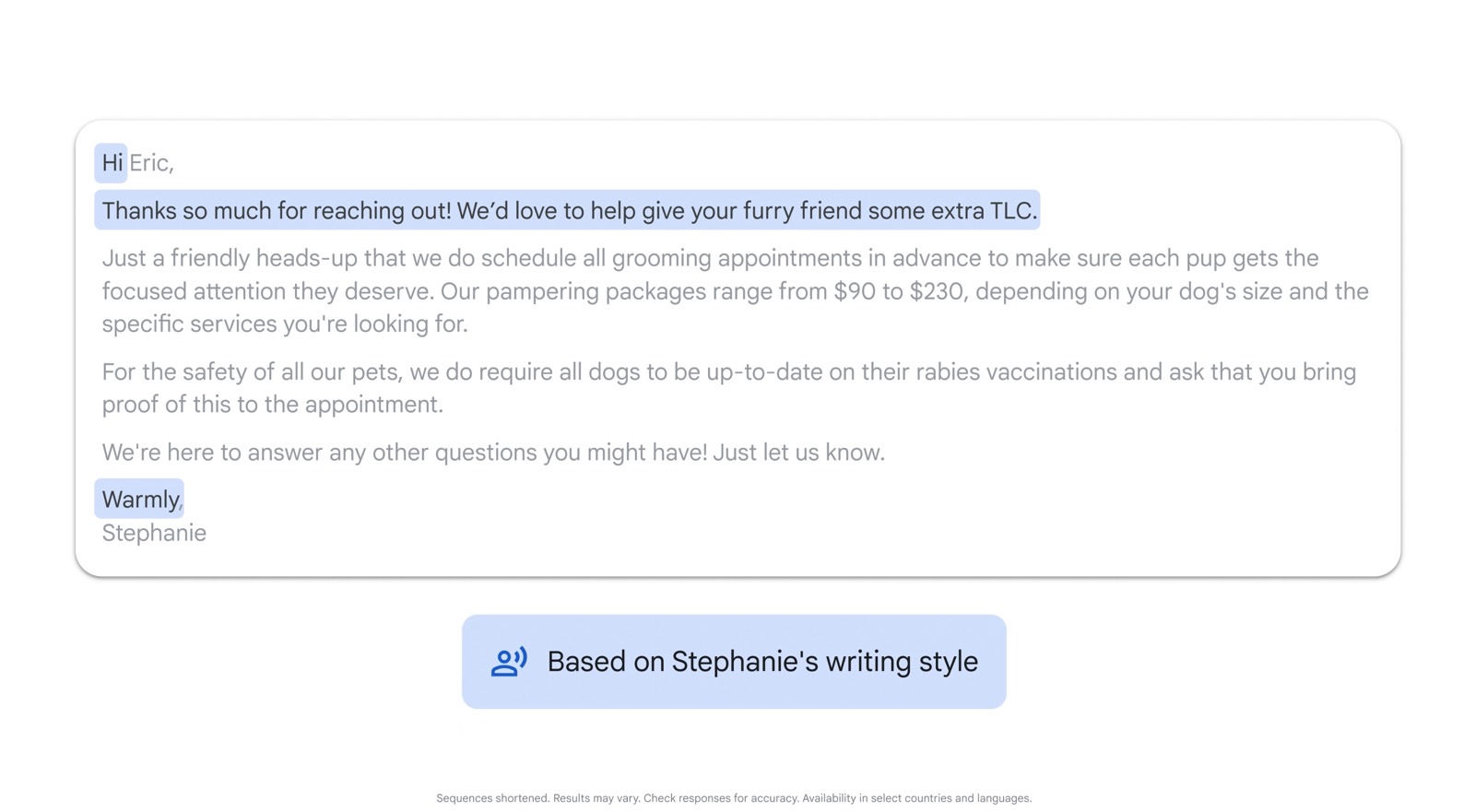

One of the best demos at I/O was Gemini writing an email in Gmail using the user’s tone and surfacing the right information from other Google apps, whether documents or photos.

Similarly, Gemini Live could access Gmail data in the example above to find the information the user needed, and it remembered the user’s dog for an internet search.

Even AI Mode can offer personalized suggestions based on your past searches if you desire. It can also connect to Gmail for more personal context.

ChatGPT can do none of that because it doesn’t have its own suite of complementary apps. And connecting to third-party apps might be more difficult.

Flow

OpenAI lets you generate video with Sora, and you can start directly from ChatGPT, but Google’s Flow is undoubtedly one of the big highlights of I/O 2025 and probably a step above Sora.

Flow lets you generate amazing videos with audio. They offer character and scene consistency, and you can continue to edit your project outside of the AI program.

Of all the features announced at I/O 2025, Flow might be the easiest for OpenAI to match.

Real-time translation

Google isn’t the first to offer AI-powered real-time translation. The feature has been one of the staples of Galaxy AI, with Samsung improving it over the years. ChatGPT can also understand and translate languages for you.

But Google is bringing Gemini-powered real-time translation to video-chatting apps like Google Meet. That’s something ChatGPT can’t do.

The feature is even better on Gemini-powered hardware, like the upcoming wave of Android XR devices.

The Gemini hardware

This brings me to the first devices developed with Gemini at the core: Google’s AR/AI and AI-only smart glasses. I/O 2025 finally gave us the public demos we were missing. There were glitches, and internet connectivity definitely impacted performance, but the demos showed these AI wearables work.

We’ll see Android XR glasses in stores later this year, and they’ll be the perfect device for using AI. Yes, Meta has its Ray-Ban Meta smart glasses that do the same thing with Meta AI. But everything I said about Google’s new Gemini powers makes the Android XR glasses even more exciting, at least in theory.

OpenAI can’t match that. There are no first-party smart glasses that run ChatGPT natively. I’m sure such a product will come from Jony Ive and Co. in the coming years, and the ChatGPT hardware will be worth it. But Google is getting there first, and it’s certainly turning heads in the process.