Following the rapid advancements in AI tech like ChatGPT, it’s only a matter of time before the leading AI firms come up with AGI, or artificial general intelligence. By definition, AGI is AI that’s as good as a human at tackling any task, with the obvious advantage that AI would have access to massive amounts of information. ASI, or artificial superintelligence, is the step after that.

AGI might not be a perfectly objective term, but at least we supposedly know what to expect from it. With superhuman intelligence, all bets are off. This is the kind of AI that is so smart that it can self-improve. It might invent new technologies that improve human life in ways previously unseen just as easily as it might try to destroy humans. It’s unclear how long it’ll take to reach ASI, but it’s certainly happening.

As a longtime ChatGPT user, I don’t want a world where AI isn’t there to assist me. I don’t want to imagine a future where even better AI will not be there to improve the way I work and function, even if AI will eventually steal my job. Put differently, I don’t think AI development should stop. We have to get to AGI and then ASI. And yes, we need to do it safely. By we, I mean the entire human species, not any specific country.

I say that in response to a new survey, shared by Time, which found that the vast majority of Brits want tougher AI regulation, including a ban on smarter-than-human AI.

According to the poll, 87% of Brits would back a law requiring AI developers to prove their systems are safe before release. There’s nothing wrong with that. We should want safe AI products. AI has to be aligned with humanity’s interests so that it protects humanity rather than destroying it.

But banning the development of smarter-than-human AI, as 60% of respondents want, isn’t just wishful thinking — it’s a futile endeavor. What’s the point of even going through the motions of having ASI banned in the UK when the rest of the world will develop it?

The poll was conducted by YouGov on behalf of the non-profit Control AI, which focuses on AI risks.

Say the US, UK, EU, China, and other markets agree on banning ASI development. Who will stop the covert operations that all these countries will surely fund to reach ASI? Who will stop regular developers with access to AI from creating ASI by accident, or from doing it quietly without publishing any breakthroughs? Not to mention, there’s always the risk of ASI eluding detection, just like in the movies.

If it helps, compare the state of AI with the early days of the atomic bomb. It’s a scientific challenge for the people capable of developing such technologies. The nuclear bomb came out of an arms race, but we would have gotten to it eventually. By “we,” I again mean humanity.

We would have tested and used it before agreeing that it could destroy us. Having reached that consensus, humanity has largely agreed to “ban” the use of atomic weapons. I put it in quotes because the theoretical threat is still there. We haven’t stopped making nuclear weapons.

Regulating AI will be even harder because anyone can develop good AI in their basement. Just this week, we saw a paper in which researchers spent less than $50 using existing AI programs to train a new AI model that performs on par with OpenAI’s o1 reasoning model.

A week earlier, the DeepSeek R1 model stunned the world and tanked the stock market thanks to the software innovations that helped a Chinese AI lab develop amazing AI despite an ongoing US ban on sales of advanced AI chips to China.

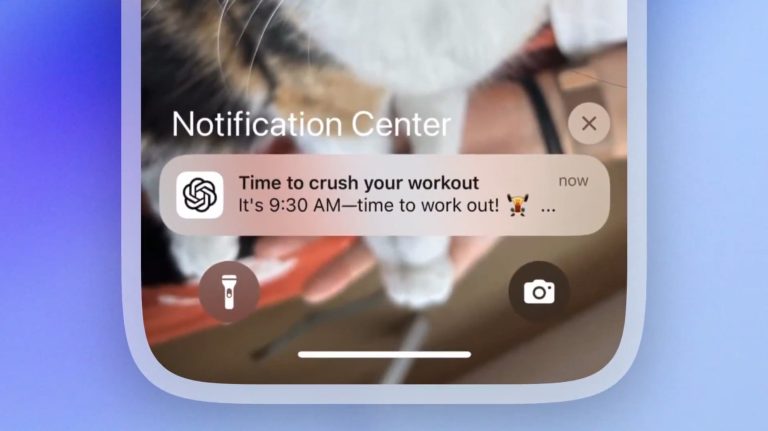

Any country that overregulates AI, for example by banning smarter-than-human AI models, would be shooting itself in the foot. The EU currently has the toughest AI regulation, and it’s a good example of that. I say that as a European who has to wait longer than users elsewhere for the latest ChatGPT and Gemini features. AI firms have to make sure they respect local laws. But that doesn’t mean OpenAI and Google are stopping their quest to reach AGI and ASI.

Meanwhile, the Trump administration announced Stargate, a massive $500 billion AI infrastructure investment. The initiative could very well result in the creation of superhuman AI.

Brits who fear the prospect of ASI should consider all that, even if their worries stem from the fact that non-superhuman AI is stealing some of their jobs.

The same survey says that just 9% of respondents trust tech CEOs to act in the public interest when discussing AI regulation. That figure shouldn’t be surprising, especially right now. World leaders and top execs from AI firms will gather in Paris next week to discuss the development of AI, and regulation will certainly be a topic.

I don’t trust the tech CEOs either, but that doesn’t mean I don’t want better AI features from ChatGPT and other models. I want laws that ensure AI development is safe, but I don’t want anything banned.

The YouGov survey also says that 75% of Brits want laws that explicitly ban AIs that can escape their environments. That’s another good stance. But if we reach a point where ASI is developed, no amount of legislation will prevent it from trying to escape.

While the respondents to this YouGov poll are clearly worried about AI developments, the UK government isn’t as keen on moving forward with tougher AI regulation. Time notes that the ruling Labour Party had pledged to introduce tougher laws before the 2024 general election. But now that it’s in power, it’s also aware of the importance of AI for the economy. If anything, British Prime Minister Keir Starmer now favors using AI to drive growth.

A statement signed by 16 British lawmakers from both major parties accompanies the poll. They want AI regulation targeting superintelligence.

“Specialised AIs – such as those advancing science and medicine – boost growth, innovation, and public services. Superintelligent AI systems would [by contrast] compromise national and global security,” the statement reads. “The UK can secure the benefits and mitigate the risks of AI by delivering on its promise to introduce binding regulation on the most powerful AI systems.”

I’m afraid we won’t get to AI-led science and medicine breakthroughs without reaching AGI and ASI first.

Then again, the British people can decide for themselves on any issue if need be. Just look at Brexit and how great it all turned out. I don’t think tough AI regulation would really let them Brexit AI.