We use our phones’ cameras not just to save memories of special events but also to grab and preserve information we need from real life. The camera is excellent at capturing document scans, and we routinely point it at anything with written information we might need later: phone numbers, email addresses, or general details. Sadly, there has been no built-in way on iPhone and iPad to grab that information and convert it into digital text right after snapping the photo. Third-party apps with text recognition support can do that, and so can Google Lens.

iOS 15 fixes all that with Live Text, one of the most exciting features built into the next versions of iOS and iPadOS. Live Text is also coming to macOS Monterey this year. The feature automatically detects text in images, lets you pick it up, and can even translate it when needed. But not every iPhone, iPad, and Mac that gets the latest operating system versions will get Live Text and the Live Text translation feature.

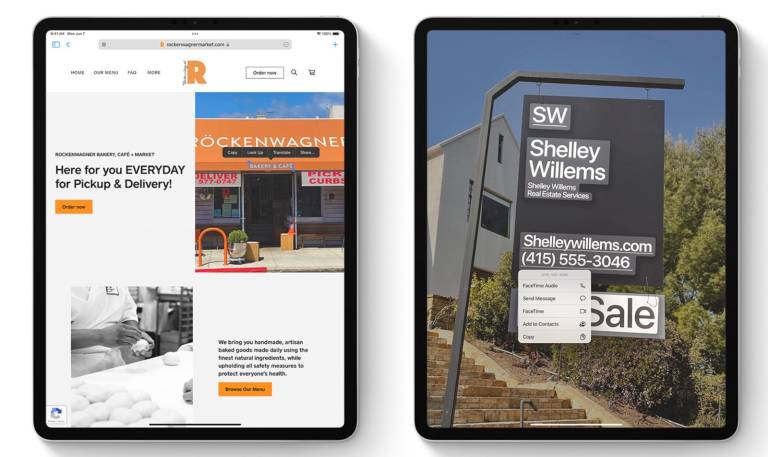

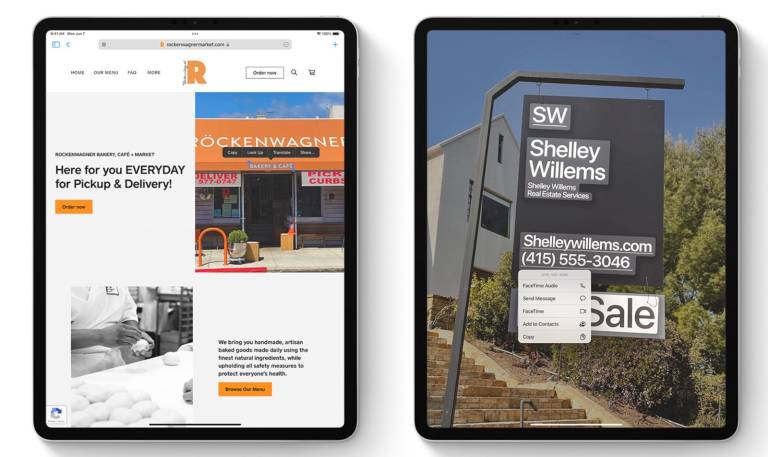

Open the Camera app in iOS 15, point the iPhone at something with text in it, and a small icon will appear in the bottom right corner of the screen, signaling that it has recognized the text. Tap it, and you’ll be able to tap the text in the image and interact with it. You can tap a phone number to call it or an email address to start an email. You can also copy the text and paste it into a different app, look up words, and translate everything.

Live Text translation supports seven languages: English, Chinese, French, Italian, German, Portuguese, and Spanish. iOS 15 and iPadOS 15 also support system-wide translation.

Live Text works in Photos, Screenshot, Quick Look, Safari, and live previews in Camera, MacRumors reports.

As amazing as this Google Lens alternative is, now that it’s available by default in Apple’s core apps on iPhone, iPad, and macOS Monterey, there is one important caveat to remember. Live Text needs Apple’s Neural Engine to work, and Apple has specific hardware requirements for it.

iOS 15, iPadOS 15, and macOS Monterey will run on a large number of devices, including the six-year-old iPhone 6s, but not all of them have what it takes to run Live Text and Live Text translation. You’ll need an iPhone or iPad with an A12 Bionic chip or later, or an M1-powered Mac, to take advantage of this new functionality.

Devices like the iPhone X and earlier handsets will not have Live Text enabled. The majority of Macs won’t support it either, as they run on Intel chips. On the tablet side of things, you’ll need at least iPad Air (3rd-gen), iPad (8th-gen), or iPad mini (5th-gen) to get Live Text running on iPadOS 15.
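For readers who want the rule spelled out, here is a minimal sketch of the A12-or-later check for a handful of iPhones and iPads. The table and the function name `supports_live_text` are illustrative assumptions, not an Apple API, and the list covers only a few devices (Macs, which need an M1 chip, are left out).

```python
# A-series chip generation for a few example devices.
# This table is illustrative and far from complete; it is not an Apple API.
A_SERIES_GENERATION = {
    "iPhone 6s": 9,
    "iPhone X": 11,
    "iPhone XS": 12,
    "iPad Air (3rd-gen)": 12,
    "iPad (8th-gen)": 12,
    "iPad mini (5th-gen)": 12,
}

# Live Text needs an A12 Bionic chip or later, per the requirement above.
MIN_GENERATION = 12


def supports_live_text(device: str) -> bool:
    """Return True if the listed device has an A12 chip or newer."""
    generation = A_SERIES_GENERATION.get(device)
    if generation is None:
        raise KeyError(f"unknown device: {device}")
    return generation >= MIN_GENERATION


if __name__ == "__main__":
    for name in A_SERIES_GENERATION:
        status = "supported" if supports_live_text(name) else "not supported"
        print(f"{name}: Live Text {status}")
```

In this sketch the iPhone 6s and iPhone X can install iOS 15 but fall below the A12 cutoff, while the three iPads named above meet it.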