When Apple showed off its Vision Pro headset during Monday’s WWDC keynote, a lot of important information went unsaid. That is understandable, since the Vision Pro won’t arrive until early 2024 at the earliest. As a result, Apple’s unveiling focused on showcasing the device’s capabilities rather than illustrating the full range of ways users can interact with it.

Apple did a great job of showing how Vision Pro users might navigate a page in Safari, for example, but it didn’t demonstrate how users hop from one website to another. In particular, I was curious about how text input works on Vision Pro without a Bluetooth keyboard.

As it happens, Apple addressed the topic in a WWDC developer session titled Design for Spatial Input. The session, which Apple has posted as a video, is naturally geared toward developers. Still, it offers an in-depth look at the many ways users can interact with Vision Pro. The video is worth watching in its entirety, but for now we’ll focus on text input.

Virtual typing on the Vision Pro

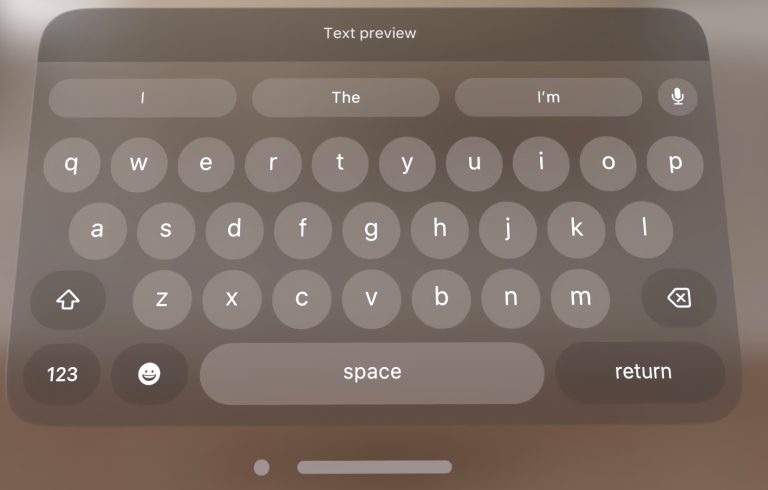

visionOS includes a virtual keyboard that floats in front of the user when called up. Using its array of cameras, the headset detects which keys are being pressed in mid-air. When a finger hovers above a virtual key, that key lights up. Because there is no physical key to feel, the system plays a click for every key press to make up for the missing tactile feedback.

Apple’s engineers explain:

The buttons are actually raised above the platter to invite pushing them directly. While the finger is above the keyboard, buttons display a hover state and a highlight that gets brighter as you approach the button surface. It provides a proximity cue and helps guide the finger to target.

At the moment of contact, the state change is quick and responsive, and is accompanied by a matching spatial sound effect.

These additional layers of feedback are really important to compensate for missing tactile information, and to make direct interactions feel reliable and satisfying.

Aside from the virtual keyboard, visionOS supports other input methods, including voice commands via Siri, scrolling and selection via hand gestures, and, of course, selection via eye tracking. Users can also use a connected Bluetooth keyboard or an iPhone for text input.

Lastly, it’s worth mentioning that early hands-on impressions of the Vision Pro have been overwhelmingly positive.