I don’t know who needs to hear this right now, but absolutely do not include sensitive personal information like usernames and passwords in your ChatGPT prompts. Apparently, more than a year after the launch of ChatGPT, the AI still might leak info to other ChatGPT users.

Even without these leaks, which must be rare, you should never provide sensitive information. Depending on how you’ve set up ChatGPT, your data can be used to train the chatbot. Your data will reach OpenAI’s servers, and you won’t have any control over how it’s used.

We don’t often see reports detailing these issues. But it can happen, and it is a disturbing “feature” for a viral product that’s been in use for over a year.

UPDATE: OpenAI reached out to BGR with the following comment, explaining that the ChatGPT user who reported seeing the “leaked” chats had their account hacked:

Ars Technica published before our fraud and security teams were able to finish their investigation, and their reporting is unfortunately inaccurate. Based on our findings, the users’ account login credentials were compromised and a bad actor then used the account. The chat history and files being displayed are conversations from misuse of this account, and was not a case of ChatGPT showing another users’ history.

While this incident is not what we initially thought, the point about password security still stands: you should protect your passwords, and that includes keeping them away from generative AI software. The initial article follows below.

A ChatGPT Plus user informed Ars Technica about one such incident. I know it’s a ChatGPT Plus experience because the screenshots the user provided show that GPT-4 is in use. That language model is restricted to OpenAI’s premium subscription version. The free ChatGPT version uses GPT-3.5.

GPT-4 is the most capable model OpenAI has offered in ChatGPT to date. So you’d expect it to offer the best experience, especially considering that OpenAI reportedly fixed the chatbot’s problem with laziness.

But the conversations Ars reader Chase Whiteside shared show that’s not the case. It’s unclear how these ChatGPT conversations made it into the history of a different user. That’s just one of the disturbing things this event has revealed:

“I went to make a query (in this case, help coming up with clever names for colors in a palette) and when I returned to access moments later, I noticed the additional conversations,” Whiteside wrote in an email. “They weren’t there when I used ChatGPT just last night (I’m a pretty heavy user). No queries were made—they just appeared in my history, and most certainly aren’t from me (and I don’t think they’re from the same user either).”

The other big problem this incident highlights is what those prompts from unknown users contained: confidential information like usernames and passwords that the original users had typed into ChatGPT.

Whether you pay for ChatGPT Plus or use the free chatbot, this “feature” is certainly not what we want to see from such a product. It’s a huge privacy problem that OpenAI has to fix as quickly as possible. It’s unclear why it happened, but the screenshots that Ars posted support the user’s claims.

If someone else’s chats appear in your history, then your ChatGPT chats might appear in someone else’s. And you’d have no way of knowing it happened.

How to improve your ChatGPT privacy

Regardless of this bug, you should absolutely not give any generative AI product sensitive information like usernames and passwords. Even if they’re not yours. Even if you manage to block your data from training products like ChatGPT.

I’ll remind you that in the early days of ChatGPT, big tech companies like Samsung and Apple banned the product from internal use. They did it to prevent leaks and to keep ChatGPT from being trained on that data.

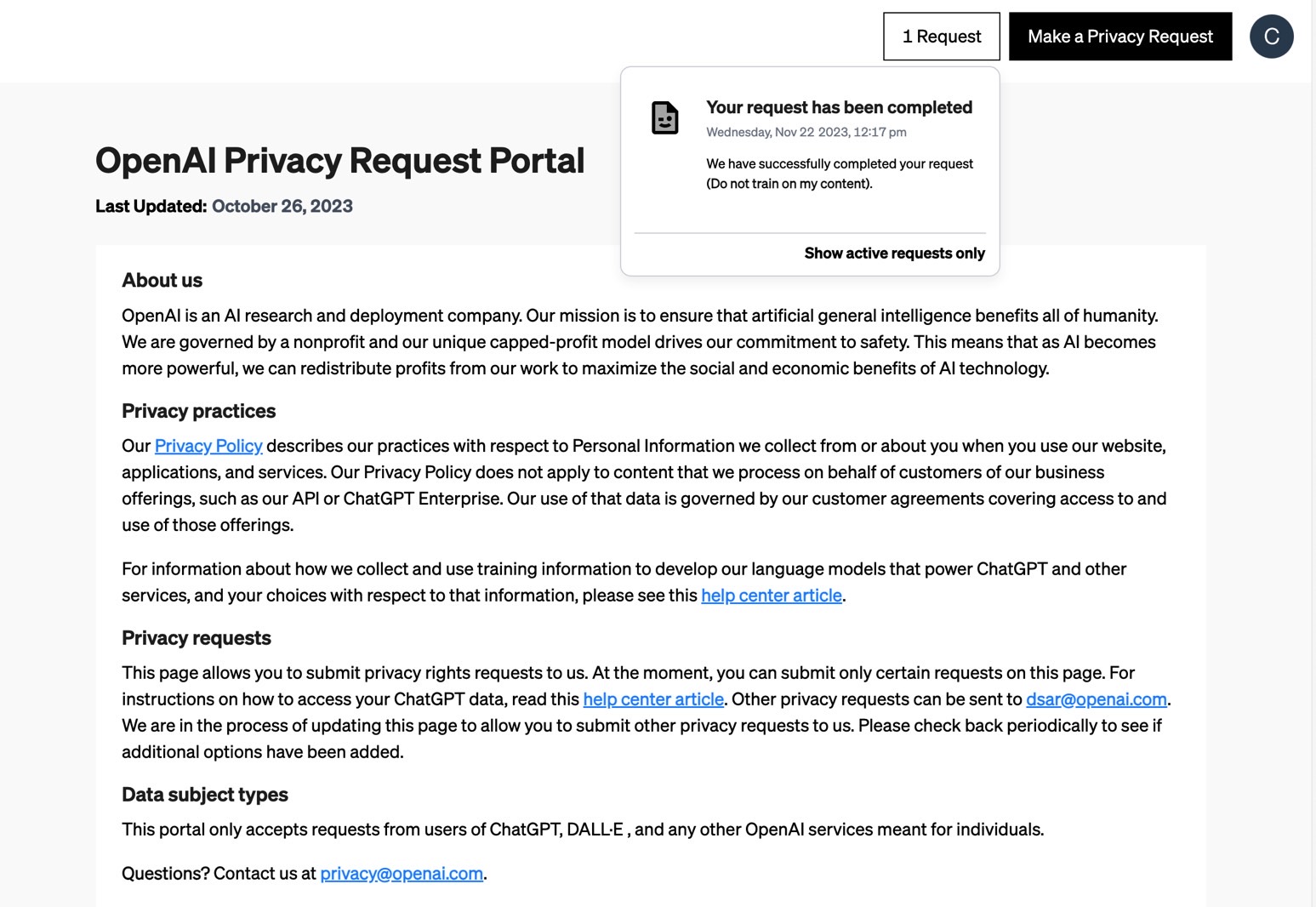

Back then, OpenAI didn’t have any privacy protections. Those came out later, allowing users to stop OpenAI from using their chats to train its models. But opting out will cost you your ChatGPT history if you don’t know how to do it correctly. I found the only way to keep my ChatGPT chats and still prevent OpenAI from collecting all that chat data (image above). It takes only a few minutes to complete.

This privacy feature could prevent unwanted chat leaks like the ones Ars detailed. But even if you’ve opted out of that data collection, you should still avoid using private information in those prompts. Just take a minute to delete passwords from your prompts.
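If you paste config files or scripts into ChatGPT often, a small helper can do that cleanup for you. What follows is only a minimal sketch in Python, not an official tool. The function name `redact_credentials`, the list of labels, and the `[REDACTED]` placeholder are all made up for illustration, and the pattern only catches values written right after a label such as `password:` or `user=`.

```python
import re

# Hypothetical, deliberately small list of labels; real credentials
# show up in many more shapes than this pattern covers.
_CREDENTIAL_PATTERN = re.compile(
    r"\b(username|user|login|password|passwd|pwd)\b(\s*[:=]\s*)(\S+)",
    re.IGNORECASE,
)


def redact_credentials(prompt: str) -> str:
    """Replace the value after a credential-like label with a placeholder."""
    return _CREDENTIAL_PATTERN.sub(
        # Keep the label and separator, drop the secret value itself.
        lambda m: f"{m.group(1)}{m.group(2)}[REDACTED]",
        prompt,
    )


if __name__ == "__main__":
    print(redact_credentials("Why does this fail? username: jdoe password=hunter2"))
```

A helper like this will miss anything phrased in plain language (“my password is hunter2”), so treat it as a safety net and still read your prompt before you hit send.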

OpenAI confirmed that it is investigating the issue, according to Ars.

The headline of this article has been updated to reflect new information provided by OpenAI.