Apple published research last week detailing MGIE, a generative AI photo editor that lets you change an image using text prompts. That’s the kind of feature I’d expect to see in iOS 18, alongside more conventional generative AI photo editing tools, the kind where you interact with an image using your hands. You can already test Apple MGIE without installing anything on your computer.

If that wasn’t enough to prove that Apple is serious about adding generative AI to its operating systems, there’s fresh evidence: Apple has released a new study detailing a different generative AI project.

Apple Keyframer is another generative AI tool driven by text prompts. Unlike MGIE, though, Keyframer turns a still image into an animation using text commands.

Keyframer sounds more complex than MGIE. And, as with MGIE, there’s no guarantee you’ll see Keyframer on the iPhone once iOS 18 is released. But I already have an idea of what Keyframer could be used for: animated stickers in iMessage.

You can already create your own stickers from any image. Just tap and hold on a photo to grab the part you want to turn into a sticker. But what if you could tell Apple’s AI to animate an image and then save the result as an animated sticker? I’m only speculating here, of course. It’s simply the most basic use for Keyframer I can think of as I write this.

The study

Apple’s study, titled “Keyframer: Empowering Animation Design using Large Language Models,” describes more advanced uses for these generative AI capabilities.

Apple actually tested Keyframer with professional animation designers and engineers. The company sees the prototype as a tool professionals could use while working on new animations.

Rather than spending hours iterating on a concept, they could create it from a static image and text prompts, then refine those prompts to get closer to the result they want. After using AI to prototype ideas, they could get to work on the real thing. Or they could simply use the animations Keyframer produces.

In other words, Apple might eventually want to add Keyframer to dedicated photo and video editing software, beyond the iPhone and iOS 18.

How Apple Keyframer works

Keyframer’s approach is fairly simple: it relies on a large language model (LLM), the kind of technology behind ChatGPT. In fact, Apple used OpenAI’s GPT-4 for Keyframer rather than a proprietary Apple model.

Rumors say that Apple is working on its own language models, and I’d expect a commercial version of Keyframer to use those rather than anything from the competition.

Back to Keyframer: the user simply uploaded an SVG image and typed a text prompt. Because SVG is a text-based (XML) vector format, the image itself can be passed to the language model as code, right alongside the prompt. The fact that Apple tested Keyframer with people outside the company would also explain why it chose a publicly available AI model.

The model then generates the code that animates the image. Users can edit that code directly or refine the result with additional prompts.
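The study’s figures don’t spell out what the prompts or generated code look like in practice. As a rough illustration only, here is a minimal Python sketch of the workflow described above, assuming the model returns CSS animation rules that target elements in the SVG by id. The sample SVG, the “model output,” and the helper names `build_prompt` and `apply_animation` are all hypothetical; no model is actually called, and this is not Apple’s implementation.

```python
import re

# Hypothetical inputs for illustration only; neither is taken from Apple's study.
SVG = """<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 100 100">
  <circle id="sun" cx="50" cy="50" r="20" fill="orange"/>
</svg>"""

# Assumed model output for a prompt like "make the sun pulse": CSS keyframes
# that target the element id used in the SVG above.
GENERATED_CSS = """
    #sun {
      animation: pulse 2s ease-in-out infinite;
      transform-box: fill-box;
      transform-origin: center;
    }
    @keyframes pulse {
      0%, 100% { transform: scale(1); }
      50% { transform: scale(1.2); }
    }
"""


def build_prompt(svg: str, request: str) -> str:
    """Put the SVG markup and the user's request into a single text prompt.

    SVG is plain XML text, so a text-only model can read it as-is.
    """
    return (
        "Here is an SVG image:\n"
        f"{svg}\n\n"
        "Write CSS animation code for this SVG. Request: "
        f"{request}"
    )


def apply_animation(svg: str, css: str) -> str:
    """Insert the CSS as a <style> element right after the opening <svg> tag."""
    match = re.search(r"<svg\b[^>]*>", svg)
    if match is None:
        raise ValueError("no <svg> element found")
    style = f"\n  <style>{css}  </style>"
    return svg[: match.end()] + style + svg[match.end():]


prompt = build_prompt(SVG, "make the sun pulse")
animated = apply_animation(SVG, GENERATED_CSS)
print(animated)
```

In a real tool, `GENERATED_CSS` would come from the model’s response to `prompt`, and the user could either edit that CSS by hand or send a follow-up prompt and splice in the new result.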

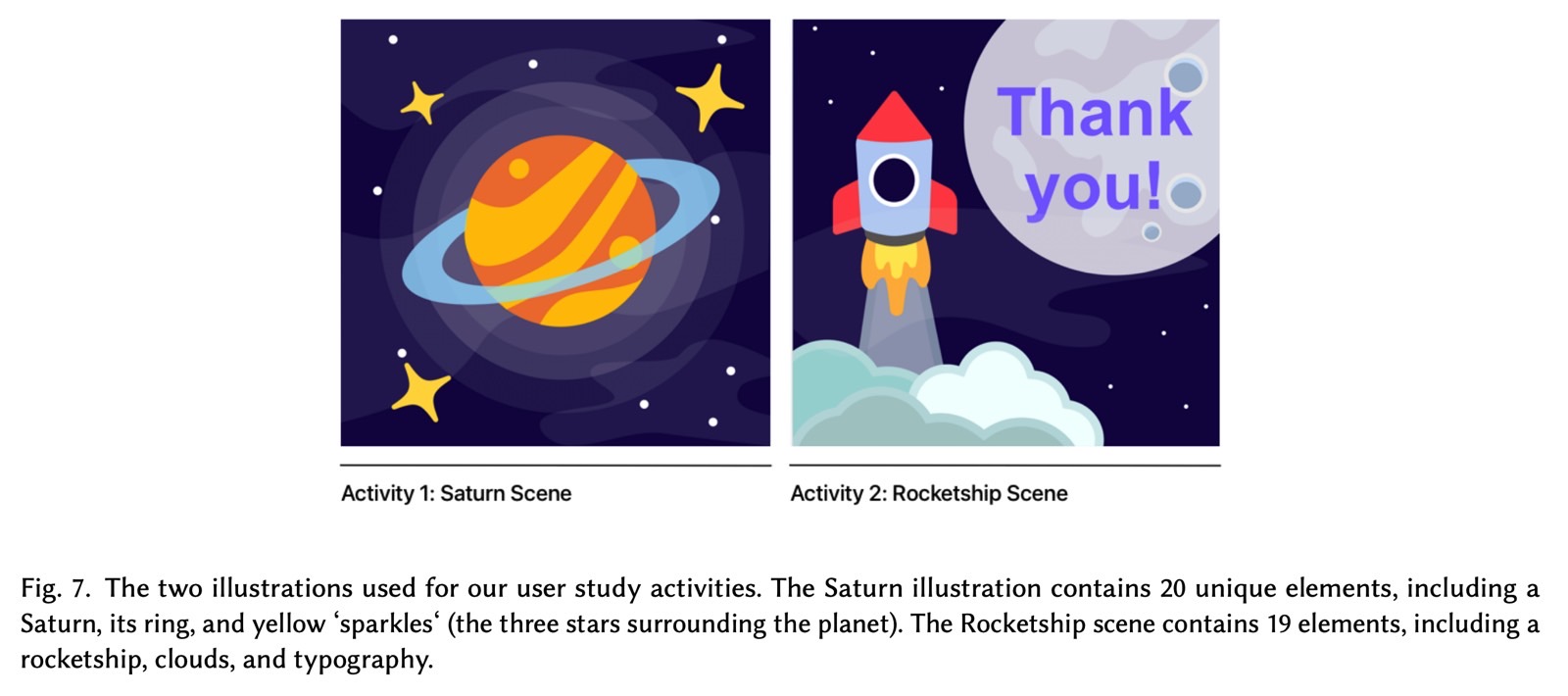

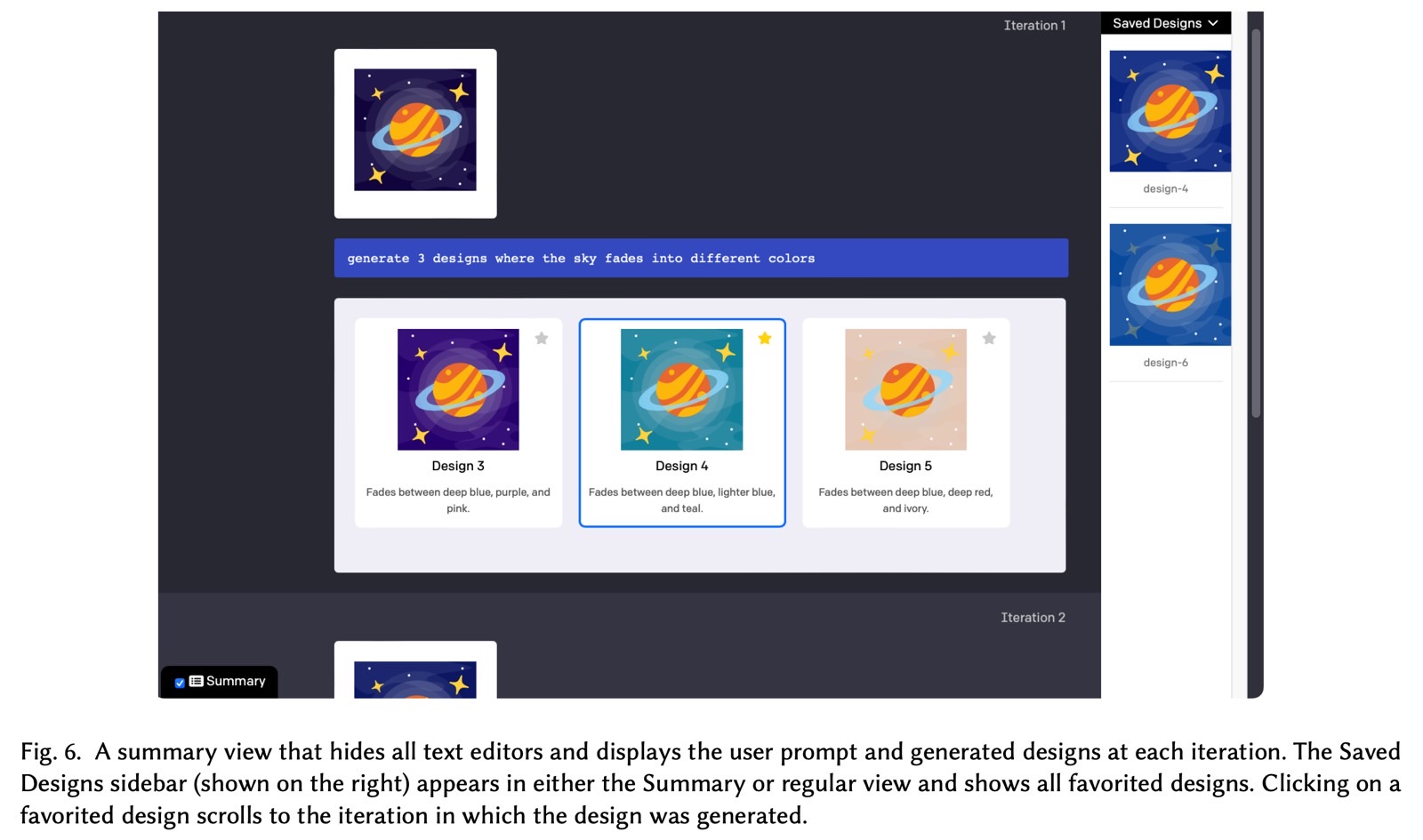

Unfortunately, the study doesn’t include actual animations, but it does include images illustrating how Keyframer uses LLMs to generate and refine animations. One such example appears above.

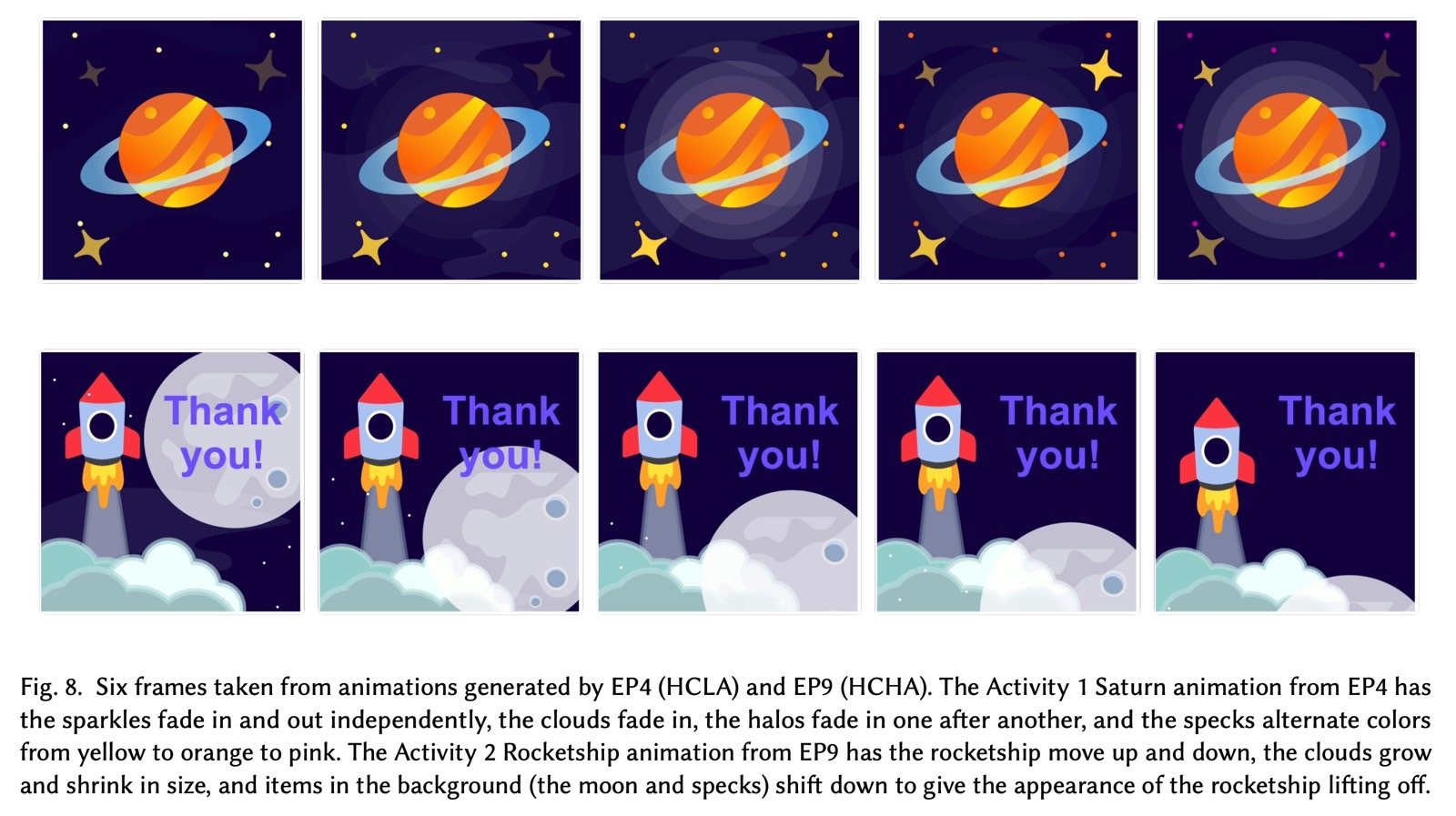

The image below shows another example: descriptions of Keyframer animations from Apple’s test subjects. Again, actual animations would have been far more useful, but this is a printed study, and it can’t include animated content.

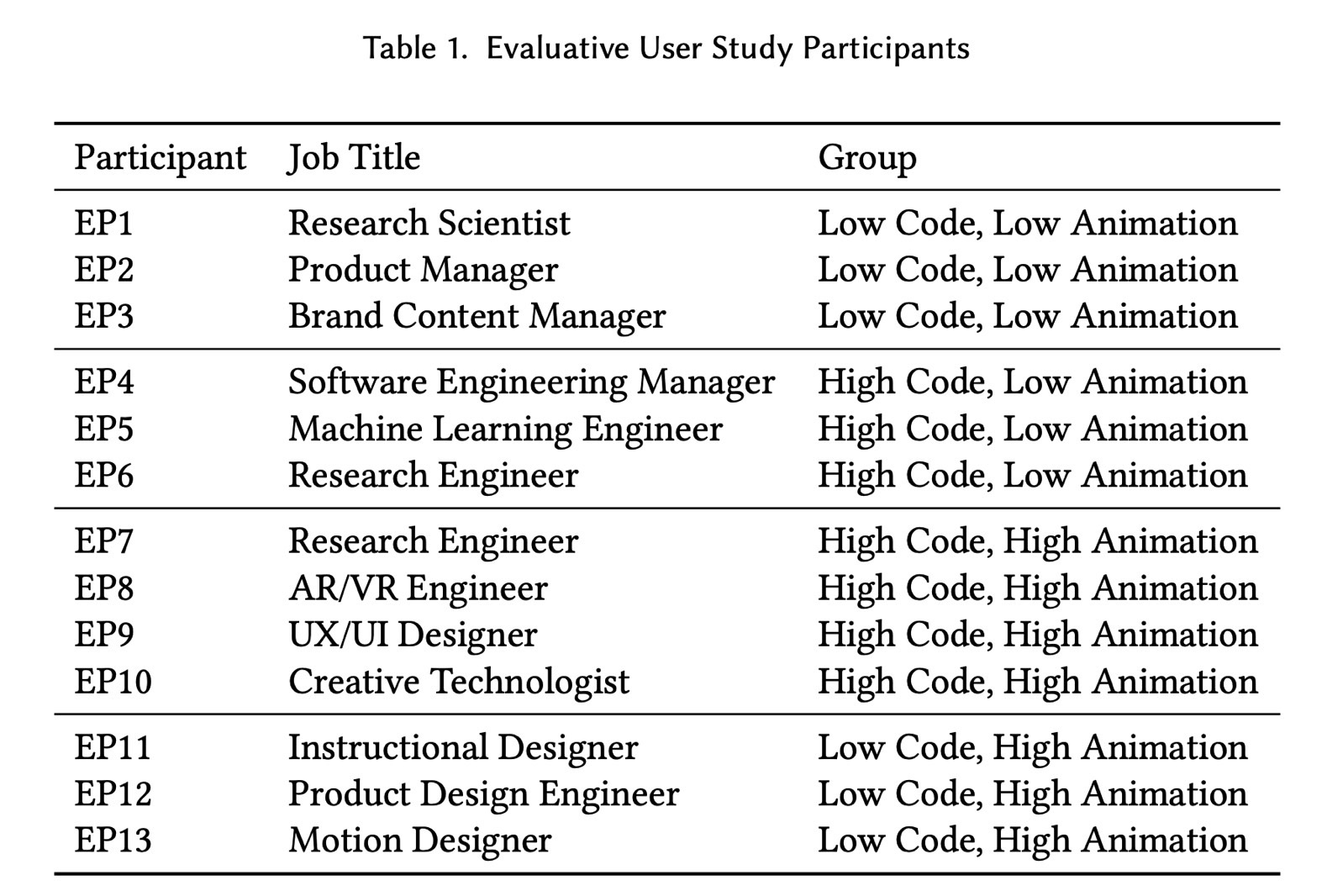

As for the EP4 and EP9 subjects Apple mentions, they’re two of the 13 testers Apple selected. As you can see later in the post, Apple recruited testers with various backgrounds in coding and animation. They all had to animate the same two images.

Will we see it in iOS 18?

Regardless of their background, the participants appreciated the Keyframer experience. “I think this was much faster than a lot of things I’ve done… I think doing something like this before would have just taken hours to do,” said EP2, a participant from the first group. Even the most skilled coder and animator liked the experience.

“Part of me is kind of worried about these tools replacing jobs, because the potential is so high,” EP13 said. “But I think learning about them and using them as an animator, it’s just another tool in our toolbox. It’s only going to improve our skills. It’s really exciting stuff.”

The study details how many lines of code the AI produced for each animation and how the testers worked, such as whether they refined the results and whether they worked on the different animations simultaneously or one at a time. It also examines the participants’ actual prompts and how specific or vague they were.

Apple concluded that LLM-powered tools like Keyframer can help creators prototype ideas at various stages of the design process and iterate on them with natural language prompts, including vague commands like “make it look cool.”

The researchers also noted that “Keyframer users found value in unexpected LLM output that helped spur their creativity. One way we observed this serendipity was with users testing out Keyframer’s feature to request design variants.”

“Through this work, we hope to inspire future animation design tools that combine the powerful generative capabilities of LLMs to expedite design prototyping with dynamic editors that enable creators to maintain creative control in refining and iterating on their designs,” Apple concludes.

Unlike MGIE, Keyframer isn’t available to test in the wild yet. And it’s unclear whether Keyframer is advanced enough to make it into any Apple operating system or software in the near future. Again, this research used GPT-4, and Apple would need to replace it with its own language models.