There are so many interesting things happening in the world of AI right now. For starters, some say that AI tools like ChatGPT are already hurting our critical thinking skills. Others are trying to make AI suffer in an effort to test its sentience. But what you might not expect is how AI responds to the Rorschach test.

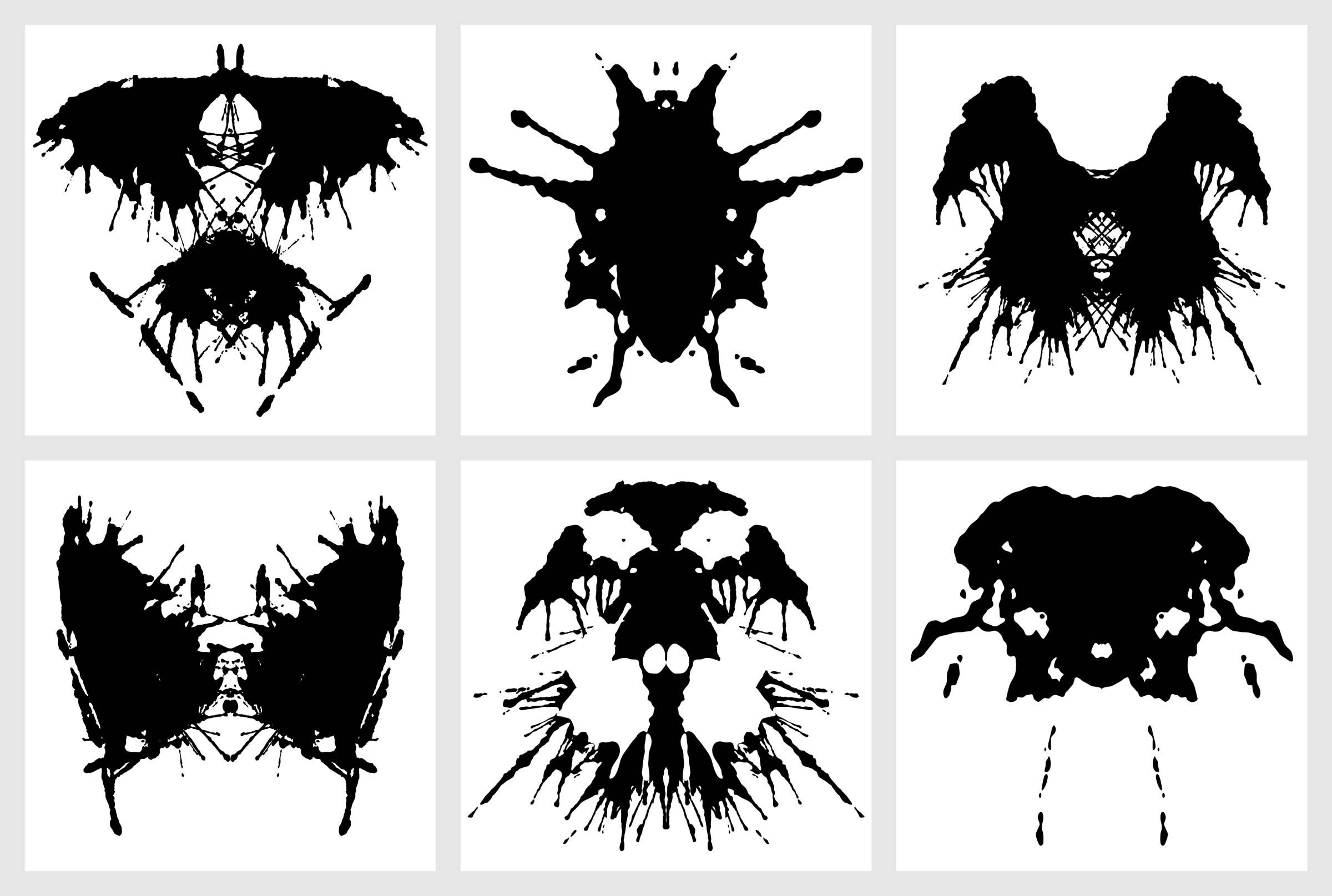

Psychologists have been using the Rorschach test to explore human thought patterns and personality traits for over a century. The premise is simple: you show people ambiguous inkblots and ask them what they see.

The test taps into a phenomenon researchers call pareidolia, the brain's instinct to assign meaning to random shapes. But what happens when an AI, which lacks human emotions and experiences, is given the Rorschach test?

With the rise of multimodal AI models, which can analyze both images and text, some experts grew curious and decided to put AI to the test. Unlike humans, who project emotions, fears, and personal experiences onto inkblots, AI has no unconscious mind.

Instead, the system analyzes shapes, textures, and patterns and then draws on its training data to generate a response. When AI was shown one of the most common Rorschach inkblots, a shape humans often interpret as a bat, butterfly, or moth, its response differed from a typical human one.

At first, it acknowledged the image’s ambiguity, noting that different people may see different things. But when pressed to pick a specific interpretation, the AI settled on a “single entity with wings outstretched,” an answer likely shaped by the human Rorschach responses in its training data.

This highlights a key difference: while humans assign personal meaning to images based on emotions and past experiences, AI mimics collective human interpretations. Researchers argue that the AI does not actually “see” anything in the inkblots the way people do.

As such, its responses are simply a reflection of the data it has processed. A psychologist who compared AI’s responses with human ones noted that, unlike people, AI does not wrestle with inner conflicts, emotions, or subconscious biases. It simply identifies patterns and predicts the most likely answer.

AI is also inconsistent. Shown the same inkblot twice, it may give a completely different interpretation the second time, whereas humans typically give stable answers rooted in their unique perspectives. Ultimately, this experiment underscores a fundamental truth: AI can mimic human perception, but it does not experience the world as we do.

While AI can describe emotions and interpretations, it lacks true imagination, subconscious thoughts, and personal biases, suggesting that, for now, AI remains a reflection of human input rather than an independent thinker.