Why I Can't Wait For Google To Put Selfie Cameras Under The Screen Of Pixel Phones

The iPhone 15 series will get us closer to the dream of an all-screen phone, with a new OLED panel that shrinks the bezels even further. Only the Pro models will get the slimmer bezels, and they'll still feature the Dynamic Island at the top. But Apple is also working toward placing the selfie camera under the screen for a perfect all-screen phone design.

Unsurprisingly, Google is also working on this technology, and we might see Pixel phones with under-display cameras in the near future. And while I'm unlikely to switch from an iPhone to a Pixel phone anytime soon, I'm excited about Google pursuing this upgrade.

The problem with under-display cameras

When Apple and Google deliver their under-screen camera tech, they won't be the first to do so. ZTE shipped an under-display selfie camera on a conventional, non-foldable phone years ago. Samsung then put an Under Display Camera (UDC) on the Galaxy Z Fold, and other vendors have been researching the technology for years.

The problem is that under-display cameras still need further technical breakthroughs before they can go mainstream. There's a reason Samsung doesn't use UDC selfie cams on its Galaxy S phones. On the Fold, the UDC selfie cam is a backup: you can also take selfies with the cover screen's camera or with the rear cameras, so the UDC's weaker image quality matters less.

Placing the camera under several layers of material inevitably hurts picture quality. Light has to pass not just through the cover glass but also through the OLED screen, which has to be made transparent over the camera.

Apple is already working on perfect iPhones

But you don't get perfect transparency, since the section of the OLED screen covering the camera still has to work as a display. Software has to compensate for what the hardware loses in order to produce decent selfies. And selfies are a massive part of the smartphone experience.

Under panel Face ID is now expected to be pushed at least a year to 2025 or later due to sensor issues.

— Ross Young (@DSCCRoss) March 9, 2023

Google Pixel phones aren't necessarily the best iPhone alternatives out there, but Pixels are amazing camera phones. That's why I'm excited to see Google working on under-screen camera tech. Google can deliver the kind of camera innovations others will try to replicate, which could put pressure on Samsung and Apple. That is, if Google's under-screen camera turns out to excel.

It's unclear when Google will launch such a Pixel, but it's not happening with the Pixel 8. Google might even let Apple's iPhone go first, since Google's strategy has been to copy Apple while simultaneously criticizing the iPhone. And rumors say iPhones with under-screen selfie cams aren't too far off.

Google's under-screen selfie cam idea

Google is studying this technology, and Forbes found a patent application titled "System And Apparatus Of Under-Display Camera."

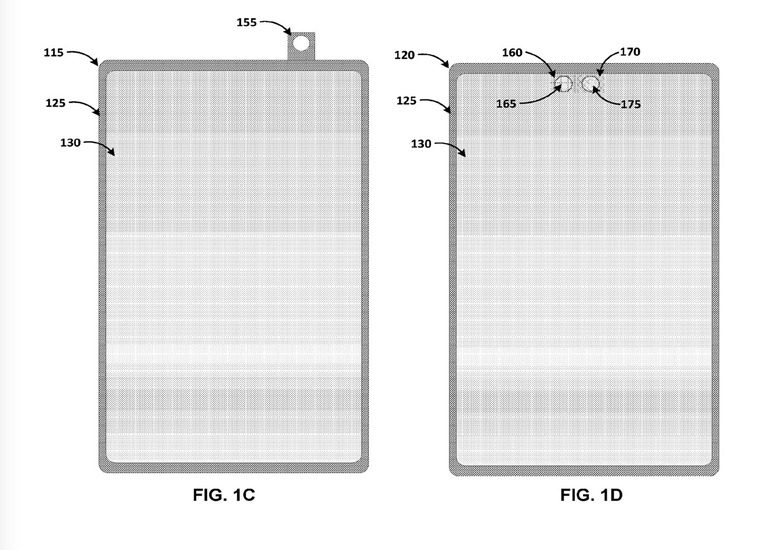

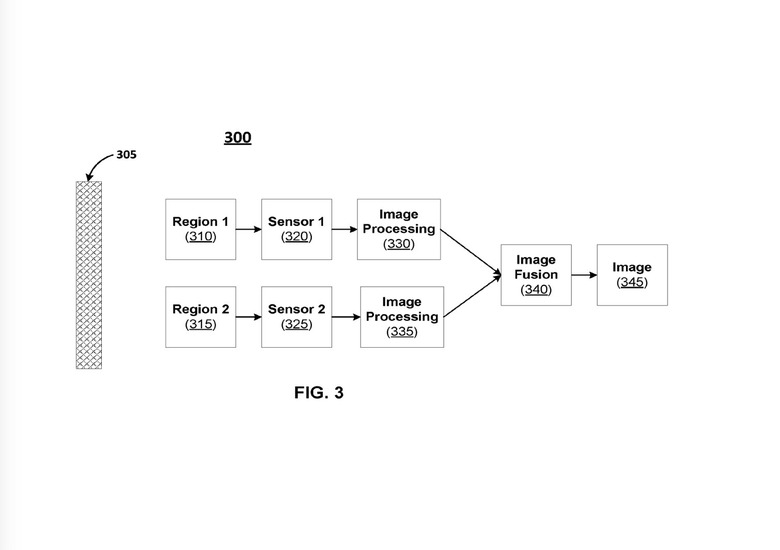

The patent application covers only part of the story of Pixel phones with under-display cameras. It focuses on producing high-quality selfies by using not one but two distinct under-screen cameras, each capturing different kinds of image data.

One camera could use a monochrome sensor, while the other would use a color (RGB) sensor. The display area above each sensor would have its own LED or transistor layout, which blocks light in a particular way. Each camera could then specialize in a specific characteristic, for example, sharpness for the monochrome sensor and color for the RGB one:

[0038] In an example implementation, camera 165 can include an RGB sensor, and the first display region 160 can have a circular LED or transistor layout structure.

Furthermore, camera 175 can include a monochrome sensor, and the second display region 170 can have a rectangular LED or transistor layout structure. The combination of an RGB sensor and a circular layout structure can generate signals causing an image to have a first characteristic(s) (e.g., quality, sharpness, distortion, and the like) based on light distortion and camera sensitivity. The combination of a monochrome sensor and a rectangular layout structure can generate signals that can generate signals causing an image to have a second characteristic(s) (e.g., quality, sharpness, distortion, and the like) based on light distortion and camera sensitivity.

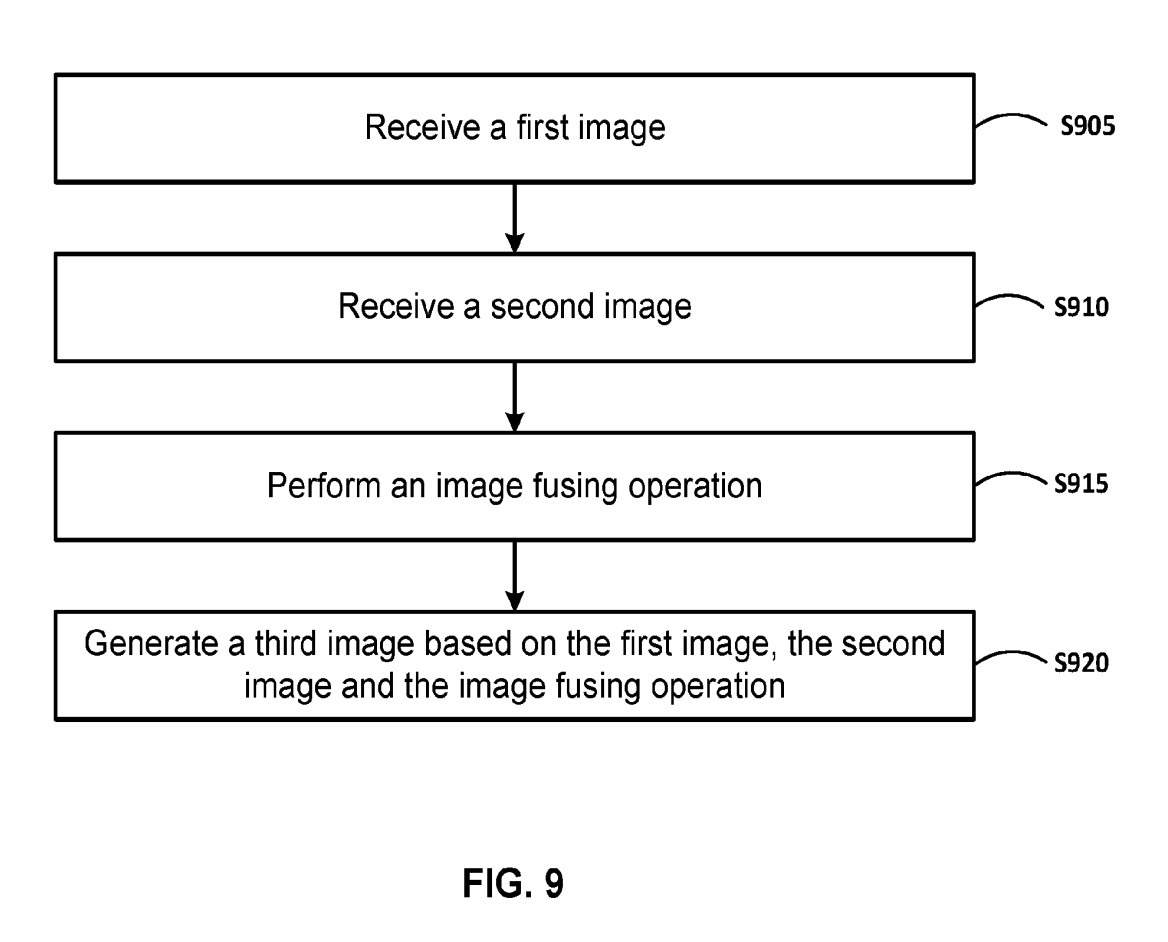

For example, the rectangular layout structure allows more light to pass through and also generates images with better sharpness, and the monochrome sensor is more suitable than the RGB sensor to pick up these characteristics. Overall, the first display-sensor combination and the second display-sensor combination can be designed to pick up first and second characteristics that are complementary to each other. The two images can be fused afterwards so that the resulting image has both complementary characteristics.

Software would then fuse the two images into the best possible selfie: a photo with great sharpness and color fidelity, built from information captured by two different cameras.
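The patent doesn't say how the two images would be fused. One simple, well-known way to combine a monochrome frame with a color frame is luminance substitution, the same idea behind pan-sharpening in satellite imaging: keep the color information from the RGB image and take the brightness detail from the sharper monochrome one. The Python sketch below illustrates that idea under loud assumptions: both frames are already aligned pixel-for-pixel at the same resolution, pixels are stored as flat lists, and the function names are hypothetical. It is not Google's actual pipeline.

```python
"""Illustrative mono + RGB fusion by luminance substitution.

Assumptions (hypothetical, not from the patent): both frames are already
aligned pixel-for-pixel, have the same resolution, and are stored as flat
lists. This is a generic technique, not Google's method.
"""

from typing import List, Tuple

RGB = Tuple[int, int, int]


def clamp(value: float) -> int:
    """Round and clamp a channel value to the 8-bit range 0-255."""
    return max(0, min(255, round(value)))


def rgb_to_ycbcr(r: int, g: int, b: int) -> Tuple[float, float, float]:
    """Full-range BT.601 (JPEG-style) RGB -> YCbCr conversion."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def ycbcr_to_rgb(y: float, cb: float, cr: float) -> RGB:
    """Inverse of rgb_to_ycbcr, clamped to 8-bit channels."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return clamp(r), clamp(g), clamp(b)


def fuse_mono_rgb(mono: List[int], color: List[RGB]) -> List[RGB]:
    """Keep the chroma (Cb, Cr) from the color frame and take the
    brightness (Y) from the monochrome frame, assumed to be sharper."""
    if len(mono) != len(color):
        raise ValueError("frames must have the same number of pixels")
    fused = []
    for luma, (r, g, b) in zip(mono, color):
        # Discard the RGB sensor's own brightness; keep only its color.
        _, cb, cr = rgb_to_ycbcr(r, g, b)
        fused.append(ycbcr_to_rgb(luma, cb, cr))
    return fused


if __name__ == "__main__":
    mono_frame = [200, 50]                      # brightness from the mono sensor
    color_frame = [(180, 40, 40), (128, 128, 128)]  # color from the RGB sensor
    print(fuse_mono_rgb(mono_frame, color_frame))
```

For a neutral gray pixel, the fused result simply takes the monochrome sensor's brightness, while a colored pixel keeps its hue and borrows the mono frame's luminance. A real camera pipeline would also have to align the two frames, correct for the light each display layout blocks, and handle noise, none of which this sketch attempts.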

Current under-display cameras use just one camera sensor placed under the display.

That said, there's no telling whether Google will go forward with this idea. This might just be a patent that helps the company cover all its bases. Placing two cameras under the display also means Google has to make two separate parts of the OLED screen work as seamless displays when the cameras aren't in use. Presumably, a separate patent application would cover that technology.

As with any technology that appears in patents and patent applications, there's no guarantee it'll ever reach a consumer product, or any indication of how long that might take.

Separately, I can't help but wonder whether placing two cameras under the screen of a Pixel phone would enable 3D face recognition like the iPhone's Face ID.

Meanwhile, we'll get the Pixel 8 series this October, and the new phones will look much like their predecessors, hole-punch camera design included.