iOS 18 Vocal Shortcuts Help Take GPT-4o To The Next Level

Apple announced a variety of accessibility features for the iPhone that will be part of iOS 18 in anticipation of Global Accessibility Awareness Day. It's becoming a tradition for Apple to detail new accessibility features for its next-gen operating systems in May, ahead of the WWDC keynote in June, where Apple will introduce those operating systems.

Among the various exciting additions to the iPhone's growing list of accessibility features, there's a new Vocal Shortcuts tool that I find interesting. As the name implies, you'll be able to assign voice shortcuts to actions. And I think Vocal Shortcuts could be very useful for triggering AI apps on the handset.

Specifically, I think Vocal Shortcuts might help me launch ChatGPT by voice. From there, I'd be just one touch away from talking to GPT-4o, OpenAI's brand-new model.

GPT-4o is easily one of the best AI features launching this week. That might be strange to hear, considering that Google delivered a big I/O 2024 keynote event centered around AI. Google couldn't stop talking about incorporating Gemini into its products. Artificial intelligence was such a big topic that Android seemed like an afterthought.

Despite that, the live ChatGPT demos OpenAI offered a day before I/O 2024 made a big impression. GPT-4o can handle text, voice, image, and video inputs simultaneously. You can interrupt it mid-response while chatting, and the experience feels like talking to a person.

That's a big upgrade for ChatGPT, and you can use the multimodal GPT-4o on iPhone right now via the ChatGPT app. You don't have to wait for iOS 18.

After I saw the two AI presentations, I said that Apple can't deliver real iPhone AI experiences in iOS 18 without including ChatGPT or Gemini in the operating system. Given the current state of Siri, I'd rather invoke GPT-4o than Apple's assistant. While Siri improvements are probably part of the iOS 18 rollout, I don't think the assistant will get chatbot abilities.

How do Vocal Shortcuts fit into all of this? Ideally, ChatGPT will be integrated into iOS 18 so I can easily trigger it on my iPhone. But if Apple doesn't partner with OpenAI or Google, Vocal Shortcuts might help me get the functionality I want.

Here's how Apple describes Vocal Shortcuts:

With Vocal Shortcuts, iPhone and iPad users can assign custom utterances that Siri can understand to launch shortcuts and complete complex tasks.

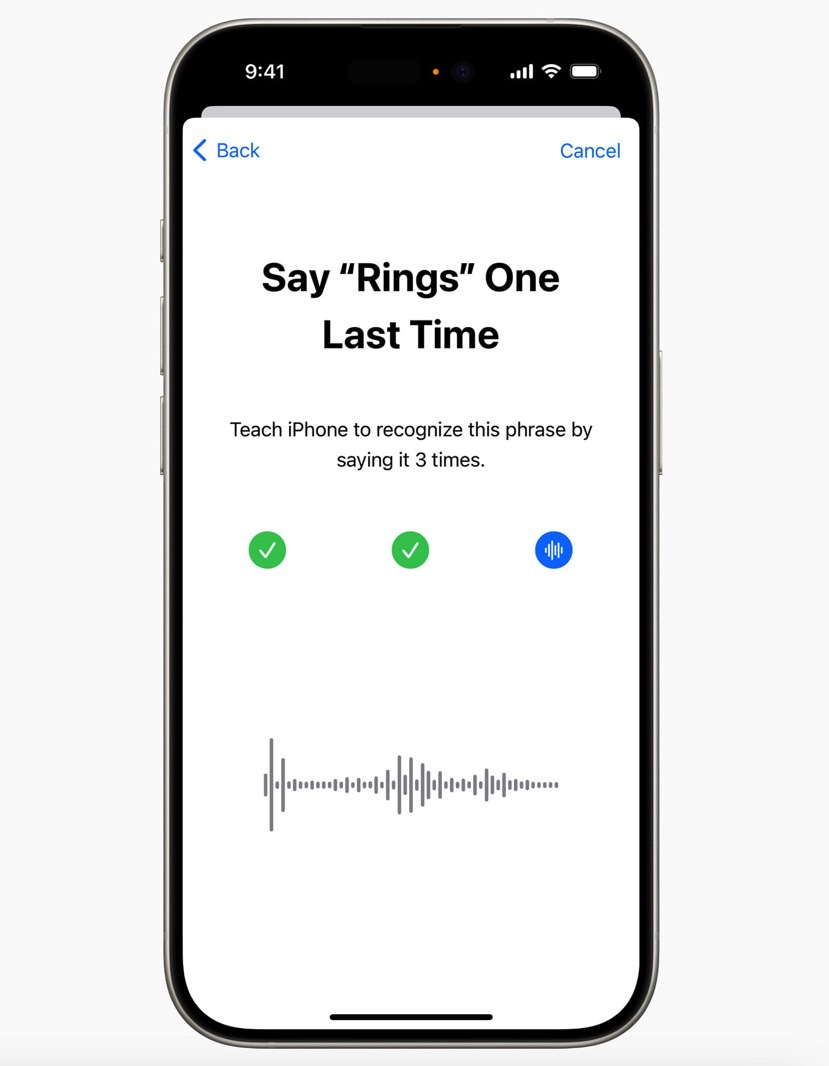

Apple offers an example of how the feature will work. You'll train the iPhone to recognize a specific phrase by saying it three times. Then, you'll assign that phrase to the complex task you want it to perform.

I'd set up a Vocal Shortcut to open the ChatGPT app. If possible, I'd set it up to trigger GPT-4o's voice ability. That way, I'd be one voice command away from asking ChatGPT questions.

I could just as easily create Vocal Shortcuts for Gemini and any other AI assistant available as an iPhone app, so whichever assistant I need would be a single command away.

I'm still speculating, as the Vocal Shortcuts feature isn't available yet. I'll have to wait for the first iOS 18 beta to try it out.

Remember that you can already tell Siri to launch any iPhone app. That's not what I want, however. I'm hoping Vocal Shortcuts will give me more granular control over the app features I can launch by voice.

Finally, I'll note that I plan to upgrade to an iPhone 16 model later this year. I'll get access to the Action button, which can also be used as an app shortcut. If Vocal Shortcuts fail me, I could still pair ChatGPT with that button.