These Google AI Apps Are Going To Be Amazing On Apple's Vision Pro

Apple unveiled the Vision Pro several months before the spatial computer is ready to ship because this is a first-gen device. Apple may have shown us its vision for the headset, pun intended, but it's third-party developers that will come up with exciting Vision Pro apps to convince customers to press that purchase button. Moreover, Apple needs more time to market the device and let prospective buyers get used to that sky-high $3,499 price. Again, this is where third-party apps could help sway some consumers.

While we wait for developers to show off their Vision Pro app ideas, I can't help but notice that Google has several apps ready that would be a great fit for Apple's headset. And they're apps that are already infused with AI, which is obviously a key feature of the Vision Pro headset.

From the get-go, I told you the Vision Pro would need a ChatGPT-like generative AI product. Thanks to its new eye-and-hand interaction model, the Vision Pro will make computing faster than ever. Vision Pro also supports voice input, and that's where a great AI app will shine. It might be a version of Siri or whatever name Apple chooses for such an assistant.

Then, I highlighted a few apps I'd like to use in augmented reality on the Vision Pro. One of them came from Redditors who thought of an app that lets you try furniture before you buy it. I said at the time the same principle could apply to other things on the Vision Pro, like clothes and shoes.

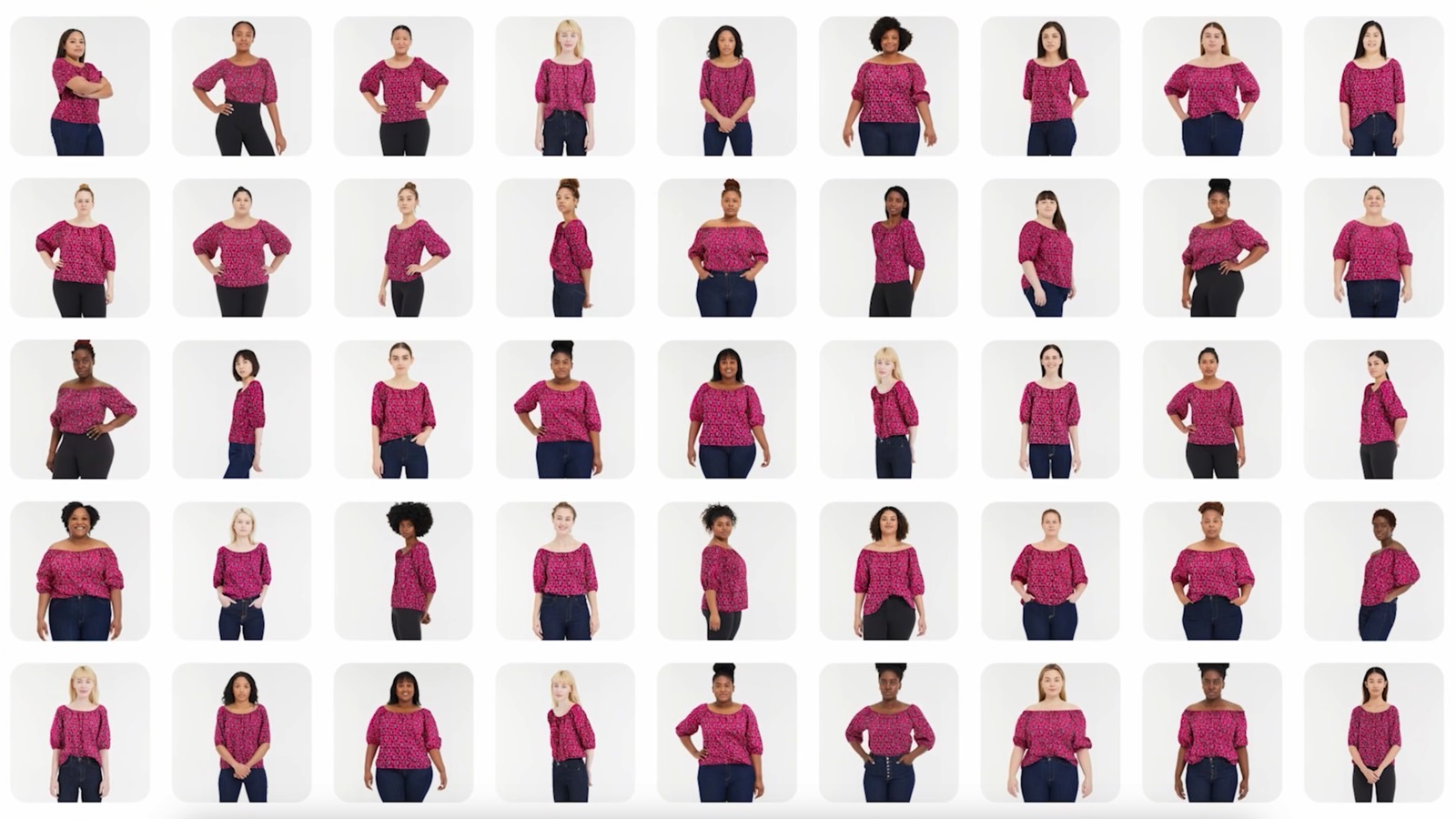

That was before Google's barrage of AI announcements this week. One of the new features Google brought to Search is a shopping experience called virtual try-on. Google shows the same clothing item on different models so you can get a better idea of how it would fit you. Here's how Google describes it:

> Our new generative AI model can take just one clothing image and accurately reflect how it would drape, fold, cling, stretch, and form wrinkles and shadows on a diverse set of real models in various poses. We selected people ranging in sizes XXS-4XL representing different skin tones (using the Monk Skin Tone Scale as a guide), body shapes, ethnicities, and hair types.

For now, that happens on conventional screens, whether it's an iPhone or a Chromebook. But Google could transform that experience for AR devices like the Vision Pro.

Google could use the same algorithms to let you try on the clothes you want in the comfort of your own home. Fashion brands could build similar features into their own AR shopping experiences.

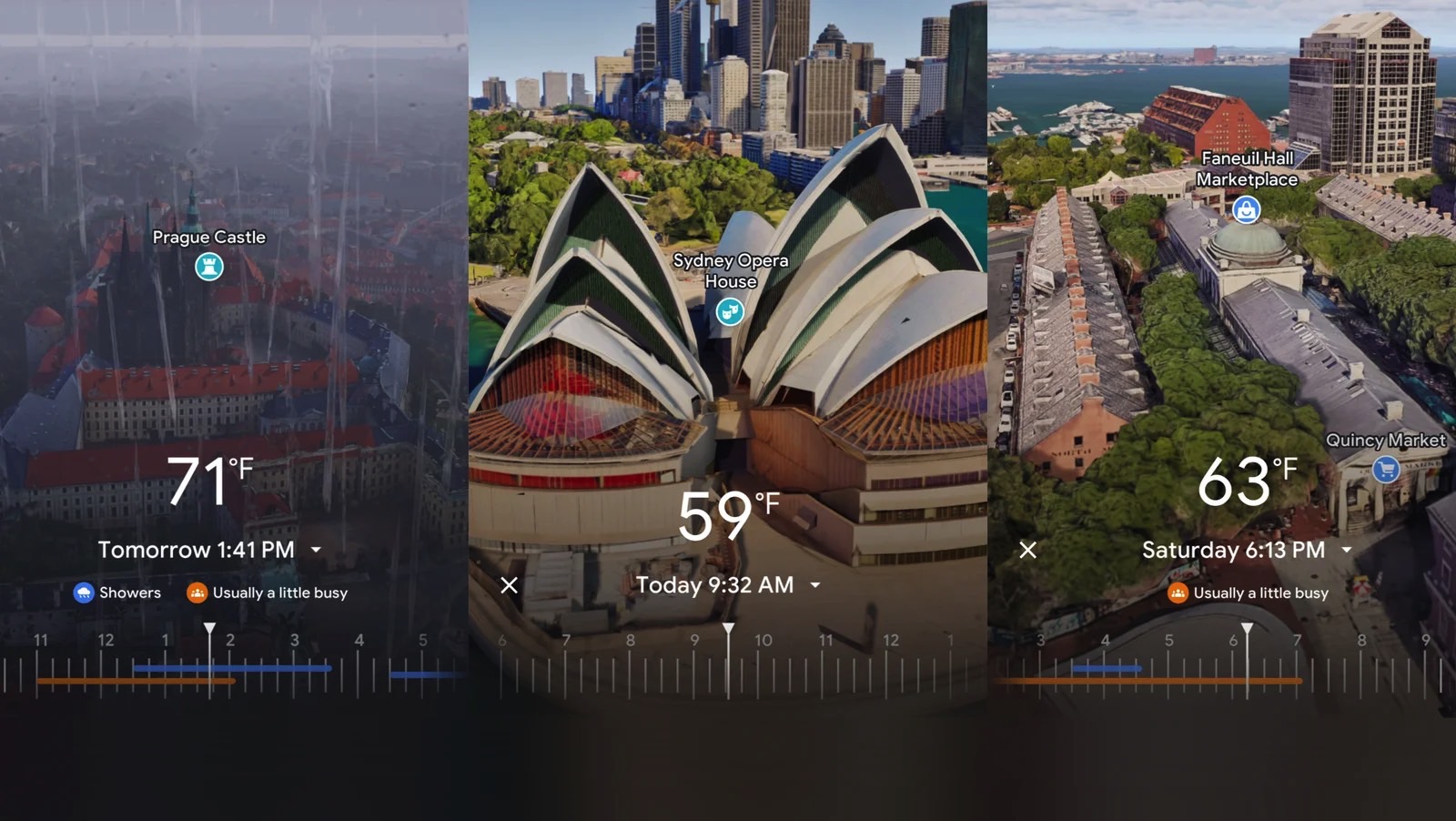

That wasn't Google's only new AI-related announcement. The company also expanded Immersive View in Google Maps to more landmarks around the world. The feature gives you a 3D model of tourist attractions, which AI builds by stitching together billions of images.

Immersive View gives you a better idea of what your next vacation spot looks like. But the same feature could look even better on Vision Pro than on an Android device or a Mac. You could actually be immersed in Google Maps without leaving the house, using your eyes and hands to navigate instead of walking.

Moving on, Google Lens is also getting better AI powers, like the ability to search for possible skin conditions from a photo. That's just one thing Lens can do, and the app could be even better on Vision Pro. You could use it to look at various images and ask questions about their contents.

You'd still be at home, but Vision Pro will let you run all sorts of apps, including web browsers. You could just look at something on a page and ask Lens what it is.

I'd also name Google Bard and the new Search Generative Experience (SGE), Google's AI-powered Search, as great Google apps for Vision Pro. That's because I keep saying Vision Pro needs AI apps to make spatial computing even better.

I'm only speculating here, as Google has not announced any of these apps for Vision Pro. But Google will want to be the main search engine on spatial computers, so we'll likely see these apps come to Vision Pro soon.

Not to mention that, unlike a certain Meta CEO, Google's Sundar Pichai is actually excited about the Vision Pro.