Rotten Tomatoes Wants You To Think The Little Mermaid Remake Earned A Near-Perfect Audience Score

If you haven't yet gotten around to taking your family to see Disney's live-action remake of The Little Mermaid, chances are you'll first do a bit of research online to see whether this big new release is worth your while. Notwithstanding the controversy attached to the movie, with the casting of Halle Bailey as the titular mermaid having sparked a racist backlash, some potential moviegoers will want to know in advance what critics and/or other viewers think.

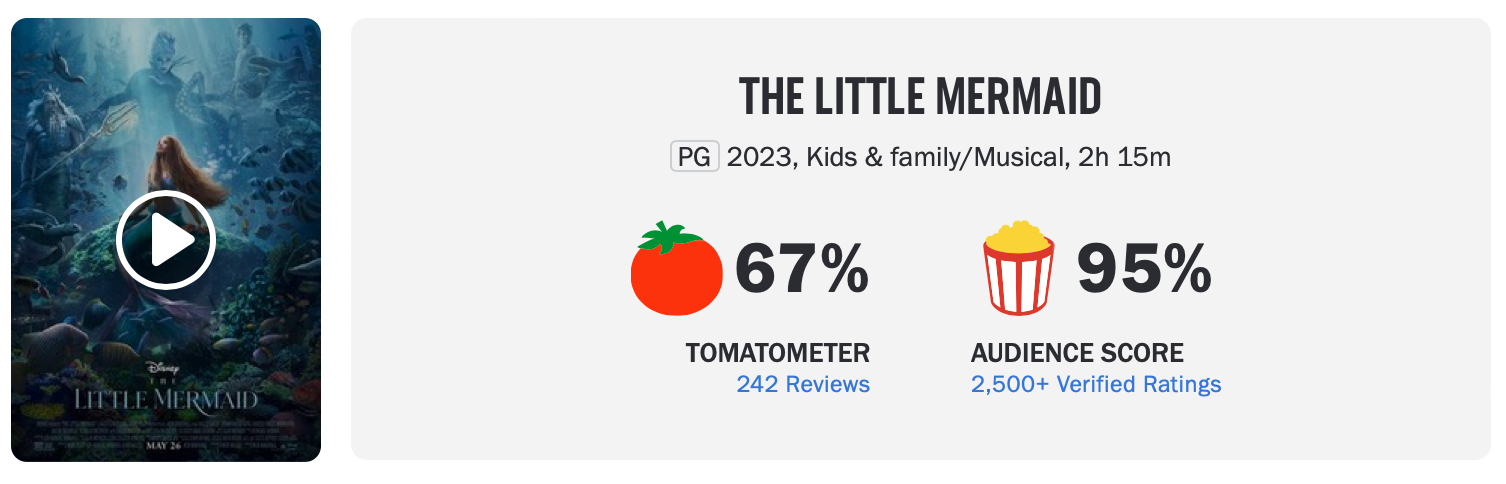

So let's say that includes you — that you want to scan some early reviews and audience feedback about the movie, which finally hit theaters ahead of the long Memorial Day weekend. If you head over to Rotten Tomatoes right now, for example, here's what you see for The Little Mermaid:

Critics have clearly responded to the movie with a collective "meh," since that 67% score above is just a few percentage points away from the site's "rotten" designation that starts at 59%. However, take a look at that near-perfect audience score. Quite a lopsided response here, don't you think? The audience score is a very stellar 95%, based on more than 2,500 verified audience ratings (and that "verified" designation is important, which is why we'll come back to it in just a moment).

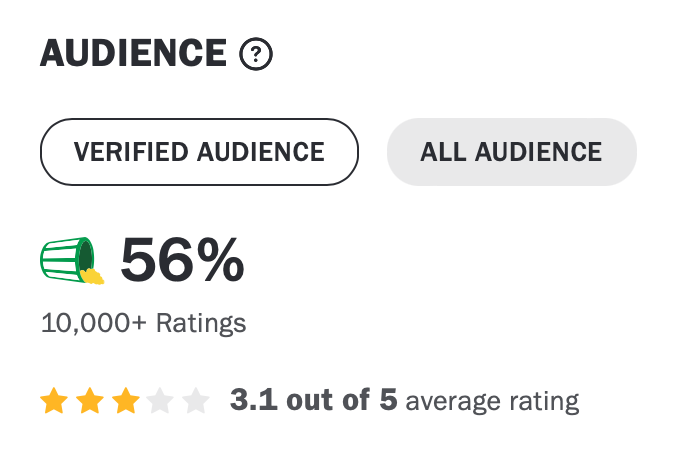

If you're on the site and you click on that "95%," you're then presented with a new box that lets you drill down further into the audience response. You can choose, at that point, to see "verified audience" ratings, or "all audience" ratings.

You can probably guess where this is going.

The "verified" audience ratings for The Little Mermaid represent a modest subset of the overall pool of ratings the movie has garnered so far on Rotten Tomatoes. That's what the score in the box above represents: 95% of the movie's verified audience ratings are positive.

But something very different happens when you switch over to "all audience" ratings, and it changes the picture dramatically. Compared with the 95% verified audience score, the "all audience" score drops all the way down to just 56%. And whereas the verified score is based on more than 2,500 ratings, the "all audience" score is based on more than 10,000 user ratings.

Here's what's going on.

First, by showing the 95% verified audience score by default, Rotten Tomatoes leaves visitors with the impression that this is the score. Seems like a home run, right? A 95% is near-perfect, so how can you say no to going to the theater for that one?

It's easy to look at this and feel like Rotten Tomatoes is trying to hoodwink audiences about a movie that's become an avatar for the woke-ification of Hollywood. That may or may not be what's going on, but Rotten Tomatoes says that designating some reviews as "verified" is meant to distinguish between people who actually went to the theater and saw a movie, and are thus able to offer a useful take, and someone sitting at home whining about, say, a casting choice.

How does Rotten Tomatoes decide which audience members to verify for a movie like The Little Mermaid, you might ask? Basically, if you bought your ticket through a provider like Fandango, AMC, or Cinemark, all of which have signed up to participate in Rotten Tomatoes' verification program, Rotten Tomatoes can see that you actually bought a ticket to the movie you're reviewing.

Right there, though, is a problem for me, because I can think of a few different ways someone could legitimately buy a ticket and see a particular film without Rotten Tomatoes being able to confirm it (a gift card, a small local theater, etc.). Yet the reviews in those cases, whether angry or fawning about a movie like The Little Mermaid, are hidden behind the verified audience score, and I highly doubt most visitors to the site will click past that number to get more information.

Granted, there absolutely does need to be some way to separate the wheat from the chaff here. So much of what people leave on Rotten Tomatoes isn't a review so much as a comment. The last time I checked the Rotten Tomatoes audience ratings for the Paramount series Yellowstone, for example, there was an audience review right at the top from someone complaining that Yellowstone should have ended by now, and that was it. That was the "review." So I get what the site is trying to do.

At the same time, though, setting up the audience score this way leaves you with the impression that Rotten Tomatoes considers this curated number the real score, while the one it hasn't put its thumb on the scale for is not to be trusted. ("Just take our word for it, these are the only reviews you need to read ...") The point of a site like Rotten Tomatoes is to inform visitors, and it's hard to see how you do that by adding more friction to the process of finding that information.