YouTube Will Tell You When The Video You're Watching Was Generated By AI

YouTube says it will take steps to ensure that generative AI has a place on the video platform while also being responsible with it. In a blog post this week, Jennifer Flannery O'Connor and Emily Moxley, Vice Presidents of Product Management at YouTube, shared several AI detection tools that will help the video platform highlight AI-generated content.

The two said that YouTube is still in the early stages of its work, but that it plans to evolve the approach as the team learns more. For now, though, they shared a couple of ways that the video platform will detect AI-generated content and warn viewers about it.

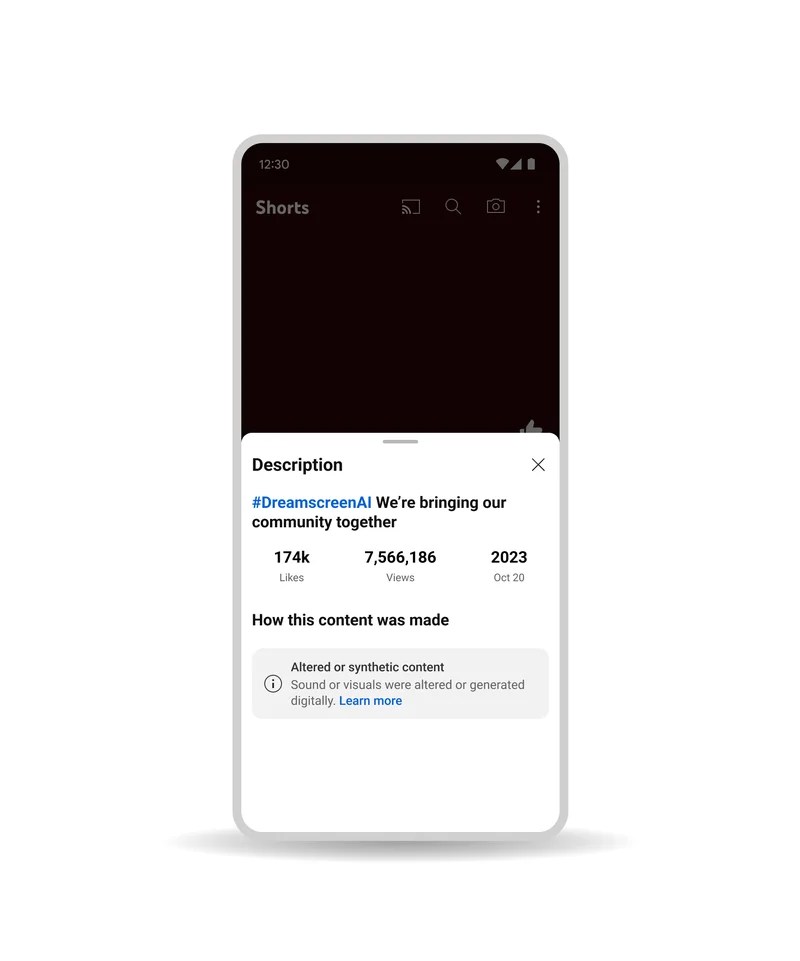

The first method will require disclosure from creators whenever something is created using AI. This means if anything within the video was generated with AI, it should have a disclosure on it, as well as one of several new content labels, helping you pinpoint what was created with AI and what wasn't.

The disclosure requirement will be backed by a system that informs viewers when something they are watching is "synthetic" or AI-generated. If any AI tools were used in the video, that will have to be disclosed to help cut down on the potential spread of misinformation and other serious issues, YouTube notes.

YouTube says it won't stop at labels and disclosures, though. It will also use AI detection tools to help cull videos that violate its community guidelines. Further, the two say that anything created using YouTube's generative AI products will be clearly labeled as altered or synthetic.

In addition, YouTube's future AI detection tools will allow users to request the removal of altered or synthetic content that simulates an identifiable individual, including their face or voice. Requests will go through the privacy request process. YouTube says that not all content submitted this way will be removed, but each request will be evaluated based on a variety of factors.

AI-generated content is here to stay, especially with ChatGPT continuing to offer so much for so many. And while it's unlikely we'll ever see AI completely leave the entertainment medium, at least YouTube is taking some steps to help mitigate the risks it could pose in the long run. Of course, YouTube's track record with community policing hasn't been the best in the past, so it'll be interesting to see how this all plays out going forward.