YouTube Is Making It Harder To Be Fooled By AI-Generated Videos

Artificial intelligence isn't quite on the verge of taking your job or replacing your favorite search engine yet, but it's certainly capable of tricking the average internet user. As such, YouTube will now require creators on its platform to disclose when they use "altered or synthetic media," including generative AI, to create realistic content in their videos.

The word "realistic" marks an interesting and important distinction. Creators do not always have to disclose that their video contains AI-generated content. If the content is "clearly unrealistic, animated, includes special effects, or has used generative AI for production assistance," creators don't have to tell viewers that they used AI.

Here are a few examples of AI-generated content that would require disclosure:

- Using the likeness of a realistic person: Digitally altering content to replace the face of one individual with another's or synthetically generating a person's voice to narrate a video.

- Altering footage of real events or places: Such as making it appear as if a real building caught fire, or altering a real cityscape to make it appear different than in reality.

- Generating realistic scenes: Showing a realistic depiction of fictional major events, like a tornado moving toward a real town.

Basically, if your video could fool viewers into believing something that doesn't exist or didn't happen, you need to let them know that it was altered or made with AI.

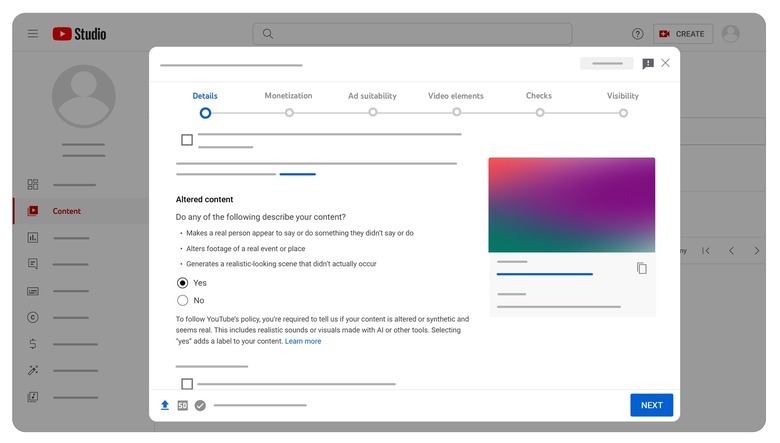

Creators will now see an Altered content field under the Details section in YouTube Studio. If their video meets the criteria described above, they will need to select "Yes" in response to the disclosure questions. For most videos, YouTube will then add a label in the description field to indicate that the video features altered or synthetic content.

For videos covering sensitive topics, like elections, conflicts, natural disasters, or health, an additional, more prominent label might appear in the video player itself to further reduce the chance of viewers being deceived.

YouTube says that these labels will begin rolling out on the mobile app first, followed by the desktop and TV apps. The company also notes that it might add these labels to videos even if the creator chose not to disclose the use of AI, depending on the content.