Viral Video Of TikToker Using AI To Catch Cheating Boyfriend Is Totally Fake

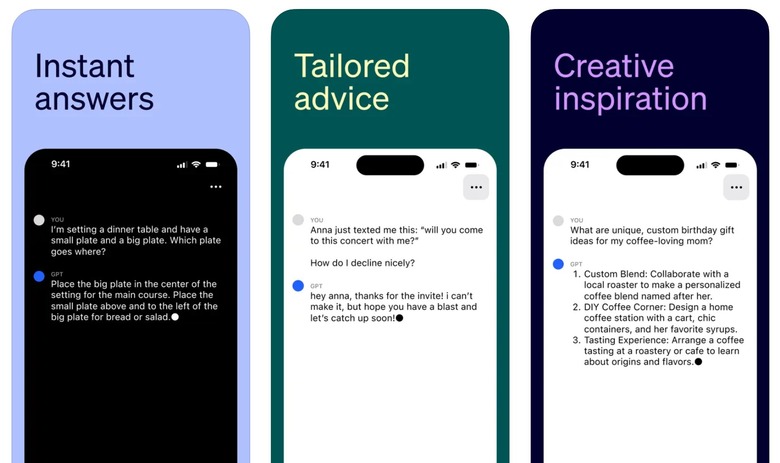

After ChatGPT burst onto the scene, the buzz surrounding generative AI software exploded. These programs can generate more than just text. Some create amazing imagery from text prompts, and others can compose music. Some AI apps can even clone someone's voice to perfection. That's the trick a popular TikToker used in a viral video where she purportedly caught her boyfriend cheating with the help of AI.

It turns out the video was completely fake. It was just a carefully staged prank. After all, this is TikTok, so you have to question everything you see, especially when it involves generative AI. While this particular AI stunt was fake, it's actually within the realm of possibility. But the stars would have to align perfectly for you to pull it off.

The viral TikTok clip

Mia Dio published her short clip in mid-April, and the video went viral. In it, you can see Dio using software to clone her boyfriend's voice based on audio from voicemails he left her. She then uses his iPad to place a FaceTime audio call to a friend of his and gets the friend to confirm that her boyfriend had been kissing someone else the night before.

@miadio I cloned my boyfriends voice using #AI to find out if he was cheating on me. #fyp #cheating #boyfriend #voicecloning

The clip has topped 2.7 million views on TikTok, making it one of the TikToker's most-watched videos in recent months.

It was really a prank

Dio promises a reveal to her boyfriend at the end of the clip. Instead, we learn from an interview that Dio, her boyfriend, and his friend worked together on the prank:

Important context here is that this video was fully a prank on my fans, and both my boyfriend and friend were in on it.

One night, him and I are talking and it comes up how you can genuinely clone someone's voice.

I remember watching the news and they were talking about that AI kidnapping scam, and I thought it was crazy.

Right around the same time, the AI Drake song went super viral and I was blown away at how realistic it sounded.

From there, I began thinking about scenarios in my own life that an AI voice imitation would have been useful.

I thought about an ex who had cheated on me and how this type of technology would have saved me a lot of time.

We decided to try it with his voice and we were shocked at how close the results were – and we came up with the idea for the fictional prank video together.

Dio used an app called ElevenLabs to clone her boyfriend's voice for under $5. Then she created a "natural-sounding" script for the FaceTime audio call. The generative AI delivered the speech you hear in the clip.

Whenever I talk about the capabilities of generative AI software, I remind you that ChatGPT-like programs can offer false or misleading results. Add in pranks like Dio's that are passed off as real, and you end up with misleading content all over the internet.

The TikTok clip went viral because people believed it was real, as she said in the interview:

We were surprised at the number of people who believed this happened.

The reaction to the cheating online was largely supportive of me and I appreciated the love my fans gave, even though it ended up being a prank.

Can you catch someone cheating on you with programs like ChatGPT?

I said before that you're unlikely to replicate the scenario in the viral TikTok clip above. The stars have to be aligned perfectly for you to succeed. Getting a recording of your significant other isn't difficult, and you can clone their voice to generate any audio using AI.

The first real problem is that you can't foresee what the person on the other end of the call might say or ask. You're using a script that can't be changed in real time, so you can't adapt your responses and reactions to what that friend has to say.

Secondly, who are you going to call? Will you just try one of their friends at random and see if they confirm your suspicions? The script Dio used only "worked" because her boyfriend was supposedly drunk the night before.

Finally, placing a FaceTime audio call like that might be easier said than done. You must first access your significant other's device to make the call. Then you have to hope their friend picks up. If they don't, they might call back via FaceTime audio or a regular call, or send a text, and that call or message will go to your significant other's iPhone.

All of that makes Dio's scenario extremely unlikely. I'm not saying you shouldn't use generative AI software to spy on your significant other. Or that you should. But the likelihood that you can pull it off is close to zero. Addressing the matter directly might be easier than concocting schemes to catch someone cheating.