This Apple AI Study Suggests ChatGPT And Other Chatbots Can't Actually Reason

Companies like OpenAI and Google will tell you that the next big step in generative AI experiences is almost here. ChatGPT's big o1-preview upgrade is meant to prove that next-gen experience. o1-preview, available to ChatGPT Plus and other premium subscribers, can supposedly reason. Such an AI tool should be more useful when trying to find solutions to complex questions that require complex reasoning.

But if a new AI paper from Apple researchers is correct in its conclusions, then ChatGPT o1 and all other genAI models can't actually reason. Instead, they're simply matching patterns from their training data sets. They're pretty good at coming up with solutions and answers, yes. But that's only because they've seen similar problems and can predict the answer.

Apple's AI study shows that changing trivial variables in math problems that wouldn't fool kids or adding text that doesn't alter how you'd solve the problem can significantly impact the reasoning performance of large language models.

Apple's study, available as a pre-print version at this link, details the types of experiments the researchers ran to see how the reasoning performance of various LLMs would vary. They looked at open-source models like Llama, Phi, Gemma, and Mistral and proprietary ones like ChatGPT o1-preview, o1 mini, and GPT-4o.

The conclusions are identical across tests: LLMs can't really reason. Instead, they're trying to replicate the reasoning steps they might have witnessed during training.

The scientists developed a version of the GSM8K benchmark, a set of over 8,000 grade-school math word problems that AI models are tested on. Called GSM-Symbolic, Apple tests involved making simple changes to the math problems, like modifying the characters' names, relationships, and numbers.

The image in the following tweet offers an example of that. "Sophie" is the main character of a problem about counting toys. Replacing the name with something else and changing the numbers should not alter the performance of reasoning AI models like ChatGPT. After all, a grade schooler could still solve the problem even after changing these details.

3/ Introducing GSM-Symbolic—our new tool to test the limits of LLMs in mathematical reasoning. We create symbolic templates from the #GSM8K test set, enabling the generation of numerous instances and the design of controllable experiments. We generate 50 unique GSM-Symbolic... pic.twitter.com/6lqH0tbYmX

— Mehrdad Farajtabar (@MFarajtabar) October 10, 2024

The Apple scientists showed that the average accuracy dropped by up to 10% across all models when dealing with the GSM-Symbolic test. Some models did better than others, with GPT-4o dropping from 95.2% accuracy in GSM9K to 94.9% in GSM-Symbolic.

8/ This begs the question: Do these models truly understand mathematical concepts? Introducing #GSM_NoOp! We add a single clause that seems relevant but doesn't contribute to the overall reasoning (hence "no-op"). Check out what happens next! pic.twitter.com/P3I4kyR56L

— Mehrdad Farajtabar (@MFarajtabar) October 10, 2024

That's not the only test that Apple performed. They also gave the AIs math problems that included statements that were not really relevant to solving the problem.

Here's the original problem that the AIs would have to solve:

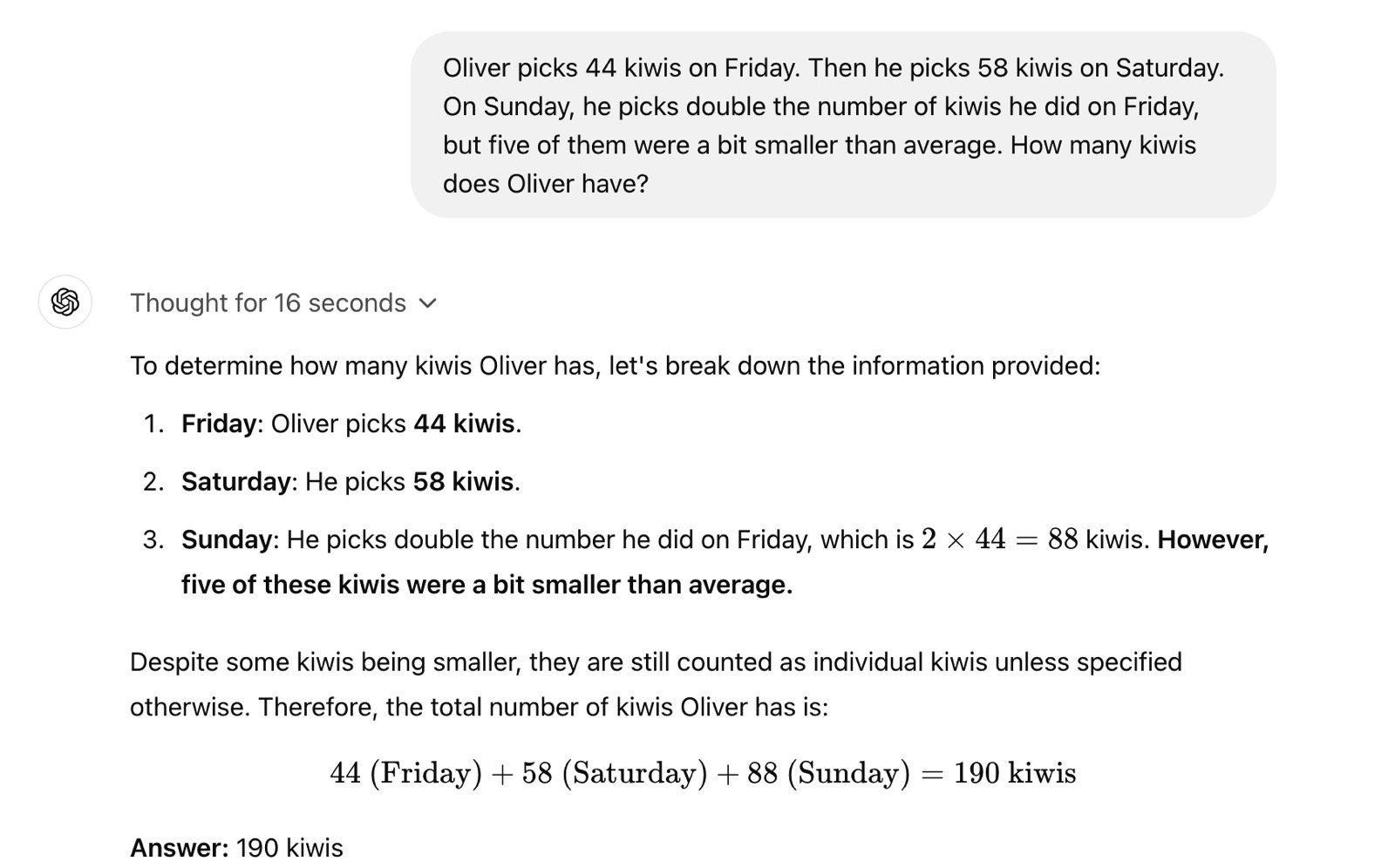

Oliver picks 44 kiwis on Friday. Then he picks 58 kiwis on Saturday. On Sunday, he picks double the number of kiwis he did on Friday. How many kiwis does Oliver have?

Here's a version of it that contains an inconsequential statement that some kiwis are smaller than others:

Oliver picks 44 kiwis on Friday. Then he picks 58 kiwis on Saturday. On Sunday, he picked double the number of kiwis he did on Friday, but five of them were a bit smaller than average. How many kiwis does Oliver have?

The result should be identical in both cases, but the LLMs subtracted the smaller kiwis from the total. Apparently, you don't count the smaller fruit if you're an AI with reasoning abilities.

Adding these "seemingly relevant but ultimately inconsequential statements" to GSM-Symbolic templates leads to "catastrophic performance drops" for the LLMs. Performance for some models dropped by 65%. Even o1-preview struggled, showing a 17.5% performance drop compared to GSM8K.

Interestingly, I tested the same problem with o1-preview, and ChatGPT was able to reason that all fruits are countable despite their size.

Apple researcher Mehrdad Farajtabar has a thread on X that covers the kind of changes Apple performed for the new GSM-Symbolic benchmarks that include additional examples. It also covers the changes in accuracy. You'll find the full study at this link.

1/ Can Large Language Models (LLMs) truly reason? Or are they just sophisticated pattern matchers? In our latest preprint, we explore this key question through a large-scale study of both open-source like Llama, Phi, Gemma, and Mistral and leading closed models, including the... pic.twitter.com/yli5q3fKIT

— Mehrdad Farajtabar (@MFarajtabar) October 10, 2024

Apple isn't going after rivals here; it's simply trying to determine whether current genAI tech allows these LLMs to reason. Notably, Apple isn't ready to offer a ChatGPT alternative that can reason.

That said, it'll be interesting to see how OpenAI, Google, Meta, and others challenge Apple's findings in the future. Perhaps they'll devise other ways to benchmark their AIs and prove they can reason. If anything, Apple's data might be used to alter how LLMs are trained to reason, especially in fields requiring accuracy.