OpenAI's ChatGPT GPT-4 Is Getting A Cheaper And Faster Turbo Mode

ChatGPT and OpenAI's GPT-4 are about to get some significant improvements. During today's DevDay, the Microsoft-backed company revealed GPT-4 Turbo, a new version of its GPT-4 model that is faster and cheaper for developers to use. Alongside the new Turbo model, which is available in preview, OpenAI also detailed several other improvements coming to the model.

For starters, GPT-4 Turbo offers a 128K-token context window and launched in preview today. The new model's training data extends to April 2023, and the larger context window means it can fit the equivalent of 300 pages of text into a single prompt.
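To put the 128K figure in perspective, here is a rough sketch that estimates whether a document fits in the window. It assumes about four characters per token (a common rule of thumb for English text, not an exact tokenizer count), a hypothetical 1,600-character page, and a 4,096-token reserve for the model's reply. All three numbers are illustrative assumptions, so real token counts will differ.

```python
# Rough check of whether a text fits in GPT-4 Turbo's 128K-token context window.
# ASSUMPTION: ~4 characters per token is a heuristic, not the real tokenizer.
CONTEXT_WINDOW_TOKENS = 128_000
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    # Ceiling division so any non-empty text counts as at least one token.
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 4_096) -> bool:
    # The prompt and the reply share the same window, so keep room for the reply.
    # ASSUMPTION: the 4,096-token reserve is an illustrative default.
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    page = "x" * 1_600  # hypothetical page of ~1,600 characters
    print(estimate_tokens(page * 300))  # 120000
    print(fits_in_context(page * 300))  # True
    print(fits_in_context(page * 320))  # False
```

With these assumptions, 300 pages come to roughly 120,000 tokens, which lines up with OpenAI's 300-page comparison.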

Further, OpenAI says GPT-4 Turbo costs one-third as much as the older GPT-4 model for input tokens and half as much for output tokens. It is available now to all paying developers, who can try it by passing `gpt-4-1106-preview` as the model name in the API.
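For developers weighing the switch, the sketch below compares the cost of a single request on both models and builds (without sending) a Chat Completions request that selects GPT-4 Turbo by its model name. The per-1K-token prices are the list prices we understand were announced at DevDay (GPT-4 at $0.03 input and $0.06 output, GPT-4 Turbo at $0.01 input and $0.03 output); treat them as assumptions and check OpenAI's pricing page before relying on them. The request uses only Python's standard library, and the API key shown in the usage note is a placeholder.

```python
import json
import urllib.request

# ASSUMPTION: per-1K-token USD list prices at announcement time; verify before use.
PRICES_PER_1K = {
    "gpt-4": {"input": 0.03, "output": 0.06},
    "gpt-4-1106-preview": {"input": 0.01, "output": 0.03},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    # Input and output tokens are billed at different rates.
    p = PRICES_PER_1K[model]
    return input_tokens / 1000 * p["input"] + output_tokens / 1000 * p["output"]


def build_chat_request(api_key: str, prompt: str) -> urllib.request.Request:
    # Builds, but does not send, a Chat Completions request for GPT-4 Turbo.
    body = {
        "model": "gpt-4-1106-preview",
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        "https://api.openai.com/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

For example, a request with 10,000 input tokens and 1,000 output tokens gives `round(request_cost("gpt-4", 10_000, 1_000), 2)` of 0.36 versus 0.13 for `gpt-4-1106-preview`. Passing the request to `urllib.request.urlopen` with a real key would actually send it.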

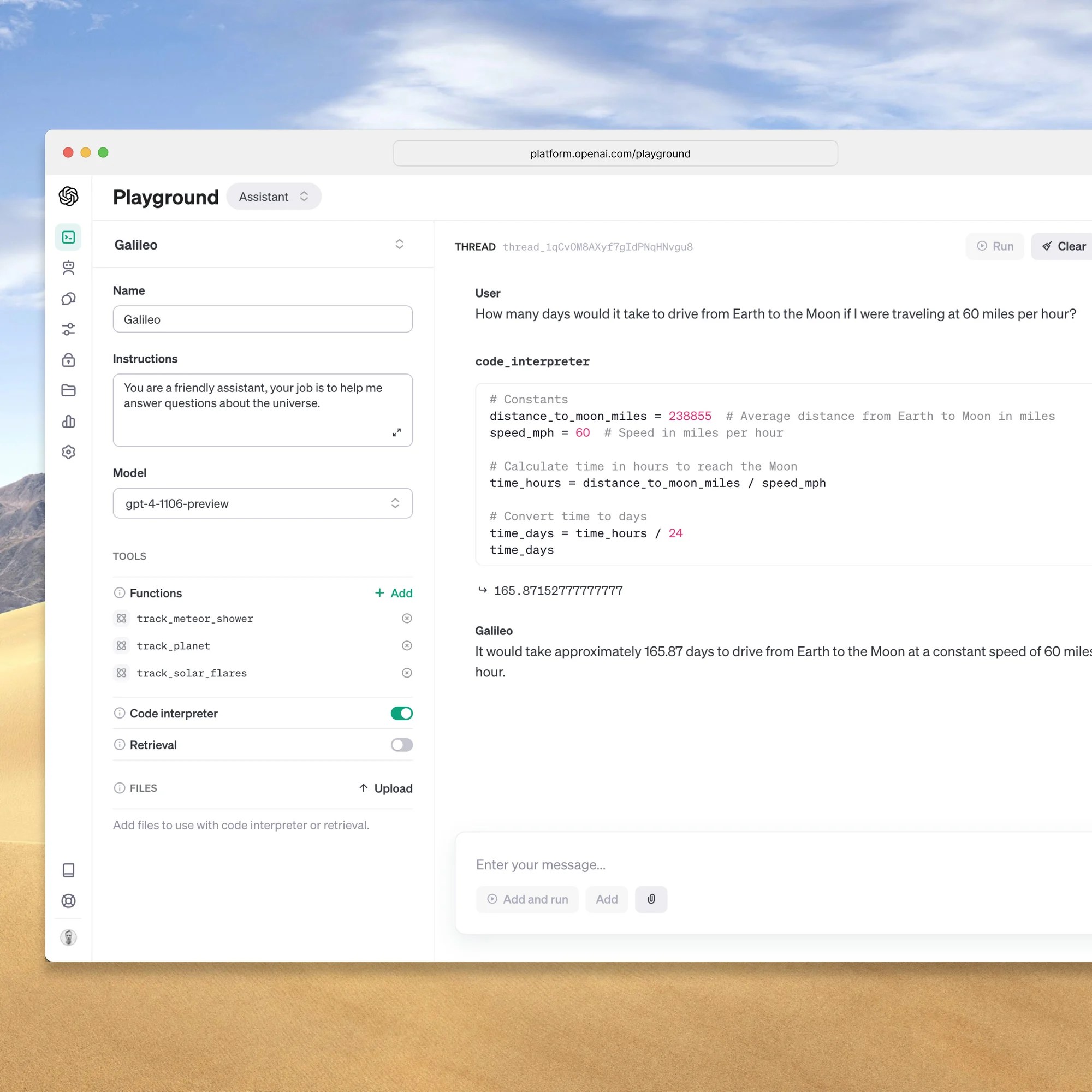

Aside from GPT-4 Turbo, OpenAI also revealed a new Assistants API, which it says should make it easier for developers to build their own assistive AI apps. Each assistant can be given its own goals and can call models and tools. OpenAI demonstrated how quickly a new assistant can be built with the API, which should be welcome news for developers.
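As a rough illustration of what "giving an assistant goals and tools" looks like, the sketch below builds (but does not send) a request that creates an assistant with instructions and the Code Interpreter tool. The endpoint, field names, and `OpenAI-Beta: assistants=v1` header follow the beta API as we understand it at launch; they are assumptions that may have changed since, and the assistant's name and instructions are hypothetical. A full conversation would also need threads, messages, and runs, which are not shown here.

```python
import json
import urllib.request


def build_create_assistant_request(api_key: str) -> urllib.request.Request:
    # ASSUMPTION: field names and beta header match the Assistants API v1 beta.
    body = {
        "name": "Math Tutor",  # hypothetical assistant name
        "instructions": "You are a math tutor. Write and run code to answer questions.",
        "tools": [{"type": "code_interpreter"}],  # built-in tool the assistant may call
        "model": "gpt-4-1106-preview",
    }
    return urllib.request.Request(
        "https://api.openai.com/v1/assistants",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "OpenAI-Beta": "assistants=v1",
        },
        method="POST",
    )
```

The instructions field carries the assistant's goal, while the tools list controls what it is allowed to call.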

The company says it plans to release a stable, production-ready version of GPT-4 Turbo in the next few weeks. Alongside GPT-4 Turbo, OpenAI is also releasing an updated version of GPT-3.5 Turbo that supports a 16K context window by default. The new version also brings improved instruction following, JSON mode, and parallel function calling, all of which GPT-4 Turbo gains as well.
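Two of these features are easy to picture in code. The sketch below builds a request body that turns on JSON mode via `response_format` for the updated `gpt-3.5-turbo-1106` model, and shows how an app might handle parallel function calling, where a single assistant reply can contain several `tool_calls` that each get answered with a `tool` message. The `get_weather` function, the call IDs, and the sample reply are hypothetical stand-ins rather than real API output.

```python
import json
from typing import Any, Callable


def build_json_mode_body(prompt: str) -> dict[str, Any]:
    # JSON mode is requested through response_format; the messages should
    # also mention JSON so the model knows what format is expected.
    return {
        "model": "gpt-3.5-turbo-1106",
        "response_format": {"type": "json_object"},
        "messages": [
            {"role": "system", "content": "Reply in JSON."},
            {"role": "user", "content": prompt},
        ],
    }


def get_weather(city: str) -> str:
    # Hypothetical local function the model may ask the app to call (stubbed).
    return f"Sunny in {city}"


TOOLS: dict[str, Callable[..., str]] = {"get_weather": get_weather}


def run_tool_calls(message: dict[str, Any]) -> list[dict[str, str]]:
    # With parallel function calling, one assistant message may carry several
    # tool calls; each result goes back as a "tool" message keyed by its id.
    results = []
    for call in message.get("tool_calls") or []:
        fn = TOOLS[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append({"role": "tool", "tool_call_id": call["id"], "content": fn(**args)})
    return results


# Hypothetical assistant reply requesting two calls at once.
sample_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Tokyo"}'}},
    ],
}
```

Running `run_tool_calls(sample_message)` returns two `tool` messages, one per call, which the app would append to the conversation before asking the model to continue.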

We saw leaks about these upcoming ChatGPT and GPT-4 features earlier today, but it's good to see OpenAI confirm them, along with the fact that they're already available to developers in a preview launching today. If you're a paying developer, you can try the new features now.