Elon Musk-Backed Lab Uses 100 Years' Worth Of Experience To Make Robot Hands More Human-Like

The AI research initiative that trained AI bots to play Dota 2 (and smoke amateur gamers) now has another win under its belt. Researchers at OpenAI, the lab backed by billionaires such as Elon Musk and Peter Thiel, have taught a robot hand to manipulate objects with human-like dexterity.

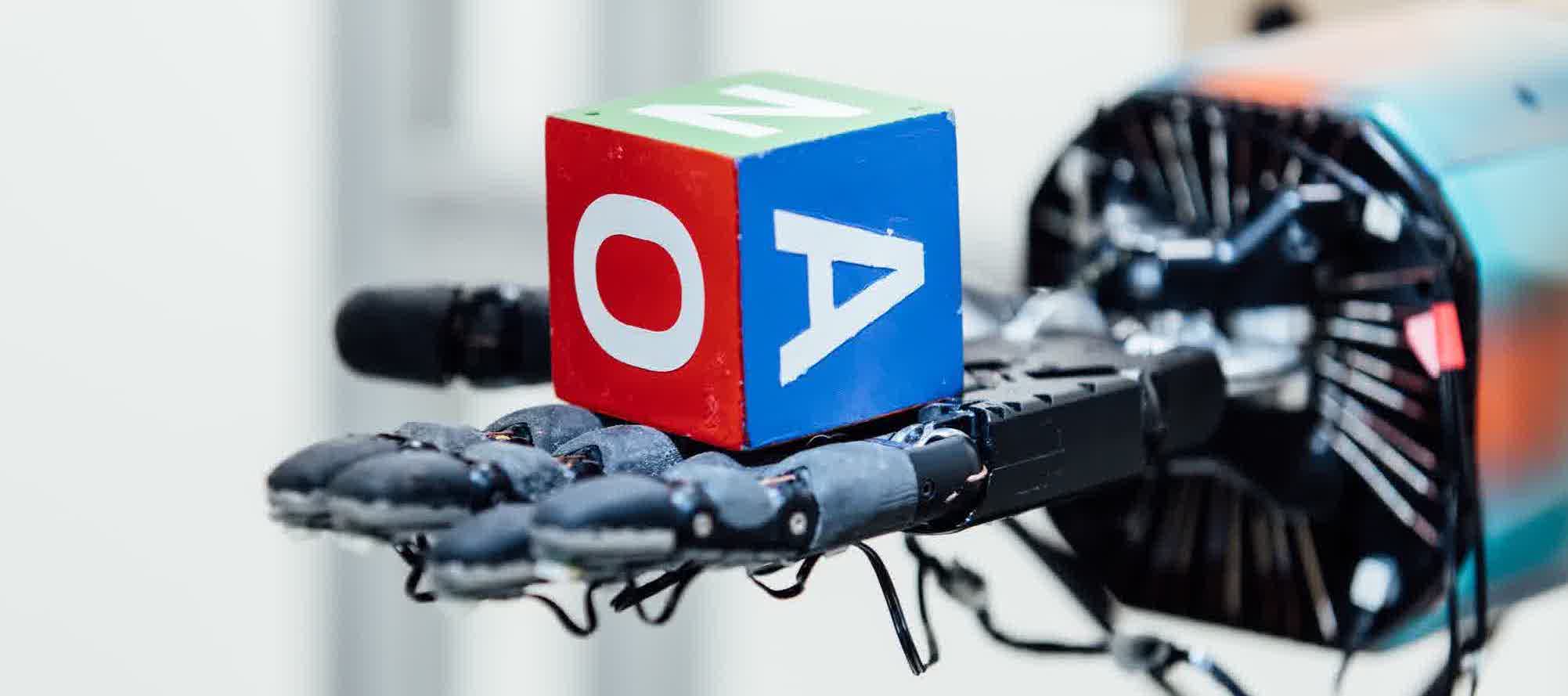

The project, described in the OpenAI research paper "Learning Dexterous In-Hand Manipulation," used reinforcement learning to help a robot hand figure out how to precisely grasp and manipulate objects such as a six-sided cube. The robot learned entirely without human demonstrations, instead accumulating 100 years' worth of simulated experience in a short amount of time.

"While dexterous manipulation of objects is a fundamental everyday task for humans, it is still challenging for autonomous robots," researchers write in the paper. "Modern-day robots are typically designed for specific tasks in constrained settings and are largely unable to utilize complex end-effectors. In contrast, people are able to perform a wide range of dexterous manipulation tasks in a diverse set of environments, making the human hand a grounded source of inspiration for research into robotic manipulation."

Researchers trained a computer vision model to recognize the pose of the manipulated object, using simulated camera images to predict how the object was oriented in the robot hand. The training was computationally demanding: it drew on 384 machines with 16 CPU cores each, or 6,144 cores in total.

To make the learning hold up outside the simulation, researchers randomized many aspects of it, such as gravity and the texture of the cube's surface. The goal was to give the AI a better idea of what manipulating a cube like this in real life would be like, with enough variation that it could learn to cope with conditions it had not encountered before.
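The randomization idea described above is commonly called domain randomization. As a rough illustration only, here is a minimal Python sketch that draws a fresh set of simulator settings for every training episode. It assumes a hypothetical simulator: `SimParams`, `sample_sim_params`, `train`, and every numeric range are illustrative inventions, not taken from OpenAI's paper or code.

```python
import random
from dataclasses import dataclass


@dataclass
class SimParams:
    """One randomized configuration of a hypothetical simulated environment."""
    gravity: tuple[float, float, float]  # gravity vector in m/s^2
    cube_texture_id: int                  # index into a hypothetical texture set


def sample_sim_params(rng: random.Random, num_textures: int = 10) -> SimParams:
    # Perturb gravity slightly around standard Earth gravity (9.81 m/s^2 downward).
    # The +/-0.5 range is an illustrative assumption, not a value from the paper.
    gravity = (
        rng.uniform(-0.5, 0.5),
        rng.uniform(-0.5, 0.5),
        -9.81 + rng.uniform(-0.5, 0.5),
    )
    # Pick a random surface texture for the cube from the hypothetical set.
    cube_texture_id = rng.randrange(num_textures)
    return SimParams(gravity, cube_texture_id)


def train(num_episodes: int, seed: int = 0) -> list[SimParams]:
    """Sample one randomized configuration per episode (hypothetical loop)."""
    rng = random.Random(seed)
    used = []
    for _ in range(num_episodes):
        params = sample_sim_params(rng)
        # A real system would reset the simulator with `params` and run one
        # reinforcement learning episode here; this sketch only records them.
        used.append(params)
    return used


if __name__ == "__main__":
    for p in train(3, seed=42):
        print(p)
```

Because each episode draws new settings, the learner never sees exactly the same physics or appearance twice, which is the intuition behind preparing the AI for real-life conditions rather than for one fixed simulation.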

Smruti Amarjyoti of Carnegie Mellon University's Robotics Institute told The Verge that this research doesn't herald a breakthrough in robotic manipulation, per se. But he did say the hand movements it produced were "graceful" in a way he hadn't realized was achievable with AI.

Other researchers noted that the project still has many limitations: the task was restricted to a hand with its palm facing up, and the cube it handled was not particularly large. It also, again, took 100 years' worth of simulated experience for the robot to learn to do this smoothly.

Nevertheless, the researchers achieved the level of dexterity they were after. Now the race is on to take this ability, a robot beginning to match human motor skills, further: to see what else it can do and what other achievements can be built on top of it.