You Can Finally Tell When An Image Is An AI Fake Made By DALL-E

We've been talking about the problems fake AI photos can create for months, ever since it became clear that AI image generators could produce pictures indistinguishable from reality. That's also why I'm uneasy about how easily Google's AI photo tools let you alter your memories until they no longer look like what you actually photographed.

These tools can be used for malicious purposes, like faking photos of candidates during a big election year. People who don't yet realize how convincing AI imagery has become could easily be fooled.

Then, a few weeks ago, fake AI-generated pornographic images of Taylor Swift started circulating online. It must have been an incredibly painful experience for the beloved music star. The flip side is that the world is now far more aware of how realistic these AI fakes can be.

I'm not saying the Taylor Swift AI scandal is the reason why OpenAI has just announced that it will watermark AI photos created with ChatGPT and Dall-E 3. Or that Meta is taking a similar approach on its social platforms because of the explicit AI content that made the rounds in the past week. But it's all happening now, much later than it should have.

OpenAI announced the changes in a help document. The company is adopting the C2PA content provenance standard that Adobe and others announced in October. C2PA stands for the Coalition for Content Provenance and Authenticity, a consortium of companies developing ways to identify where the content you see online comes from, including AI-generated photos, videos, and other media.

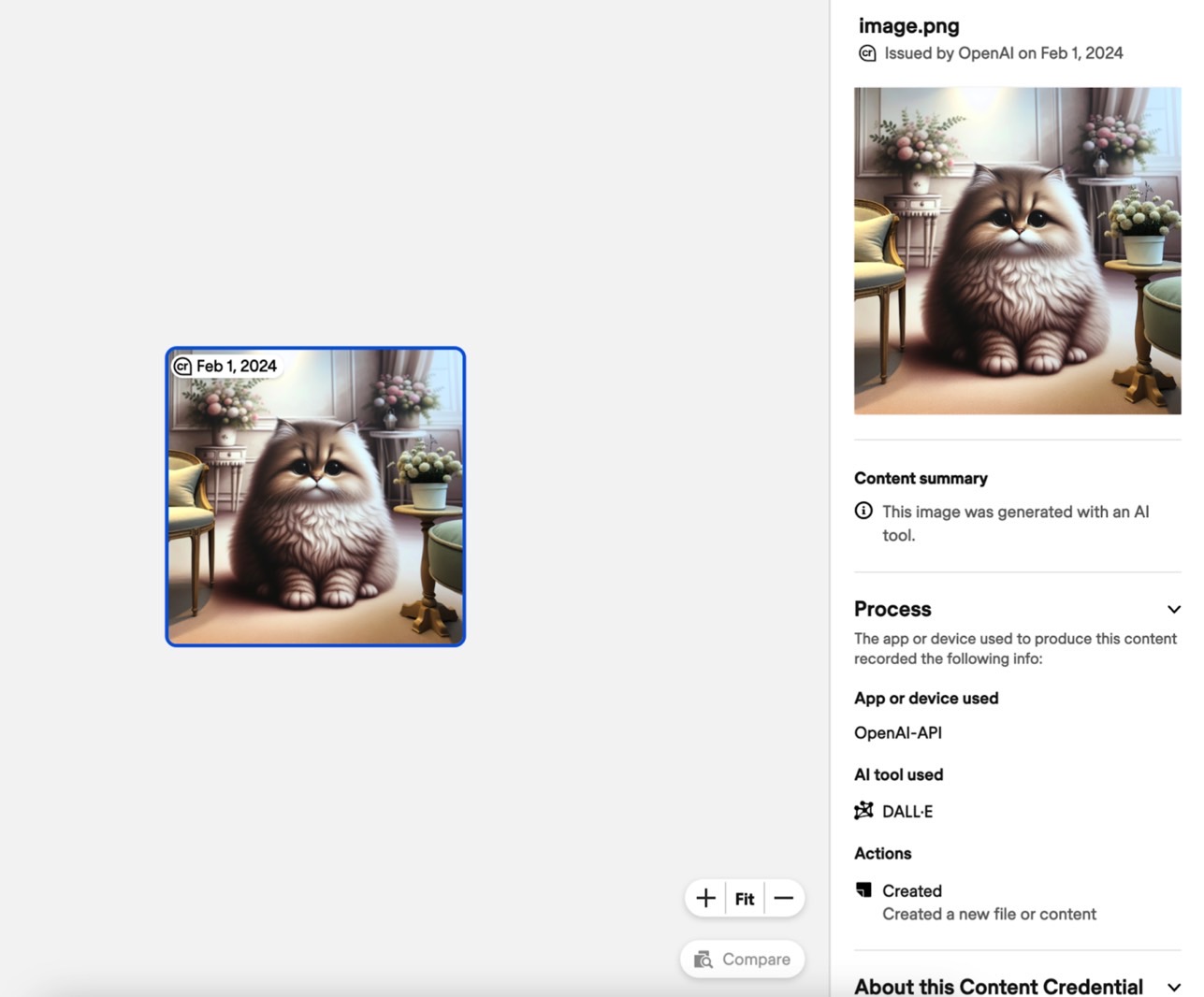

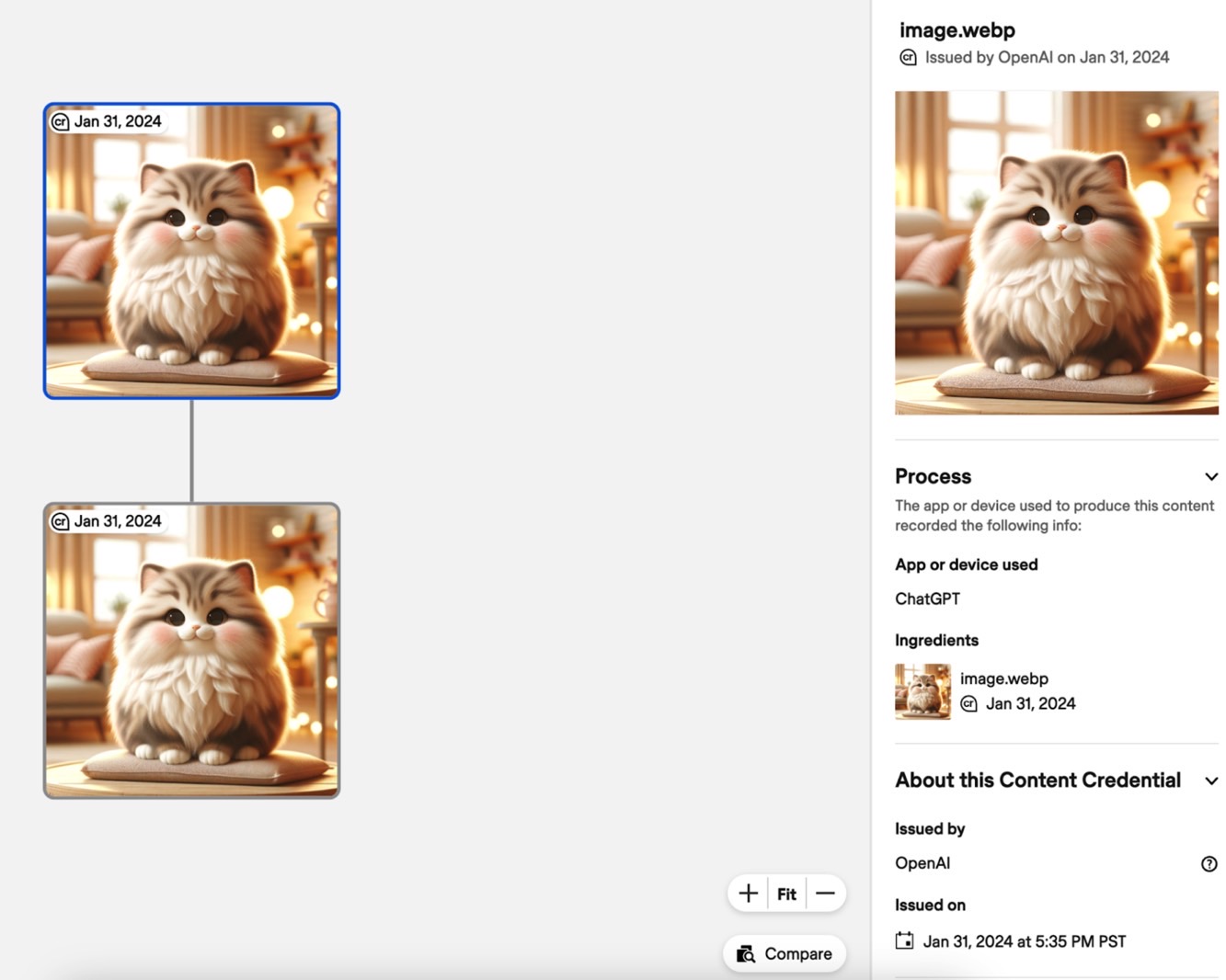

OpenAI will start flagging images created with ChatGPT and Dall-E by placing a "CR" symbol in the top-left corner, like in the examples in this post. CR stands for Content Credentials, a watermark initiative that comes from the same C2PA group.

Watermarks can be removed, of course. We saw it happen with the watermarks Samsung places on AI-edited Galaxy S24 photos. That's why OpenAI images will also carry metadata that specifies where a picture came from. You'll see ChatGPT or Dall-E listed in the description.

But metadata can also be removed, as OpenAI itself cautions:

> Metadata like C2PA is not a silver bullet to address issues of provenance. It can easily be removed, either accidentally or intentionally. For example, most social media platforms today remove metadata from uploaded images, and actions like taking a screenshot can also remove it. Therefore, an image lacking this metadata may or may not have been generated with ChatGPT or our API.
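To see why stripping metadata is so easy, here's a minimal Python sketch. It assumes a JPEG file, where the C2PA specification places the manifest in APP11 marker segments; other formats such as PNG store it differently. The function names are hypothetical and not part of any official C2PA library. Searching for the "c2pa" label only hints that a manifest is present; real verification means validating the manifest's cryptographic signature, which this sketch does not attempt.

```python
import struct

# Assumption: as the C2PA specification describes for JPEG files, the
# manifest travels as JUMBF data inside APP11 (0xFFEB) marker segments.
APP11 = 0xEB
SOS = 0xDA  # "start of scan": compressed pixel data follows this header


def iter_segments(data: bytes):
    """Yield (marker, start, end) for each JPEG header segment up to and including SOS."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        # The 2-byte big-endian length counts itself but not the 0xFF/marker bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        end = pos + 2 + length
        yield marker, pos, end
        if marker == SOS:
            return
        pos = end


def has_c2pa_manifest(data: bytes) -> bool:
    """Heuristic only: does any APP11 segment mention the 'c2pa' label? No signature check."""
    return any(
        marker == APP11 and b"c2pa" in data[start:end]
        for marker, start, end in iter_segments(data)
    )


def strip_app11(data: bytes) -> bytes:
    """Drop every APP11 segment, leaving the image data itself untouched."""
    out = bytearray(data[:2])  # keep the SOI marker
    last_end = 2
    for marker, start, end in iter_segments(data):
        if marker != APP11:
            out += data[start:end]
        last_end = end
    out += data[last_end:]  # scan data and EOI are copied byte for byte
    return bytes(out)


def make_segment(marker: int, payload: bytes) -> bytes:
    """Build one marker segment (used below to fake a tiny test file)."""
    return b"\xff" + bytes([marker]) + struct.pack(">H", len(payload) + 2) + payload


if __name__ == "__main__":
    # A synthetic, non-displayable "JPEG" whose APP11 payload merely contains the label.
    fake = (
        b"\xff\xd8"
        + make_segment(0xE0, b"JFIF\x00")
        + make_segment(APP11, b"JP\x00\x01jumbc2pa")
        + make_segment(SOS, b"\x01\x02")
        + b"\x11\x22\xff\xd9"
    )
    print(has_c2pa_manifest(fake))               # True
    print(has_c2pa_manifest(strip_app11(fake)))  # False
```

Dropping a few bytes is all it takes: the pixel data is copied unchanged, which is exactly why, as OpenAI says, an image without this metadata proves nothing either way.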

Still, OpenAI finally watermarking AI images is a good start. Sure, some of the watermarks and metadata will be erased. But more people will be exposed to them, and they'll at least learn that some of the images they see online might be fake.

The work has just started for OpenAI. It will only mark ChatGPT and Dall-E images as AI creations, not text or voice. But all images you generate with ChatGPT and Dall-E on the web now include the metadata. The mobile versions of the apps will get the same feature by February 12th.

OpenAI also notes that files will be slightly larger now that they include watermarks and metadata.

Separately, Meta announced plans to identify AI content on Facebook, Instagram, and Threads, including video and audio. The company plans to label AI images whose provenance it can detect, including C2PA-marked ones.

Meta will also add "Imagined with AI" labels to photos created with its own AI and embed invisible watermarks in them.

This will be an ongoing effort, and it'll take time until Meta can apply AI labels to AI-generated content.