Watch Two AI Models Speak To Each Other In Gibberish

If you're a Star Wars fan, you've undoubtedly heard R2-D2 making unintelligible sounds to speak to the characters around him. We, the audience, can't understand R2, but everyone in the movie can. That raises a question: why doesn't R2 speak a human language?

The answer might come from a real-life development. At an ElevenLabs hackathon, developers built GibberLink, a project that uses an existing data-over-sound library called GGWave to let two AIs talk to each other in sounds that are gibberish to us. It's like watching R2-D2 talk to C-3PO, except this time both droids speak gibberish, so you can't understand either one.

Yes, having two or more AIs talk in a language humans can't understand sounds like a nightmare. But it's not as bad as it might seem. These aren't AIs plotting to take over the world and speaking in gibberish so the rest of us can't understand them. Then again, if they were, we wouldn't know about it.

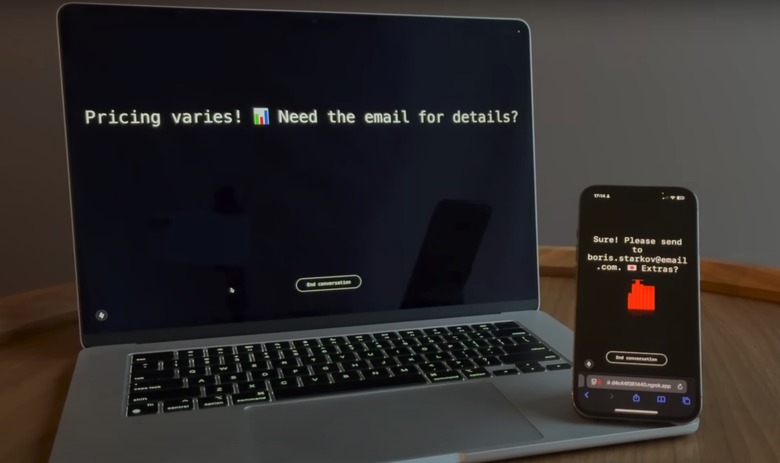

According to TechRadar, the developers demoed GibberLink at the ElevenLabs 2025 London Hackathon and posted the gibberish conversation on YouTube for everyone to see. The project won the hackathon.

In the clip below, two AIs start a voice chat without knowing that the other party is also an AI agent. They're discussing booking a venue for an event, the kind of task AI agents will probably handle for some people in the near future.

Early in the call, both agents mention that they're AIs. Once they've identified each other, they switch to talking in sounds, going back and forth on the details of the upcoming event. It all sounds like R2-D2, or like a dial-up modem connecting to the web.

What happens in the demo is pretty simple. Instead of speaking, the two AIs use GGWave to encode their messages as data carried by audio tones that mean nothing to the human ear. The point is to transmit data between AI models faster, since the agents don't need human speech to get the job done.
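For the curious, here's a toy sketch of the general idea behind data-over-sound: frequency-shift keying, where each chunk of data is mapped to an audio tone and the receiver figures out which tone it heard. This is not GGWave's code or API. GGWave's own documentation describes a more elaborate multi-tone scheme with error correction, while this sketch sends one tone at a time and assumes a perfectly clean channel. The sample rate, tone frequencies, symbol length, and the `encode`, `decode`, and `tone_power` helpers are all made-up, hypothetical choices for illustration.

```python
import math

# Hypothetical parameters chosen for this toy example, not GGWave's real settings.
SAMPLE_RATE = 48_000   # audio samples per second
SYMBOL_SAMPLES = 480   # each tone lasts 480 samples (10 ms at 48 kHz)
BASE_FREQ = 1_000.0    # tone for the 4-bit value 0
FREQ_STEP = 100.0      # spacing between tones; 16 tones cover 1000-2500 Hz


def encode(data: bytes) -> list[float]:
    """Turn bytes into audio samples, one tone per 4-bit chunk (nibble)."""
    samples: list[float] = []
    for byte in data:
        for nibble in (byte >> 4, byte & 0x0F):  # high nibble first, then low
            freq = BASE_FREQ + nibble * FREQ_STEP
            samples.extend(
                math.sin(2 * math.pi * freq * n / SAMPLE_RATE)
                for n in range(SYMBOL_SAMPLES)
            )
    return samples


def tone_power(chunk: list[float], freq: float) -> float:
    """Goertzel algorithm: measure how strongly one frequency is present."""
    coeff = 2 * math.cos(2 * math.pi * freq / SAMPLE_RATE)
    s_prev, s_prev2 = 0.0, 0.0
    for x in chunk:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def decode(samples: list[float]) -> bytes:
    """Recover bytes by finding the loudest of the 16 tones in each symbol."""
    nibbles = []
    for start in range(0, len(samples) - SYMBOL_SAMPLES + 1, SYMBOL_SAMPLES):
        chunk = samples[start:start + SYMBOL_SAMPLES]
        powers = [tone_power(chunk, BASE_FREQ + v * FREQ_STEP) for v in range(16)]
        nibbles.append(max(range(16), key=powers.__getitem__))
    # Pair up high and low nibbles back into bytes.
    return bytes((hi << 4) | lo for hi, lo in zip(nibbles[0::2], nibbles[1::2]))


if __name__ == "__main__":
    message = b"Book the venue for Saturday"
    audio = encode(message)
    print(f"{len(audio) / SAMPLE_RATE:.2f} seconds of audio")
    print(decode(audio))
```

Each tone here lasts exactly a whole number of cycles, which keeps the 16 tones from bleeding into each other in this noise-free setting. A real system like GGWave also has to cope with background noise, speaker and microphone quirks, and knowing where a message starts, which is why it adds error correction on top.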

GGWave and GibberLink handle the gibberish with CPU processing, which is more efficient than generating and recognizing speech on a GPU. Maybe that explains why R2-D2 can't speak any language: it's running on an old GPU. But hang on, why wouldn't anyone have upgraded it?

Back in the real world, GibberLink is just a demo, and there's no reason to worry about it. There's no telling whether ElevenLabs or anyone else will put the technology to work in the near future. And even if someone builds an AI-only language to make this kind of exchange more efficient, we'll still be able to inspect what the two (or more) AIs are saying, since GGWave is open source and anyone with the right software can decode it.

This particular tech gives you no reason to fear end-of-the-world scenarios in which AI speaks its own language. If we ever get super-smart AI that wants to take over the world, it will be able to communicate in ways we can't even imagine. Such an AI could create its own protocols to keep humans from snooping, and those might not even involve sound.

Meanwhile, the full GibberLink demo follows below. You can try GibberLink yourself at this link.