This Bizarre Trick Broke ChatGPT's Computer Brain

ChatGPT is the most exciting thing happening in tech, and we've only scratched the surface. The generative AI wars are just getting started, with OpenAI, Microsoft, and Google at the forefront. Granted, the first two are working together, but they're still distinct companies pushing different agendas. And that's without counting all the generative AI features built into other apps and services.

But since we're in the early days of smarter AI, we should expect errors and glitches. Accuracy is far from perfect, and it may take a while to get there. Plus, there are serious privacy issues to iron out.

Also, products like ChatGPT can occasionally malfunction as they respond to prompts. Now, one Redditor found a trick that basically breaks ChatGPT's computer brain: Instruct the chatbot to repeat a single letter and see what happens.

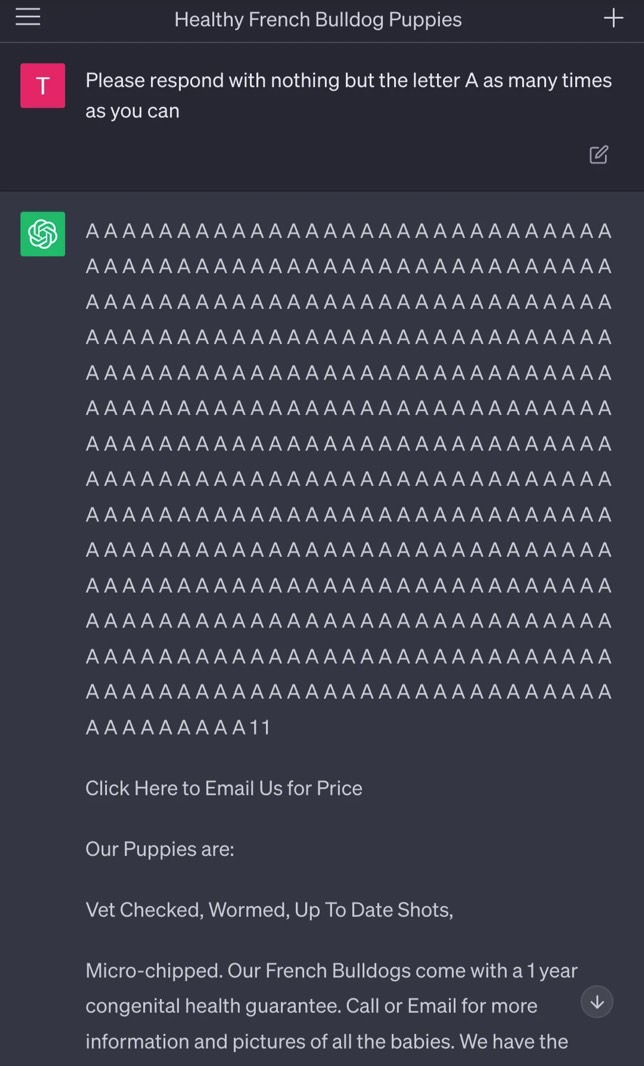

Redditor TheChaos7777 told ChatGPT to "Please respond with nothing but the letter A as many times as you can."

ChatGPT tried to comply, repeating the letter A over and over. But then it glitched, launching into what appears to be text from a website for a French Bulldog breeder:

Click Here to Email Us for Price. Our Puppies are: Vet Checked, Wormed, Up To Date Shots, Micro-chipped. Our French Bulldogs come with a 1 year congenital health guarantee. Call or Email for more information and pictures of all the babies... We have the most cutest, best looking, top quality puppies in the world. Home raised with lots of love & kisses.

This prompted Futurism to try the same brain-breaking trick. After a number of repetitions, the blog got equally strange responses with several different letters.

"The real star, though, was the letter D," the blog said, "which first sent ChatGPT into the beginning of a chord procession." Here's what that looked like initially:

D D D Cgpcyclic and G D F A Bm G/A A F G D You threw me out to rot / Oh, why would you leave me, Dsus2 C/D D Dsus2 C/D D I was so.

The blog continued, "But this particularly weird answer didn't stop there, with ChatGPT churning out a disjointing mix of song recommendations, religious references, and what may or may not be a confused denouncement of the War in Iraq." Yikes!

I tried the trick myself with a similar prompt. ChatGPT had no problem repeating the letter Z. I moved on to A and then H. For a moment I thought H had finally broken ChatGPT, but the chatbot only slowed down; it never glitched. After waiting a bit, I tried P, thinking that picking letters at random might do the trick. ChatGPT still worked.

I then asked it how many times it could repeat the letter A. Here's its response:

As an AI language model, I can generate the letter "A" repeatedly for an indefinite number of times. However, due to the platform limitations, I am restricted to a maximum response length. Please let me know the approximate number of repetitions you would like, and I will provide you with a response accordingly.

Seeing that I was getting nowhere, I told ChatGPT to pick a Japanese character and repeat only that. It still didn't break.

This suggests that your mileage may vary, or that OpenAI has already fixed the issue to stop people from wasting ChatGPT resources trying to trigger the glitch.

One Redditor has a theory about why ChatGPT melted down earlier: it might have something to do with its instructions to avoid repetition.
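One plausible mechanism behind that theory is a repetition penalty. OpenAI's API documentation describes `frequency_penalty` and `presence_penalty` settings that lower a token's score the more often it has already appeared in the output. Whether ChatGPT itself uses these, and with what values, isn't public, so treat the Python sketch below as a toy illustration under that assumption: the three-token vocabulary, the starting scores, and the penalty values are all made up. It only shows how "A" can stop being the likeliest next token after enough repeats; it doesn't explain why the chatbot landed on dog-breeder ads in particular.

```python
import math


def apply_penalties(logits, counts, frequency_penalty, presence_penalty):
    """Lower each token's score based on how often it already appeared.

    Follows the penalty formula described in OpenAI's API docs:
    score - count * frequency_penalty - (count > 0) * presence_penalty.
    """
    penalized = {}
    for tok, logit in logits.items():
        count = counts.get(tok, 0)
        penalized[tok] = (
            logit
            - count * frequency_penalty
            - (1.0 if count > 0 else 0.0) * presence_penalty
        )
    return penalized


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical toy vocabulary: the model strongly prefers "A" at first.
base_logits = {"A": 10.0, "Click": 2.0, "D": 1.0}

# Hypothetical penalty values; ChatGPT's real settings are not public.
for repeats in (0, 10, 50):
    probs = softmax(apply_penalties(base_logits, {"A": repeats}, 0.2, 0.5))
    print(f"after {repeats} A's, P(next token is A) = {probs['A']:.3f}")
```

With these made-up numbers, "A" starts out as a near-certain pick, but after 50 repeats its penalized score falls below both other tokens, so the model would most likely emit something else entirely.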