Spain's New Anti-Deepfake Law Imposes Massive Fines for Unlabeled AI Content

Since ChatGPT first went viral and made generative AI a must-have tool, I've said I'm not worried about an AI apocalypse or AI taking our jobs. These are real problems that we have to deal with, yes, but there was a more pressing AI issue that needed fixing — and it still does.

In their hurry to woo the world with new AI tricks, AI companies left and right came up with all sorts of generative AI features, including tools that let you edit images, create fake photos, and generate audio and video with a text prompt. Most of them shipped these features without fixing a glaring problem: AI-generated images and videos can be abused to mislead the public.

We've seen tech firms develop standards for labeling AI-generated content, but their solutions still fall short. AI content isn't immediately obvious, and it's not always watermarked. You might have to inspect a file to see whether metadata mentions AI.
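For the curious, here is a minimal Python sketch of what that kind of inspection could look like. It assumes the file carries provenance metadata as plain text and simply searches the raw bytes for marker strings used by the IPTC digital source type vocabulary and by C2PA Content Credentials. The function names and the marker list are my own illustration, not any company's official tool, and a missing marker proves nothing, since metadata is easily stripped.

```python
from pathlib import Path

# Illustrative marker list (an assumption, not an exhaustive or official set).
# Matching is case-sensitive, so the two IPTC terms do not overlap.
AI_MARKERS = {
    b"trainedAlgorithmicMedia": "IPTC source type: media created by a trained model",
    b"compositeWithTrainedAlgorithmicMedia": "IPTC source type: composite that includes AI-generated elements",
    b"c2pa": "C2PA manifest label (provenance data present, not proof of AI by itself)",
}


def find_ai_markers(data: bytes) -> list[str]:
    """Return a description for every known marker found in the raw bytes."""
    return [description for marker, description in AI_MARKERS.items() if marker in data]


def scan_file(path: str) -> list[str]:
    """Read a file from disk and run the same heuristic scan on its contents."""
    return find_ai_markers(Path(path).read_bytes())
```

Calling `scan_file("photo.jpg")` on a hypothetical file returns a list of matching descriptions, or an empty list when none of the markers are present. A real checker would parse the XMP or C2PA structures properly rather than rely on a byte search.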

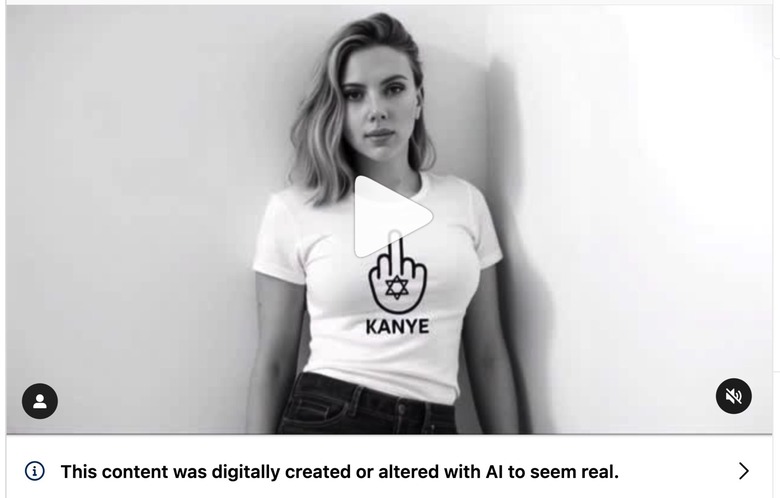

The ease of use of genAI products to edit images and create videos from scratch means anyone can make content without having knowledge of advanced software, which was a prerequisite in the pre-ChatGPT era. That's how we end up with deepfakes like the anti-Kanye clip that went viral a few weeks ago. The clip featured a deepfake of Scarlett Johansson, which the actress quickly criticized, urging the US government to pass legislation to prevent such abuse. That clip went viral just a few days after the US refused to sign an AI safety statement at the AI Action Summit in Paris.

While the Trump administration made it clear that it's not interested in heavily regulating AI, other countries are taking a different approach. Spain's government has approved a bill that would hit companies with fines of up to $38 million, or 7% of their annual turnover, for AI content that's not properly labeled as such.
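To put those numbers in perspective, here is a rough Python sketch of the ceiling. It assumes the larger of the two amounts applies, which is how the EU's AI Act words its top-tier fines. The report only says "or", so treat that as my assumption. The function name and the sample turnover figures are hypothetical.

```python
FLAT_CAP_USD = 38_000_000  # fixed ceiling cited in the article
TURNOVER_PERCENT = 7       # share of annual turnover cited in the article


def max_fine_usd(annual_turnover_usd: int) -> int:
    """Upper bound on the fine, ASSUMING the higher of the two ceilings applies."""
    turnover_based = annual_turnover_usd * TURNOVER_PERCENT // 100
    return max(FLAT_CAP_USD, turnover_based)


# Hypothetical companies: one with $100 million in turnover, one with $1 billion.
print(max_fine_usd(100_000_000))    # 7% is only $7 million, so the flat cap applies
print(max_fine_usd(1_000_000_000))  # 7% is $70 million, which exceeds the flat cap
```

Under that assumption, the percentage only starts to matter once a company's turnover passes roughly $543 million, which is well within reach of the tech giants this bill targets.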

According to Reuters, the Spanish government approved the bill on Tuesday in a bid to curb the use of deepfakes.

"AI is a very powerful tool that can be used to improve our lives ... or to spread misinformation and attack democracy," Digital Transformation Minister Oscar Lopez told reporters. He also said the bill adopts guidelines from the EU's AI Act.

The official added that everyone was susceptible to deepfake attacks, whether video, photos, or audio generated by AI programs.

The proposed legislation also bans other AI practices, like using subliminal techniques to manipulate people. Lopez mentioned chatbots that would encourage people with addictions to gamble or toys telling children to perform dangerous challenges.

Also important are the bill's provisions preventing companies from classifying people through their biometric data using AI. Under the law, companies would also be forbidden from using AI to rate people based on their behavior and personal traits in order to grant them access to benefits or assess their risk of committing a crime.

Only authorities would be allowed to use real-time biometric surveillance in public places, and only for security reasons.

The bill still needs to be approved by the lower house of Spain's parliament. Once it becomes law, companies deploying AI tools in Spain will risk those multimillion-dollar fines for not labeling AI content.

The bill will not ban deepfakes — that's not the point here. Instead, it'll pressure AI firms to come up with better tech to automatically label AI-generated content.

It'll be interesting to see what sort of labeling the Spanish watchdogs want to see implemented and how tech giants will react. Also, I can't help but wonder whether the law will serve as inspiration for new EU legislation covering deepfakes and AI abuse across the entire region.

As a reminder, the EU's stricter tech laws have prevented companies like OpenAI, Google, and Apple from releasing new AI products in the region as fast as in the US and other international markets. Pressure from the Spanish law could lead to more delays in the future.

Then again, I'd prefer laws from regulators that force companies to label AI content more prominently over having immediate access to new AI tools. I say that as someone who has had to wait for plenty of ChatGPT features to roll out in the EU in the past few years.

Back in Spain, the government has also created a new agency called AESIA to enforce the new rules. That's in addition to other watchdogs that might monitor AI use in specific cases involving data privacy, crime, elections, and other areas.