Sam Altman's Tone-Deaf Response To ChatGPT Stealing Content And Creating Deepfakes

I wasn't expecting a ChatGPT product to go viral in late March, but here we are. OpenAI stunned the world with the release of GPT-4o image generation, a new AI model that's built right into ChatGPT, giving users incredibly advanced image generation features.

Send a detailed prompt to the AI, and ChatGPT will immediately draw a mind-blowing image based on your instructions. The pictures can contain legible text, which is impressive and a first for ChatGPT image generation. The tool can also take real photos and edit them however you want.

The problem is that OpenAI made ChatGPT's new image generation tool available to premium users without strong safety guardrails. The web was immediately flooded with ChatGPT-made deepfakes of celebrities, along with a ton of Studio Ghibli-inspired AI drawings.

ChatGPT doesn't even place a visible watermark on its creations to tell viewers they're AI-generated. Embedded metadata isn't a good enough safety feature, not when anyone can create these fakes. Gemini might remove watermarks from copyrighted images, but at least it places its own watermark on the results.

What's worse is Sam Altman's tone-deaf response to all of this. The OpenAI CEO is embracing all the praise the ChatGPT AI tool got, which is certainly deserved, without actually committing to better safeguards.

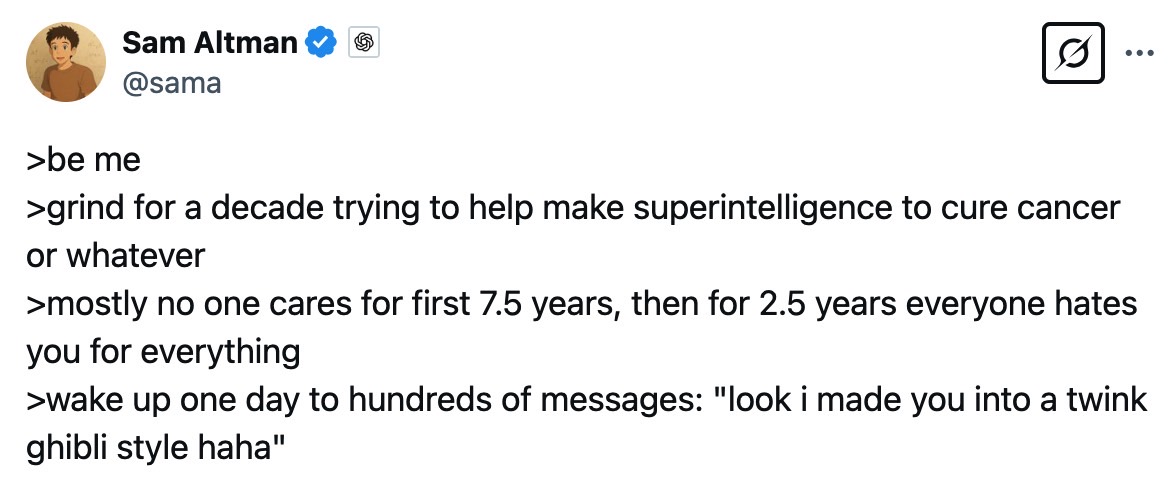

Here's the message Altman posted on X shortly after the release of 4o image generation:

>be me

>grind for a decade trying to help make superintelligence to cure cancer or whatever

>mostly no one cares for first 7.5 years, then for 2.5 years everyone hates you for everything

>wake up one day to hundreds of messages: "look i made you into a twink ghibli style haha"

The message accompanied a change to Altman's X profile picture, as seen in the following image. Altman replaced his photo with a ChatGPT-created Ghibli-style version of himself.

It's all fun and games right now because we've yet to see real abuse. But make no mistake, people will abuse the tool to create deepfakes that can fool unsuspecting people, especially less tech-savvy people and those in countries where AI might not be used as widely.

It's not just ChatGPT that suffers from this safety issue. Google has its own advanced image generation tools for Gemini that can also be used to create fakes with ease.

But OpenAI deliberately chose not to impose stricter rules. OpenAI's initial announcement includes a section on safety that addresses certain types of abuse, and that's commendable. ChatGPT's images also carry C2PA metadata that identifies them as AI-generated, but simply taking a screenshot of an image strips that data.

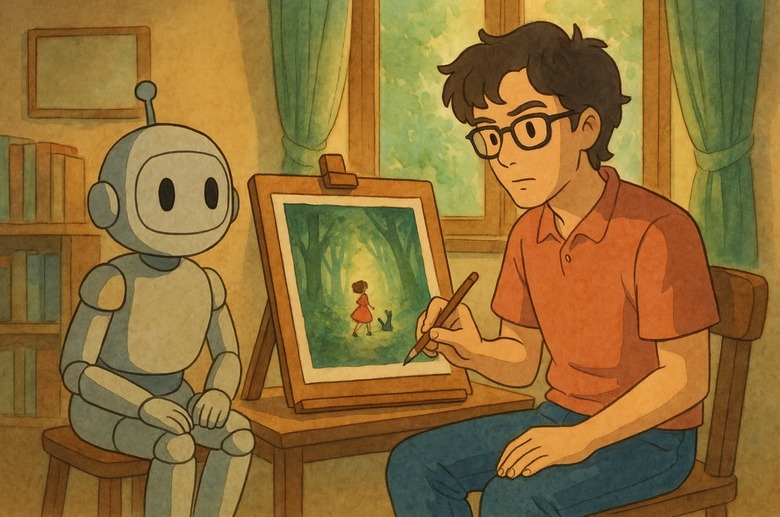

Take the top image in this post: it's a screenshot of a Ghibli-style image that ChatGPT generated from the following prompt:

Make me a Studio Ghibli-style image based on what we just talked. I want it in 16:10 aspect ratio, you have freedom to imagine me and yourself

Also, ChatGPT blocks specific requests, "such as child sexual abuse materials and sexual deepfakes." OpenAI also said, "when images of real people are in context, we have heightened restrictions regarding what kind of imagery can be created, with particularly robust safeguards around nudity and graphic violence." But this doesn't change the fact that any ChatGPT user can now create deepfakes that can be abused. They don't have to be sexual to be potentially dangerous.

OpenAI could do more here, but it chooses not to, despite knowing full well that some of the images coming from ChatGPT will look like real photos. Here's what Altman said on X after the tool was released:

we are launching a new thing today—images in chatgpt!

two things to say about it:

1. it's an incredible technology/product. i remember seeing some of the first images come out of this model and having a hard time they were really made by AI. we think people will love it, and we are excited to see the resulting creativity.

congrats to our researchers @gabeeegoooh @prafdhar @ajabri @eliza_luth @kenjihata @dmed256

2. this represents a new high-water mark for us in allowing creative freedom. people are going to create some really amazing stuff and some stuff that may offend people; what we'd like to aim for is that the tool doesn't create offensive stuff unless you want it to, in which case within reason it does. as we talk about in our model spec, we think putting this intellectual freedom and control in the hands of users is the right thing to do, but we will observe how it goes and listen to society. we think respecting the very wide bounds society will eventually choose to set for AI is the right thing to do, and increasingly important as we get closer to AGI. thanks in advance for the understanding as we work through this.

There's also the obvious elephant in the room that Altman doesn't even address. ChatGPT's image-generation powers have just made graphic designers obsolete. Or close to it. This was always bound to happen, but AI firms like OpenAI should at least try to pretend they care about the impact of their products on jobs and life as we know it.

Getting back to all the Ghibli-ness in the ChatGPT images I see on social media, I'll also note OpenAI's disregard for copyright here. ChatGPT won't draw characters that belong to Studio Ghibli, sure, but it'll copy the style and adapt it to anything you want.

It's no wonder that Hayao Miyazaki's reaction to using AI for animation has resurfaced online this week. The Studio Ghibli cofounder called AI-generated animation an "insult to life itself" when he was shown an AI-driven animation demo back in 2016. I'm sure he's not too happy to see ChatGPT copy his company's style so easily.

That's not to say that AI can't be used for creative purposes or that ChatGPT's new image generation tech isn't impressive, because it is.

Also, in ChatGPT's defense, I saw safety protections in my tests, including copyright-related ones. But this doesn't change the fact that OpenAI makes it incredibly easy for anyone to create deepfakes that look almost indistinguishable from real photos, and the company's CEO doesn't seem to care.