Researchers Recreated DeepSeek's Core Technology For Just $30

A group of researchers at the University of California, Berkeley, say they've recreated the core technology found in China's revolutionary DeepSeek AI for just $30. This extremely cheap DeepSeek recreation is yet another indicator that while models from larger companies have been impressive, there may be much more affordable ways to build them.

Led by Ph.D. candidate Jiayi Pan, the team replicated DeepSeek R1-Zero's reinforcement learning capabilities using a small language model with just 3 billion parameters. Despite its relatively modest size, the AI demonstrated self-verification and search abilities, key features that allow it to refine its own responses iteratively.

To test their DeepSeek recreation, the Berkeley team used the Countdown game, a numerical puzzle based on the British game show Countdown, in which players must use arithmetic to reach a target number. Initially, the model produced random guesses, but through reinforcement learning, it developed techniques for self-correction and iterative problem-solving.
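Reinforcement learning on Countdown needs an automatic way to score each attempt. The article does not describe the Berkeley team's scoring rule, so the sketch below is a hypothetical rule-based reward. It assumes the common Countdown convention that each given number may be used at most once, combined with +, -, × and ÷. The names `countdown_reward` and `_evaluate`, and the 1.0/0.0 scores, are illustrative choices, not the team's code.

```python
import ast
from collections import Counter

# Hypothetical Countdown reward: 1.0 if the expression uses only the given
# numbers (each at most once) with +, -, *, / and hits the target, else 0.0.
_OPS = {
    ast.Add: lambda a, b: a + b,
    ast.Sub: lambda a, b: a - b,
    ast.Mult: lambda a, b: a * b,
    ast.Div: lambda a, b: a / b,
}


def _evaluate(node, used):
    """Evaluate an arithmetic AST, appending every integer literal to `used`."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body, used)
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        left = _evaluate(node.left, used)
        right = _evaluate(node.right, used)
        return _OPS[type(node.op)](left, right)
    if isinstance(node, ast.Constant) and type(node.value) is int:
        used.append(node.value)
        return node.value
    # Anything else (names, calls, unary minus, floats) is rejected.
    raise ValueError("unsupported syntax")


def countdown_reward(expression, numbers, target):
    """Score one model answer for a Countdown puzzle."""
    try:
        used = []
        value = _evaluate(ast.parse(expression, mode="eval"), used)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return 0.0
    # Counter subtraction leaves only over-used or foreign numbers.
    if Counter(used) - Counter(numbers):
        return 0.0
    return 1.0 if abs(value - target) < 1e-9 else 0.0


print(countdown_reward("(25 - 5) * 4", [25, 5, 4, 7], 80))  # 1.0
print(countdown_reward("40 + 40", [2, 40], 80))  # 0.0: 40 used twice
```

A pass/fail signal like this scores only the final answer. It does not tell the model how to reach that answer, so any checking or revising behavior has to be learned during training.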

Eventually, it learned to revise its answers until it arrived at the correct solution. They also experimented with multiplication, where the AI broke down equations using the distributive property, much like humans might mentally solve large multiplication problems. This demonstrated the model's ability to adapt its strategy based on the problem.
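The distributive-property strategy can be illustrated with a small hand-written sketch. This is not output from the Berkeley model, and `distributive_multiply` is a hypothetical helper. The second factor is split into place-value parts, each part is multiplied separately, and the partial products are summed, so 47 × 23 becomes 47 × 20 + 47 × 3 = 940 + 141 = 1081.

```python
def distributive_multiply(a, b):
    """Compute a * b as a sum of partial products over b's place-value parts."""
    if b < 0:
        raise ValueError("this sketch assumes a non-negative second factor")
    parts = []
    place = 1
    remaining = b
    while remaining > 0:
        digit = remaining % 10
        if digit:  # skip zero digits, e.g. 305 -> 300 + 5
            parts.append(digit * place)
        remaining //= 10
        place *= 10
    parts.reverse()  # largest place value first: 23 -> [20, 3]
    partial_products = [a * part for part in parts]
    return parts, partial_products, sum(partial_products)


print(distributive_multiply(47, 23))  # ([20, 3], [940, 141], 1081)
```

Splitting by place value is only one way to apply the distributive property. It is shown here because it mirrors the way people commonly break down large products in their heads.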

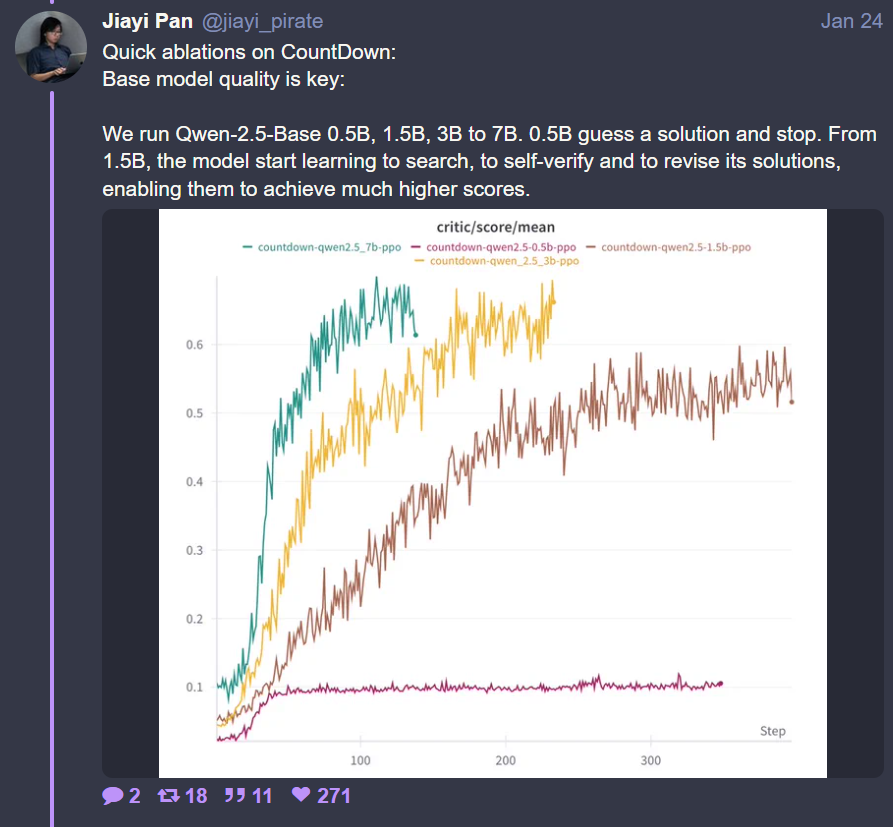

What's particularly impressive is that the entire recreation cost them just $30, Pan claims in a post on X. That is a mind-bogglingly small fraction of what leading AI firms spend on large-scale training. The researchers tested multiple model sizes, starting with a 500-million-parameter model that could only guess and stop, regardless of accuracy.

When scaled to 1.5 billion parameters, the DeepSeek recreation began incorporating revision techniques. Models between 3 and 7 billion parameters showed significant improvement, solving problems in fewer steps with better accuracy, Pan and the other researchers report.

For some context, at the time of writing, OpenAI charges $15 per million tokens via its API, while DeepSeek charges just $0.55 per million tokens. The Berkeley team's findings suggest that highly capable AI models can be developed for a fraction of the cost currently invested by leading AI companies. Despite DeepSeek's low prices, however, there are many reasons you should probably avoid it.

One reason is that some experts are skeptical about DeepSeek's claimed affordability. AI researcher Nathan Lambert has questioned whether DeepSeek's reported $5 million training cost for its 671-billion-parameter model reflects the full picture. In fact, Lambert estimates that DeepSeek's annual operational expenses could be anywhere from $500 million to more than $1 billion once infrastructure, energy consumption, and research personnel costs are taken into account. OpenAI also claims it has evidence that DeepSeek was trained using ChatGPT, which could help account for some of the reduced costs.

The AI also sends a lot of data back to China, which is certainly cause for concern and is already leading to DeepSeek bans throughout the U.S.

Even so, the Berkeley team's work shows that cutting-edge reinforcement learning can be achieved without the enormous budgets that industry giants like OpenAI, Google, and Microsoft currently allocate. With some AI labs spending up to $10 billion annually on training models, this research points to what could become a disruptive shift in the field.