New Apple Watch Feature Might Use AI To Predict Health Problems

I bought the Apple Watch Series 10 last fall because I'm a runner and I wanted the wearable to track all my health data while I train and rest. For me, it's not just about keeping tabs on my running stats or trying to shave a few minutes off my marathon time.

I went with the more expensive Apple Watch Series 10 instead of replacing my Apple Watch SE's battery because I wanted the newer model's more advanced health sensors. I'm at an age where I'm more conscious of my health, and I want tools like the Apple Watch to gather as many health metrics as possible.

If health problems do develop down the line, I'm hoping all the Apple Watch data in the Health app, combined with advanced AI tools, will provide early warnings so I can act right away. That long-term data might also help doctors by giving them years of health history to review.

There's a missing piece in all this. The Apple Watch collects a massive amount of health data during training, rest, and sleep. But the current watchOS and iOS software can't yet bring all those data points together and analyze them in one place.

I don't want the Apple Watch to simply tell me that my heart rate is too high. I want it to consider how I trained, what I ate, and how I slept, and then make a more informed judgment. Knowing about existing medical conditions, medications, and other factors could help the Apple Watch explain its warnings better. It might even catch early signs of developing conditions sooner.

That might sound like wishful thinking for now, but Apple is already working on these kinds of AI features for the iPhone and Apple Watch. The company published a new study detailing an AI model that interprets overall well-being by analyzing a wide range of health data instead of just individual signals from the Watch's sensors.

The AI model

The study is titled "Beyond Sensor Data: Foundation Models of Behavioral Data from Wearables Improve Health Predictions."

Apple researchers trained an AI model on health data from more than 160,000 Apple Watch users, calling it the wearable health behavior foundation model (WBM). The system processed 15 billion hours of data so the AI could learn what healthy patterns look like and how they shift with different conditions.

Here are the categories of behavioral data the researchers collected:

- Activity (8 variables): active energy (estimated calories burned), basal energy, step count (phone and watch), exercise time, standing time, and flights climbed (phone and watch).

- Cardiovascular (4 variables): resting heart rate, walking heart rate average, heart rate, and heart rate variability.

- Vitals (3 variables): respiratory rate (overnight only), blood oxygen, and wrist temperature (overnight).

- Gait / mobility (8 variables): walking metrics (speed, step length, double support percentage, asymmetry percentage, and steadiness score), stair ascent/descent speed, and fall count.

- Body Measurements (2 variables): body mass and BMI.

- Cardiovascular Fitness / Functional Capacity (2 variables): VO2max and six-minute walk distance, both clinically validated measures of fitness and capacity.

The researchers trained WBM on eight Nvidia A100 GPUs for 16 hours, then tested how well it could detect both long-term conditions and week-to-week changes in health.

What the researchers found

Apple scientists found that WBM outperforms algorithms that rely on specific biometric data, like heart rate, when it comes to detecting certain conditions. That's because this AI model looks at all the behavioral data over time, not just one sensor's readings.

For instance, WBM is better at analyzing sleep. As the researchers explain, "Behavioral data capture information from every hour of the week, including overnight periods, where we can infer how long someone was asleep by periods of inactivity (e.g., from step count, exercise minutes, and heart rate)."

By contrast, photoplethysmography (PPG), the technique the Apple Watch uses to gather health data by shining colored light on your skin, only takes measurements a few times a day.
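To make the sleep-inference idea from the quote above a little more concrete, here is a minimal rule-based sketch in Python. It is not how WBM works: the model learns these patterns from data, while this sketch simply flags hours with little movement, no exercise, and a low heart rate, then finds the longest unbroken stretch of such hours. The `HourlySample` record, its field names, and the thresholds in `is_inactive` are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class HourlySample:
    """One hour of aggregated behavioral data (hypothetical schema)."""
    hour: int               # hours since the start of the record
    steps: int              # step count during the hour
    exercise_minutes: int   # exercise minutes logged during the hour
    heart_rate: float       # average heart rate for the hour, in bpm


def is_inactive(s: HourlySample, max_steps: int = 20, max_hr: float = 60.0) -> bool:
    """Treat an hour as 'possibly asleep' when movement and heart rate are both low.

    The default thresholds are illustrative assumptions, not values from the study.
    """
    return s.steps <= max_steps and s.exercise_minutes == 0 and s.heart_rate <= max_hr


def longest_inactive_stretch(samples: list[HourlySample]) -> tuple[int, int]:
    """Return (start_hour, length_in_hours) of the longest run of consecutive inactive hours.

    A missing hour breaks the run, so gaps in the data are never counted as sleep.
    """
    best_start, best_len = 0, 0
    run_start, run_len = 0, 0
    prev_hour = None
    for s in sorted(samples, key=lambda x: x.hour):
        contiguous = prev_hour is not None and s.hour == prev_hour + 1
        if is_inactive(s):
            if run_len > 0 and contiguous:
                run_len += 1                     # extend the current inactive run
            else:
                run_start, run_len = s.hour, 1   # start a new run
            if run_len > best_len:
                best_start, best_len = run_start, run_len
        else:
            run_len = 0                          # any active hour ends the run
        prev_hour = s.hour
    return best_start, best_len


# Synthetic example: quiet, low-heart-rate hours 0-6, then an active day.
day = [
    HourlySample(h, steps=0 if h < 7 else 500, exercise_minutes=0,
                 heart_rate=52.0 if h < 7 else 75.0)
    for h in range(24)
]
print(longest_inactive_stretch(day))  # (0, 7): roughly seven hours of likely sleep
```

Fixed thresholds like these break easily (a quiet evening on the couch can look like sleep), which is one reason a learned model that weighs many signals together has an advantage.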

The researchers also found that WBM detects infection or injury better than sensor-based algorithms. For example, gait and mobility changes are good indicators of injury.

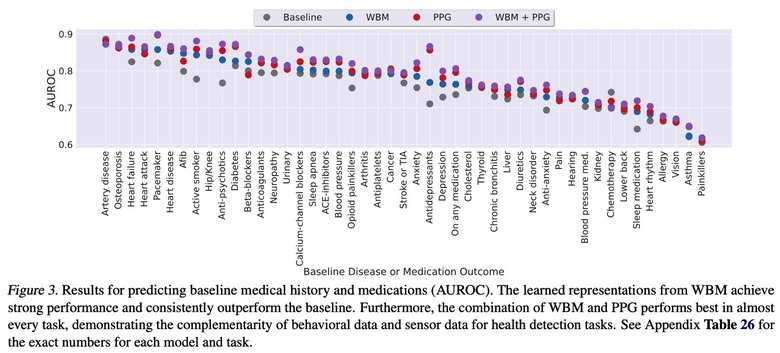

According to the study, WBM beats the PPG-only model on 18 of 47 baseline disease and medication outcomes. The model did especially well at predicting beta blocker use (beta blockers are a class of drugs commonly prescribed for heart conditions).

In some cases, though, PPG data alone still outperformed WBM, like with diabetes and certain medications such as antidepressants, where direct physiological data is more telling.

Combining WBM and PPG delivers the best results. The Apple Watch could detect conditions more accurately by analyzing both behavioral data and sensor readings together, rather than relying on just one source.

In fact, researchers found that combining WBM and PPG is "remarkably performant for pregnancy prediction."

Will we see these Apple Watch AI features in Apple Intelligence?

The WBM model is exactly the kind of feature I hope to see in Apple Intelligence soon. It could help improve my well-being by offering real-time advice. I'd love for the Watch to give me training suggestions based on how I'm doing. It might let me know I'm pushing too hard or not enough. The AI could also tell me I'm underperforming because I didn't sleep well or eat properly.

By analyzing behavioral patterns, the AI might flag early signs of health issues. For instance, it could recommend a cardiology checkup after noticing I'm climbing stairs more slowly and my resting heart rate is rising. Or it might predict diabetes risk based on changes in my diet, weight, and activity levels.

The Vitals feature in watchOS 11 and iOS 18 can already flag unusual overnight readings that may signal an infection, but it's up to you to interpret the data. An AI model could analyze it for you and alert you before symptoms show up. That might help you get a jump on taking medication, hydrating, and resting.

This is just speculation, but I'd love to see Apple bring the WBM model to the iPhone, assuming future research confirms the study's results.