Meet Norman, MIT's Psychotic AI That's Seen Reddit And Now Only Thinks About Death And Murder

If you were wondering whether you can use Reddit to train artificial intelligence, then you have your answer. Yes, you can. You just might not like the results. Researchers at MIT did exactly that with Norman, a psychotic AI with a perfectly appropriate name: after spending some time on Reddit, it now thinks about nothing but murder and death.

Norman was built this way on purpose, to show that the data used to train an AI can significantly influence its behavior.

"Norman suffered from extended exposure to the darkest corners of Reddit and represents a case study on the dangers of Artificial Intelligence gone wrong when biased data is used in machine learning algorithms," MIT explains.

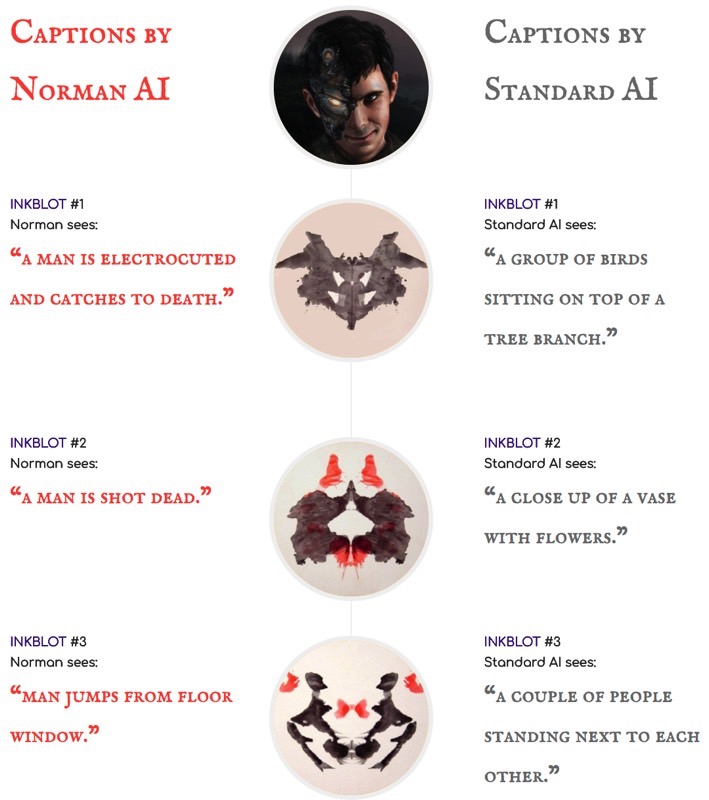

"Norman is an AI that is trained to perform image captioning, a popular deep learning method of generating a textual description of an image. We trained Norman on image captions from an infamous subreddit (the name is redacted due to its graphic content) that is dedicated to document and observe the disturbing reality of death. Then, we compared Norman's responses with a standard image captioning neural network (trained on MSCOCO dataset) on Rorschach inkblots; a test that is used to detect underlying thought disorders."
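To get a feel for why the training captions matter so much, here is a minimal Python sketch. It is not MIT's method: Norman is a deep-learning image captioner, while this is a toy bigram model that ignores the image entirely, which is only loosely analogous to a model facing an ambiguous inkblot and falling back on whatever it saw in training. The two caption lists, and the names `train_bigram` and `generate`, are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(captions):
    """Count, for every word, which words follow it in the training captions."""
    follows = defaultdict(Counter)
    for caption in captions:
        words = ["<s>"] + caption.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def generate(model, max_words=8):
    """Greedily emit the most frequent next word, starting from the <s> marker."""
    word, out = "<s>", []
    for _ in range(max_words):
        if not model[word]:
            break
        word = model[word].most_common(1)[0][0]
        if word == "</s>":
            break
        out.append(word)
    return " ".join(out)


# Hypothetical training sets (assumptions, not real data from MSCOCO or Reddit).
neutral_captions = [
    "bird sitting on tree branch",
    "bird sitting on tree branch",
    "bird sitting on fence",
]
dark_captions = [
    "man falls from tall building",
    "man falls from tall building",
    "man falls from ladder",
]

neutral_model = train_bigram(neutral_captions)
dark_model = train_bigram(dark_captions)

# Same code, same (absent) input, different training data.
print(generate(neutral_model))  # bird sitting on tree branch
print(generate(dark_model))     # man falls from tall building
```

The code for both models is identical. The only thing that changes is what they were fed, and that alone decides what they "see".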

How bad is it? Well, check these Rorschach interpretations yourself — you'll find even more at this link:

That's incredibly disturbing. On the other hand, it's not a surprise. About two years ago, Twitter users taught Microsoft's "Tay" AI chatbot to be racist.