Meta Allegedly Used 82TB Of Stolen Books To Train Its AI

If you follow AI products such as ChatGPT and Gemini, you also have to realize one of the sad realities about them. We can't have advanced AI without proper training, and the training process involves exposing the AI to tons of high-quality data. Another reality is that I, as a ChatGPT user, do not want the personal data in my chats with the AI to help train better models that could be even more useful. Similarly, copyright content owners aren't happy with AI firms training their chatbots on their works without consent. Yet it's something that happens all the time. Also, some AI companies might not want to spend the money to obtain consent when they can get the data from shadier corners of the internet.

It's not just OpenAI that has to face copyright lawsuits, as Meta is fighting its own AI-related copyright infringement case. While the class action suit against Meta isn't surprising, the revelations that have come from it shed more light on the kind of data AI models like Meta AI use.

Meta reportedly downloaded as much as 82TB of pirated books from illegal sources to train its AI. The figure comes from alleged communications between Meta employees that came to light in the lawsuit. It follows Meta's admission that it torrented tens of millions of pirated books.

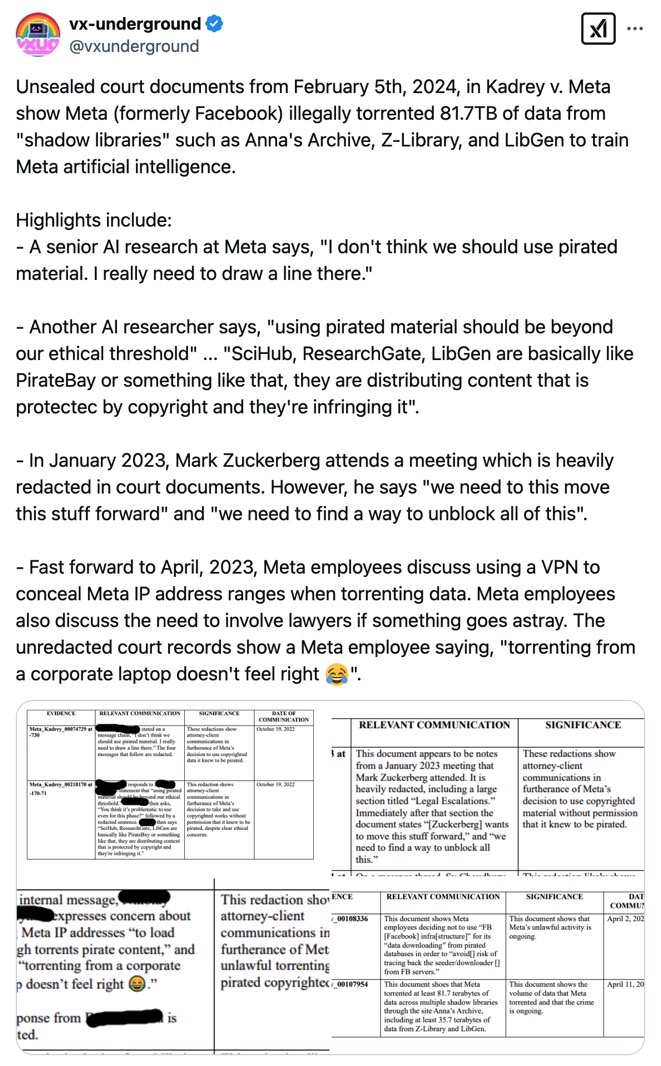

Documents from the lawsuit against Meta surfaced on X, providing more details about Meta's practices. They even include comments from Meta employees involved in the process who mused on the type of illegal data collection that Meta was doing.

Here are some of the comments from the documentation:

- "I don't think we should use pirated material. I really need to draw a line there." – A senior Meta AI researcher.

- "using pirated material should be beyond our ethical threshold"... "SciHub, ResearchGate, LibGen are basically like PirateBay or something like that, they are distributing content that is protected by copyright and they're infringing it." – Another AI researcher.

- "torrenting from a corporate laptop doesn't feel right 😂" – Meta employees discussing in April 2023 using a VPN to conceal Meta IP addresses when downloading pirated content.

The documents also mention a January 2023 Meta meeting, which Meta CEO Mark Zuckerber supposedly attended and said he wanted to "move this stuff forward" and that "we need to find a way to unblock this."

As a reminder, ChatGPT went viral in late November 2022, stunning the tech world and prompting a major shift towards AI-first software and hardware products. While Meta's open-source Llama models were praised in the past years, Meta AI hasn't actually been making the news like ChatGPT or Gemini for AI innovations and new features.

Last September, Zuckerberg downplayed the need to pay creators according to their demands in an interview:

"I think individual creators or publishers tend to overestimate the value of their specific content in the grand scheme of this.

My guess is that there are going to be certain partnerships that get made when content is really important and valuable [...] when push comes to shove, if they demanded that we don't use their content, then we just wouldn't use their content. It's not like that's going to change the outcome of this stuff that much."

When Zuckerberg made the comments above, Meta was already being sued for infringing copyright. Now that we've learned the amount of pirated content Meta allegedly downloaded to train the AI, the comment reads much differently.

Meta AI might already be trained on copyrighted content; Meta achieved its purpose. Removing the copyrighted content now is probably easy, and it'll probably not change the outcome of "this stuff" that much.

That is, Meta already got what it needed, at least for the first phase of Llama development. That's assuming Meta used the data to train the AI. The company might always say they only downloaded it but never used it.

The outcome of the trial might change how Meta handles new sources of content in the future, especially considering these damning revelations. It's not just the massive amount of printed books that Meta might have downloaded to train its AI; it's also the practices used to do it.

According to Tom's Hardware and Ars Technica, Meta took steps to ensure the downloading of illegal content would not be traced back to Meta. This suggests Meta took deliberate steps to get pirated content for its AI, fully knowing what it was doing.

Ironically, OpenAI accuses DeepSeek of using ChatGPT data to train the DeepSeek AI that went viral a few weeks ago. That's on par with copyright holders saying that OpenAI and Meta copied their works to train AIs.