'Look Around' Cars For Apple Maps Will Help Train Apple's AI

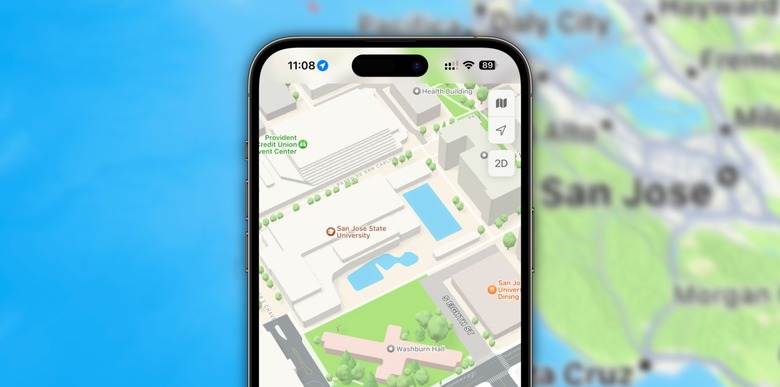

The cars responsible for collecting photos and 3D scans for Apple Maps' Look Around feature now have a new task: helping train Apple Intelligence models. As spotted by 9to5Mac, Apple's equivalent of Google Street View will now capture images to improve some of the company's AI functions.

On a webpage, Apple explains that imagery gathered through its Maps Image Collection practices will also be used to train generative AI models, including those behind features such as Image Playground and Clean Up. Ultimately, these captures could help Apple's AI create more realistic images, among other possible uses.

The Apple Maps Image Collection page now states the following: "In addition to improving Apple Maps and the algorithms that blur faces and license plates in images published in Look Around feature, Apple also will use blurred imagery collected during surveys conducted beginning in March 2025 to develop and improve other Apple products and services. This includes using data to train models powering Apple products and services, including models related to image recognition, creation, and enhancement."

Apple's Image Collection practice has existed for over a decade now. Apple uses vehicle surveys, iPhones, iPads, and other devices to collect data from locations and help keep its maps up to date.

Like Google, Apple already blurs faces and license plates in its published Look Around images. Still, it's also possible to ask the company to blur a face, a license plate, or your own house.

Interestingly, 9to5Mac noticed this change to Look Around data collection a few weeks after Apple announced that its long-awaited Siri revamp had been indefinitely postponed. With the revamp now expected in the coming year, Apple had to apologize for unveiling a breakthrough feature that was nothing but a concept.

While the company rearranges its executives to work on improving Siri and Apple Intelligence, the firm is also focusing on other AI tasks to keep its platform current.