I Want One Of These AI-Powered Humanoid Robots To Help Me Around The House

I'm a longtime ChatGPT user, and I can't imagine using the internet without some sort of AI to help me out. What I need from ChatGPT and similar programs is an assistant, and I can't wait for AI agents to become more capable and easier to use.

But I've also started thinking about the more distant future, one where I would need AI to have an actual body. As I grow older, I will want a humanoid robot around the house to help me with all sorts of tasks, including ones that might be too difficult for me. I'll also want an AI home robot to free up more of my time by handling some of my chores.

This isn't just wishful thinking, as several companies are developing such technology. Figure is one of them, and the company just unveiled a brand-new AI model for humanoid robots that could let them do exactly what I want. Called Helix, it's a Vision-Language-Action (VLA) model, and it already looks like a breakthrough for home robotics.

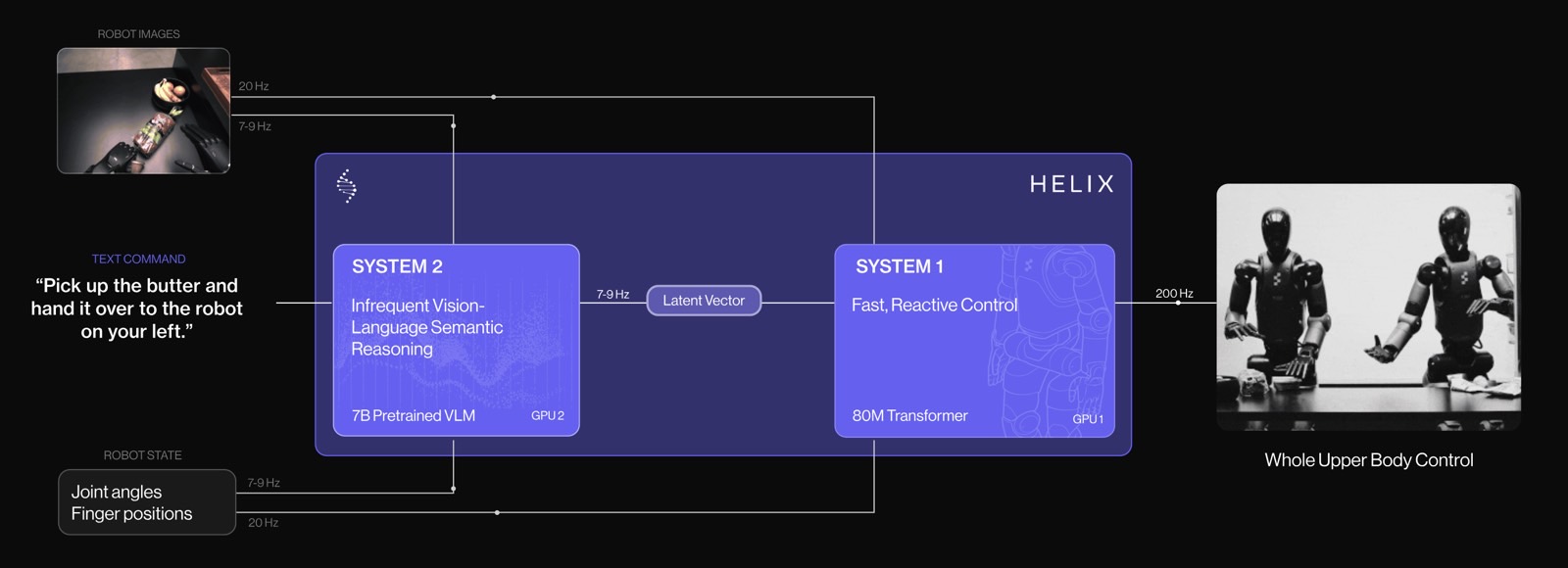

Helix combines two AI models, each running on its own GPU inside the robot, to understand commands and perform actions. One model (S2) processes natural language and visual input to understand a command, such as a request to pick up an object from the table. The other (S1) turns S2's output into precise, real-time control of the robot's body and limbs so it can actually perform the task.

What's amazing about Helix is that it was trained on only about 500 hours of data, yet the resulting model lets robots handle objects they've never seen and judge whether those objects need careful handling.

Creating robots for the home is much more challenging than making robots for highly controlled environments like manufacturing lines. Home robots have to deal with the ever-changing landscape of a household. There's all sorts of clutter around the house, there's different furniture to account for, and every home has its own layout.

In other words, home robots can't be trained on a generic idea of a household. They have to adapt to what they see in the specific home where they're deployed.

They also have to understand natural language and then carry out spoken commands.

These are the problems the Figure team set out to solve, and that's how they developed the Helix model.

Unlike previous systems, Helix is a unified model: Figure's engineers built it around a single set of neural network weights that handles both interpreting visual and language input and executing the robot movements a command calls for. The same model also lets the robot adapt to various scenarios and work with other robots nearby.

Figure combined two AIs to create the Helix Vision-Language-Action model. S2 is the high-level "thinking" system, and it runs at a lower frequency, meaning it updates less often. This model understands my commands while also taking in visual input from its surroundings. Telling the robot to pick up a glass would be enough for the AI to understand it's handling a fragile object, even if it had never handled a glass during training.

S1 is the AI that controls the movements of the robot's fingers, arm, and torso to execute the command S2 has interpreted. S1 runs at a much higher frequency than S2, which lets the robot adapt to real-life situations in real time. For example, to move a glass from a dishwasher to a drawer, the robot may have to shift its torso while also moving an arm so its fingers can reach the glass.
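To make the two-speed idea more concrete, here is a minimal, hypothetical Python sketch of how a slow "understanding" loop (S2) and a fast control loop (S1) can divide the work. Everything in it is an assumption for illustration: the names `s2_understand`, `s1_act`, `Latent`, and `run`, the fragility rule, and the 8 Hz / 200 Hz rates are not Figure's code, API, or configuration. The real S1 and S2 are neural networks, each on its own GPU, whereas this sketch simply simulates both loops in one thread.

```python
from dataclasses import dataclass, field
from typing import List

# Illustrative loop rates: assumptions for this sketch, not Figure's configuration.
S1_HZ = 200  # fast loop: motor control
S2_HZ = 8    # slow loop: understanding the command and the scene


@dataclass
class Latent:
    """Hypothetical summary that S2 hands to S1: what to grab and how gently."""
    target: str
    fragile: bool


def s2_understand(command: str, scene: List[str]) -> Latent:
    """Stand-in for the slow vision-language model (S2).

    Picks the first visible object mentioned in the command and applies a
    hypothetical fragility rule. The real S2 is a neural network, not a lookup.
    """
    target = next((obj for obj in scene if obj in command), "nothing")
    fragile = target in {"glass", "mug", "egg"}  # hypothetical rule
    return Latent(target=target, fragile=fragile)


def s1_act(latent: Latent) -> float:
    """Stand-in for the fast control policy (S1).

    Turns the most recent S2 summary into a grip force for this tick:
    no force if there is nothing to grab, a light grip for fragile objects.
    """
    if latent.target == "nothing":
        return 0.0
    return 0.2 if latent.fragile else 0.8


@dataclass
class Log:
    s2_updates: int = 0
    grip_forces: List[float] = field(default_factory=list)


def run(command: str, scene: List[str], seconds: float = 1.0) -> Log:
    """Simulate both loops in one thread over `seconds` of robot time."""
    log = Log()
    ticks_per_s2 = S1_HZ // S2_HZ  # S1 ticks between S2 refreshes (25 here)
    latent = Latent(target="nothing", fragile=False)
    for tick in range(int(seconds * S1_HZ)):
        # S2 refreshes its understanding only once every `ticks_per_s2` ticks...
        if tick % ticks_per_s2 == 0:
            latent = s2_understand(command, scene)
            log.s2_updates += 1
        # ...while S1 produces a control output on every tick, reusing the
        # latest S2 summary in between refreshes instead of waiting for it.
        log.grip_forces.append(s1_act(latent))
    return log


if __name__ == "__main__":
    log = run("pick up the glass", ["toy", "glass", "apple"])
    print(log.s2_updates, len(log.grip_forces), log.grip_forces[0])  # prints: 8 200 0.2
```

The point the sketch tries to capture is that the fast loop never stalls on the slow one: in one simulated second, S2 updates 8 times while S1 issues 200 control outputs, each based on the most recent S2 summary.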

To achieve this, Figure's engineers trained Helix on about 500 hours of "high quality supervised data." That's less than 5% of the size of previous VLA datasets, which implies those earlier datasets ran to more than 10,000 hours. Training also involved an AI model watching videos of robot actions and working out which command would have produced them, so each clip could be paired with a matching instruction.

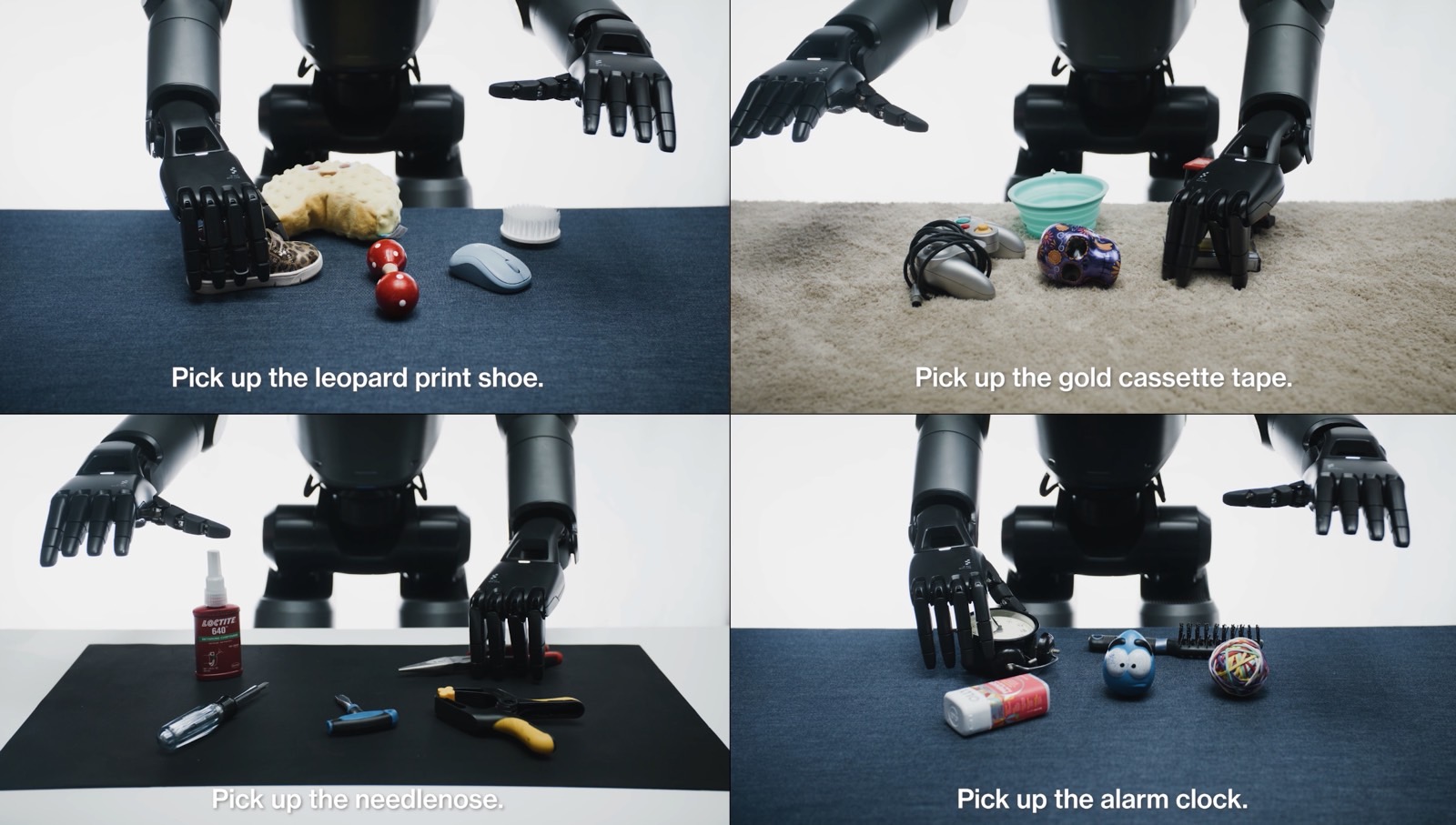

This approach allows Helix to learn to recognize and handle a variety of household objects without having been trained to pick up each of those specific objects. The videos Figure shared show the robot picking up a specific object from a cluttered table simply by understanding a spoken command and analyzing its surroundings.

That also means adapting its movements to the action at hand. It's one thing for the robot to pick up a toy and another to pick up a glass. While the robot can't feel objects the way humans do, its training taught it to adjust how it handles an object depending on how fragile it is. The robot can also pick up smaller objects from a pile, adapting to their size.

Helix can also have two humanoid robots work together, and there may be situations at home where you'll want two robots helping you.

In the examples Figure released, one robot adapts to the movements of the other while also following a command to pick up an object from that other robot. The two robots coordinate without being pre-programmed to do so.

As exciting as the Figure robots and the Helix AI might seem, there's no telling when they'll be available commercially or how much one robot will cost. Figure itself says the technology it has developed only scratches the surface of what's possible. The next step is scaling Helix "by 1,000x and beyond."

Meanwhile, Figure has published more details about the Helix AI, complete with videos of its robots in action.