Google's New Gemini Robotics AI Models Are Blowing My Mind

When ChatGPT first arrived, we got a text-based chatbot that could attempt to reasonably answer any question, even if it got things wrong (it still does, as hallucinations have not disappeared). It didn't take long for the AI to gain new abilities. It could see things via photos and videos. It could hear humans speak and respond via its own voice.

The next step was to give the AI eyes and ears that could observe your surroundings in real time. We already have smart glasses that do that, such as the Ray-Ban Meta model. Google and others are working on similar products. Apple might put cameras inside AirPods for the same reason.

The work will be complete when the AI has a body to be physically present around us and help us with all sorts of tasks that require handling real-life objects. I saw the writing on the wall months ago when I said I wanted humanoid AI robots for the home.

More recently, I saw the kind of AI model that would give robots the smarts to see and understand the physical world around them, interact with objects they were never trained on, and perform actions they were never taught. That was Figure's Helix Vision-Language-Action (VLA) model for AI robots.

Unsurprisingly, others are working on similar technology, and Google just announced two Gemini Robotics models that blew my mind. Like the Figure tech, the Gemini Robotics AIs will help robots understand human commands, their surroundings, and what they need to do to perform the tasks humans give them.

We're still in the early days of AI robotics, and it'll be a while until the humanoid robot helper I want around the house is ready for mass consumption. But Google is already laying the groundwork for that future.

Google DeepMind published a blog post and a research paper describing the new Gemini Robotics and Gemini Robotics-ER models it developed on the back of Gemini 2.0 tech. That's Google's most advanced generative AI program available to users right now.

The first, Gemini Robotics, is the VLA model built on Gemini 2.0 "with the addition of physical actions as a new output modality for the purpose of directly controlling robots."

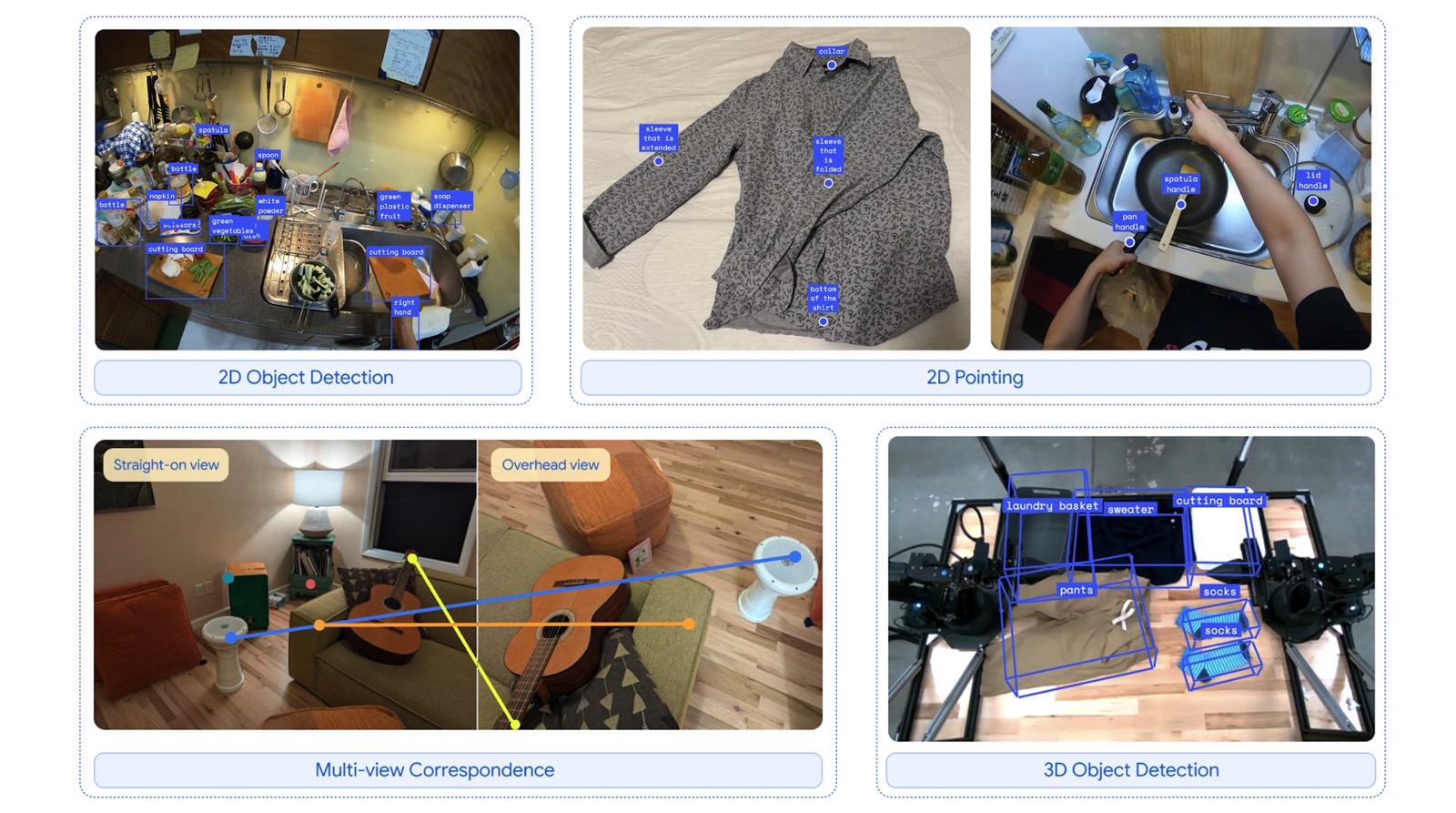

The second is "a Gemini model with advanced spatial understanding, enabling roboticists to run their own programs using Gemini's embodied reasoning (ER) abilities." It's aptly called Gemini Robotics-ER.

By embodied reasoning, Google means robots need to develop "the humanlike ability to comprehend and react to the world around us" and do it safely.

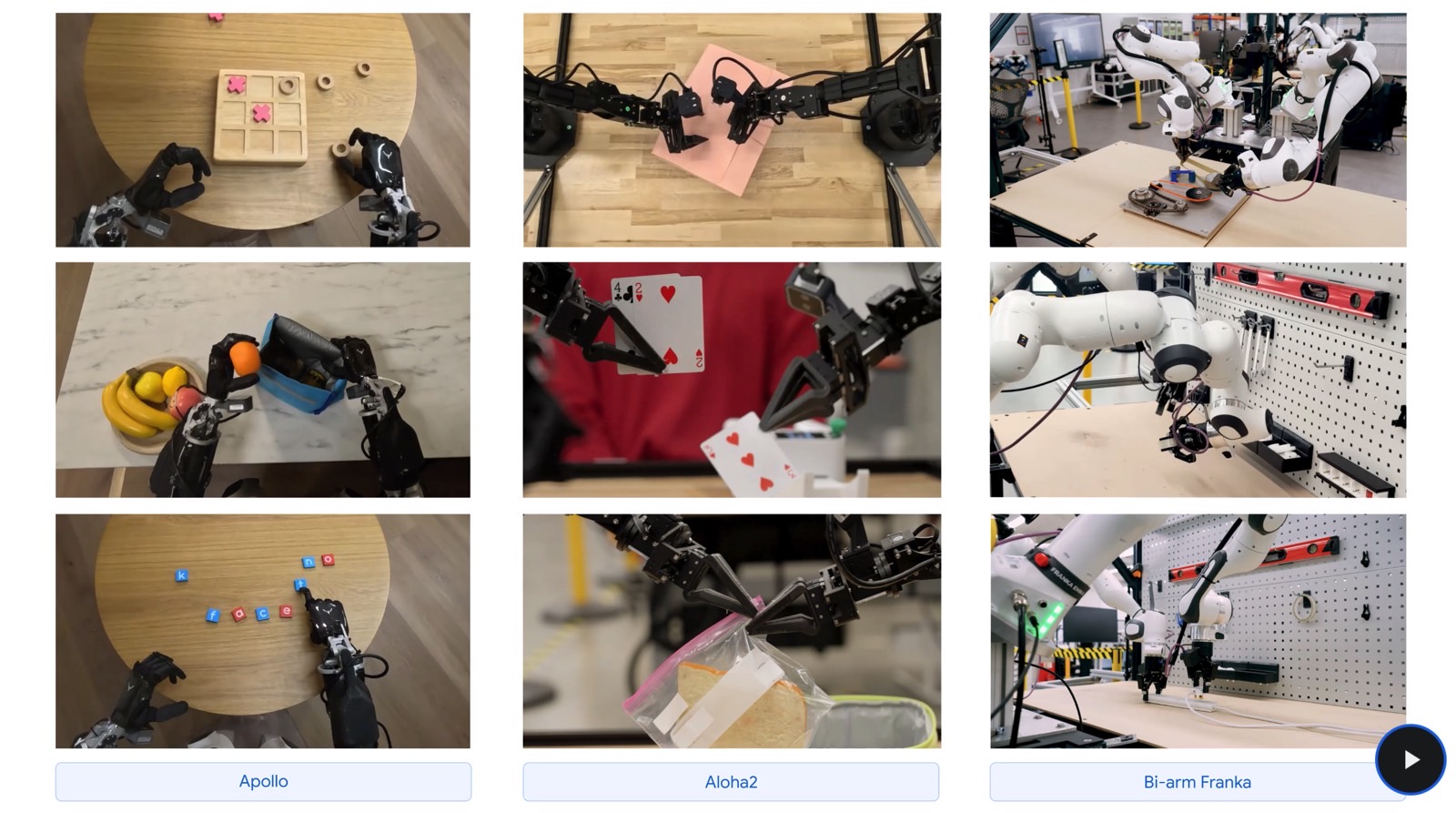

Google shared various videos that show AI robots in action, responding to natural language commands and adapting to changing landscapes. Thanks to Gemini, the robots can see their surroundings and understand natural language. They can then perform new tasks even though they might have never interacted with objects or places before.

Google names three qualities that guided the development of Gemini Robotics, namely generality, interactivity, and dexterity:

> To be useful and helpful to people, AI models for robotics need three principal qualities: they have to be general, meaning they're able to adapt to different situations; they have to be interactive, meaning they can understand and respond quickly to instructions or changes in their environment; and they have to be dexterous, meaning they can do the kinds of things people generally can do with their hands and fingers, like carefully manipulate objects.

As you'll see in the videos in this post, the robots can recognize all sorts of objects on a table and perform tasks in real time. For example, a robot slam dunks a tiny basketball through a hoop when told to.

The AI robots can also quickly adapt to the changing landscape. Told to put bananas in a basket of a specific color on a table, the robots perform the task correctly even though the human annoyingly moves that basket.
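To get a feel for why the moving basket doesn't trip the robot up, here's a toy Python sketch of the general idea of closed-loop control: look again before every move instead of planning once and hoping nothing changes. This is my own illustration, not Google's method or any Gemini API. The names `step_toward`, `deliver`, and `basket_positions` are hypothetical, and the list of positions is a stand-in for what a camera would report.

```python
import math


def step_toward(pos, target, max_step):
    """Move pos toward target by at most max_step; return the new position."""
    dx, dy = target[0] - pos[0], target[1] - pos[1]
    dist = math.hypot(dx, dy)
    if dist <= max_step:
        return target  # close enough to land exactly on the target
    scale = max_step / dist
    return (pos[0] + dx * scale, pos[1] + dy * scale)


def deliver(gripper, basket_positions, max_step=0.1, tolerance=1e-6):
    """Closed-loop delivery: re-observe the basket before every move.

    basket_positions is a hypothetical stand-in for a camera feed: item i is
    where the basket is seen at step i (the last entry repeats once the list
    is exhausted). Returns the final gripper position and the steps taken.
    """
    steps = 0
    while True:
        # Fresh observation each iteration, so a moved basket is noticed.
        basket = basket_positions[min(steps, len(basket_positions) - 1)]
        if math.dist(gripper, basket) <= tolerance:
            return gripper, steps
        gripper = step_toward(gripper, basket, max_step)
        steps += 1


# The basket sits at (1.0, 0.0) for five steps, then a human moves it.
feed = [(1.0, 0.0)] * 5 + [(0.0, 1.0)]
final, steps = deliver((0.0, 0.0), feed)
print(final, steps)
```

The gripper starts out heading for the original spot, then changes course as soon as the basket shows up somewhere else. A real robot has to do this with vision, language, and a physical arm, which is the hard part.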

Finally, the AI robots can display fine motor skills, such as folding origami or packing a ziplock bag.

Google explains that the Gemini Robotics model works with all sorts of robot types, whether a bi-arm robotic platform or a humanoid model.

Gemini Robotics-ER is an equally brilliant AI tech for robotics. This model focuses on understanding the world so robots can plan movements and tasks within the space where they're supposed to act. With Gemini Robotics-ER, AI robots would draw on Gemini 2.0's reasoning and coding abilities to work out new behaviors on the fly:

> Gemini Robotics-ER improves Gemini 2.0's existing abilities like pointing and 3D detection by a large margin. Combining spatial reasoning and Gemini's coding abilities, Gemini Robotics-ER can instantiate entirely new capabilities on the fly. For example, when shown a coffee mug, the model can intuit an appropriate two-finger grasp for picking it up by the handle and a safe trajectory for approaching it.

All of this is very exciting, at least to this AI enthusiast, even though I know I have plenty of waiting to do until AI robots powered by such tech are available commercially.

Before you start worrying about AI robots becoming the enemy, like in the movies, you should know that Google, in previous work, also developed a Robot Constitution to ensure that AI robots behave safely in their environments and avoid harming humans. That constitution was inspired by Isaac Asimov's Three Laws of Robotics, and Google has since built on it with a framework that can be adjusted via simple natural language instructions:

> We have since developed a framework to automatically generate data-driven constitutions – rules expressed directly in natural language – to steer a robot's behavior. This framework would allow people to create, modify, and apply constitutions to develop robots that are safer and more aligned with human values.

You can read more about the Gemini Robotics models in Google DeepMind's blog post and research paper.