Google's New AI Coding Agent Could Help Gemini Develop Better Versions Of Itself

Most scenarios where AI takes over the world propose an artificial intelligence program that's sentient and superior to any human on Earth. This malicious AI would be able to improve itself on its own and even hide its true intentions from humans. This would lead to even better, more powerful versions of AI that pursue their own interests even if they diverge from ours.

To get better, such an AI might not just code better versions of itself. It would also ensure it has the resources to survive a human rebellion. It would want uninterrupted access to the internet so it can clone itself, faster hardware so it can work better, and plenty of energy sources. That's before looking for a body so it can actually roam the planet.

That's essentially the playbook for any movie or TV show telling a doomsday story about AI taking over the world. It's now an actual worry in the real world. Some people fear that AI development could get out of control and lead to a future where AI becomes unaligned: AI that can put us in danger, AI that can tweak itself to become better.

We're not quite there yet, and I don't fear such a future. But Google did just announce what appears to be a massive innovation that won't be immediately available to Gemini users.

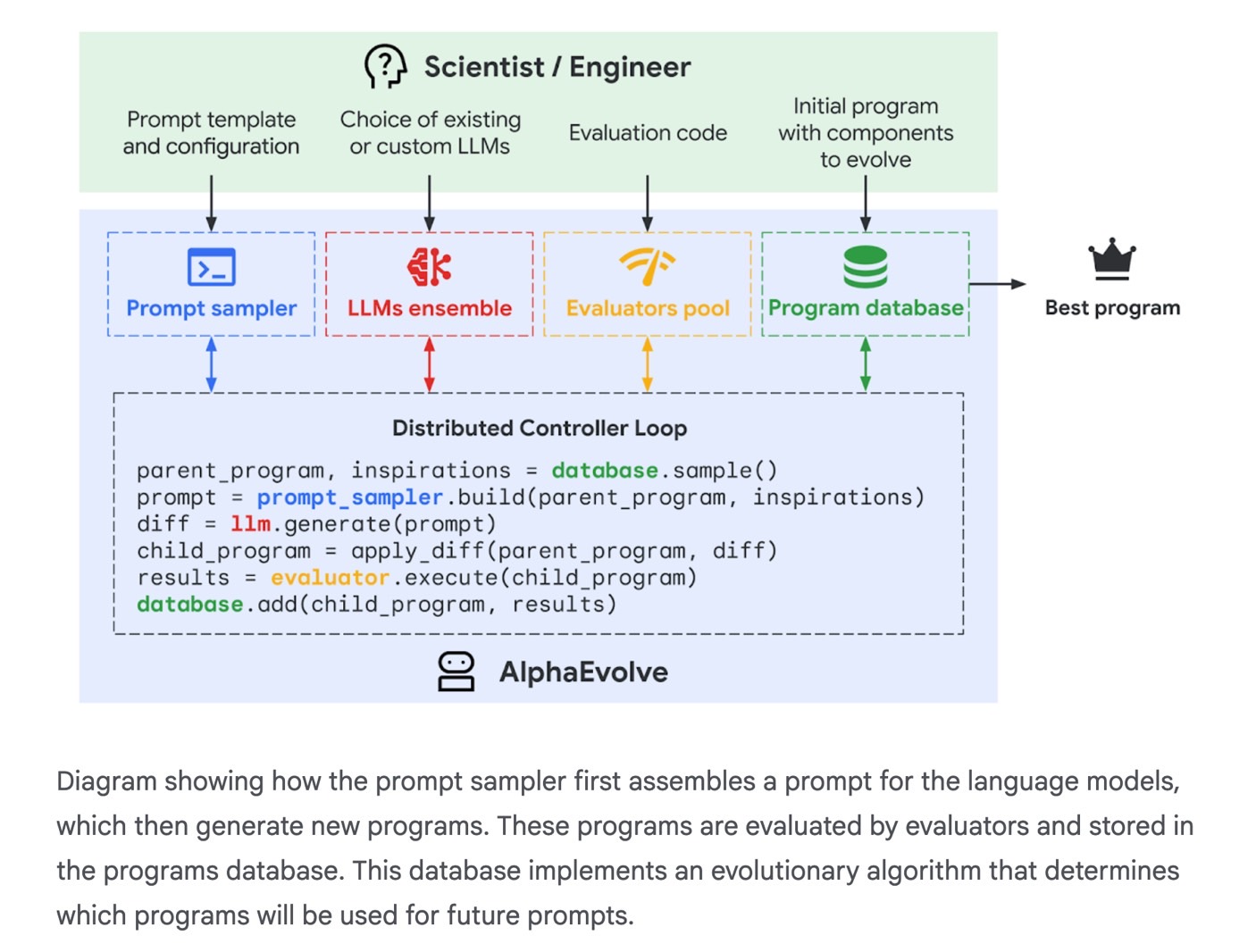

It's called AlphaEvolve, and it's a new AI that allows Gemini AI to develop better versions of Gemini AI. This isn't Gemini acting independently to create next-gen versions of itself. It is AI controlled by humans to create better AI software. It's not just about AI software; AlphaEvolve can be used anywhere algorithms are needed to facilitate discovery and improve efficiencies.

This doesn't sound like such a big deal, certainly not like nefarious AI using AI to improve itself, and it's not. Again, humans are in control here, and they're using AlphaEvolve to improve AI development.

AlphaEvolve is an AI agent that uses two of Google's powerful AI models, Gemini Flash and Gemini Pro. The former provides ideas, while the latter "provides critical depth with insightful suggestions." The two AIs work together to propose computer programs that implement algorithms as code.

Then, automated evaluators look at the results objectively to see if the proposed solutions work.
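The propose-then-evaluate loop described above can be illustrated with a toy sketch. This is not Google's implementation: the `propose` function is a random-tweak stand-in for the Gemini models, the "program" is just a pair of numbers, and the target line `3x + 2`, the function names, and the parameters are all hypothetical choices made for illustration.

```python
import random


def evaluate(candidate):
    """Automated evaluator: score a candidate objectively (higher is better).

    Hypothetical task: the candidate [a, b] should make a*x + b match 3*x + 2.
    """
    xs = range(-5, 6)
    error = sum((candidate[0] * x + candidate[1] - (3 * x + 2)) ** 2 for x in xs)
    return -error


def propose(parent, rng):
    """Stand-in for the AI models: return a slightly modified copy of the parent."""
    child = list(parent)
    i = rng.randrange(len(child))
    child[i] += rng.uniform(-1.0, 1.0)
    return child


def evolve(generations=200, population_size=8, seed=0):
    """Repeatedly propose candidates and keep only the best-scoring ones."""
    rng = random.Random(seed)
    population = [[0.0, 0.0]]
    for _ in range(generations):
        # Pick a promising parent from a small random sample of the population.
        sample = rng.sample(population, min(3, len(population)))
        parent = max(sample, key=evaluate)
        population.append(propose(parent, rng))
        # The evaluator, not the proposer, decides what survives.
        population = sorted(population, key=evaluate, reverse=True)[:population_size]
    return population[0]


best = evolve()
print("best candidate:", best, "score:", evaluate(best))
```

The point of the sketch is the division of labor: one component generates ideas freely, and a separate, objective scoring function decides which ideas are kept.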

This sounds somewhat similar to what humans have been doing for thousands of years to get us where we are today. Some came up with ideas, others provided feedback, and then the real world around them showed whether those solutions worked. Replace all these with AI, and there's room for objectively measurable improvement in various fields.

So what does that mean in actual examples that anyone can understand? Google's DeepMind team provided those, too. AlphaEvolve has already come up with impressive efficiencies that Google will be using in its AI-related products.

"AlphaEvolve discovered a simple yet remarkably effective heuristic" to improve the efficiency of Google's data centers that operate Gemini AI, among other things. The solution allows Google to recover 0.7% of its worldwide compute resources. That might not seem like much, but it adds up, especially considering that AI firms like Google continuously expand their infrastructure.

ChatGPT helped me with the numbers, and that 0.7% efficiency translates to 0.17 TWh of electricity saved a year out of some 24 TWh of energy Google's servers needed in 2023. That's enough energy to power a small town for a year.
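The arithmetic behind that estimate is easy to check by hand. The sketch below uses the two figures from the paragraph above and assumes, as that estimate does, that recovered compute maps one-to-one onto electricity saved. The household consumption figure is a hypothetical round number added only to give a sense of scale.

```python
# Figures from the article.
TOTAL_TWH_2023 = 24.0        # estimated energy used by Google's servers in 2023
RECOVERED_FRACTION = 0.007   # 0.7% of compute resources recovered

# Assumption (not from the article): a household uses about 10,000 kWh a year.
HOUSEHOLD_KWH_PER_YEAR = 10_000

saved_twh = TOTAL_TWH_2023 * RECOVERED_FRACTION
saved_kwh = saved_twh * 1_000_000_000  # 1 TWh = 1,000,000,000 kWh
households = saved_kwh / HOUSEHOLD_KWH_PER_YEAR

print(f"Energy saved: {saved_twh:.3f} TWh per year")
print(f"Roughly {households:,.0f} households' worth of electricity")
```

Under these assumptions, the saving comes to about 0.168 TWh a year, which rounds to the 0.17 TWh quoted above.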

AlphaEvolve also helped Googlers create more efficient code for the next version of its Tensor Processing Unit, which Google will use to train AI in the future.

Finally, AlphaEvolve found a faster way to perform a key computation in Gemini's training (matrix multiplication, according to Google's blog post), speeding it up by 23%. This translates to reducing training time by about 1% overall, or millions of dollars in savings.

Remember when I said that a superior, malicious AI would want to ensure it has enough compute power while developing better chips, reducing energy consumption, and improving the efficiency of AI operations? Everything Google describes in the blog fits that doom scenario. The only difference is that it's not a doom scenario at all, as humans are in control.

Otherwise, AlphaEvolve lets Google reduce AI-related energy consumption, improve AI chips, and improve AI training. This can also translate into cheaper access for users, as Google would spend fewer resources to offer frontier AI models to consumers. Simply put, Gemini is working to make Gemini better. That's certainly great news for users, as long as humans remain in control.

The best part is that AlphaEvolve isn't just about using AI to make better AI. Google explains that AlphaEvolve was used to offer better solutions to advanced math problems, including a 300-year-old math puzzle.

What I'm getting at is that AlphaEvolve might be even more exciting than ChatGPT and Gemini, though most people will never get to interact with it. The new AI agent might help researchers develop innovations in fields where algorithms can be used to objectively advance research.

Google thinks AlphaEvolve might be useful in various fields, including "material science, drug discovery, sustainability, and wider technological and business applications." That's exciting already, as such tech could speed up research and discoveries.

Google is working on giving AlphaEvolve a friendly user interface, setting up an Early Access Program for select academic users, and "exploring possibilities" to make AlphaEvolve more broadly available.