Google's Fix For The Fake AI Photo Problem It Created Still Isn't Good Enough

When I saw the photos of Donald Trump working in a McDonald's a few days ago, my first thought was generative AI. I assumed someone had created the fake images with a tool like Google's Gemini, and I scrolled away to something else. Only later did I see that the images were real.

That's how someone like me, a fan of genAI products like ChatGPT, Gemini, and Apple Intelligence, browses the web now. I've started assuming that what I see isn't necessarily real and requires fact-checking. I only bother to check if I'm truly interested in something. Otherwise, I scroll away.

But regular mortals who have never used genAI products, or who don't know how easy it is to create fake images on Google's Pixel 9 phones, might not realize there's a problem. They might still believe anything they see online.

That's why the fake images that Google lets you create with Magic Editor in Google Photos or the Pixel 9 Reimagine feature are so dangerous. They can be used to create misleading narratives that might go viral on social media.

Thankfully, Google is aware of the problem it created, and it's starting to fix it. We knew a Google Photos AI transparency feature was in the works, and Google is now ready to start rolling it out.

Next week, Google Photos will display information showing that a photo has been edited with Google AI. That's a great step forward, yes. But it's a fix that people like me will notice, not regular mortals. The AI-edited fake photos will show no visible watermark to indicate a picture was created with Gemini and is, therefore, fake.

Google explained the new changes in a blog post. They go beyond describing the use of AI in a photo's metadata.

"Photos edited with tools like Magic Editor, Magic Eraser and Zoom Enhance already include metadata based on technical standards from The International Press Telecommunications Council (IPTC) to indicate that they've been edited using generative AI," Google says.

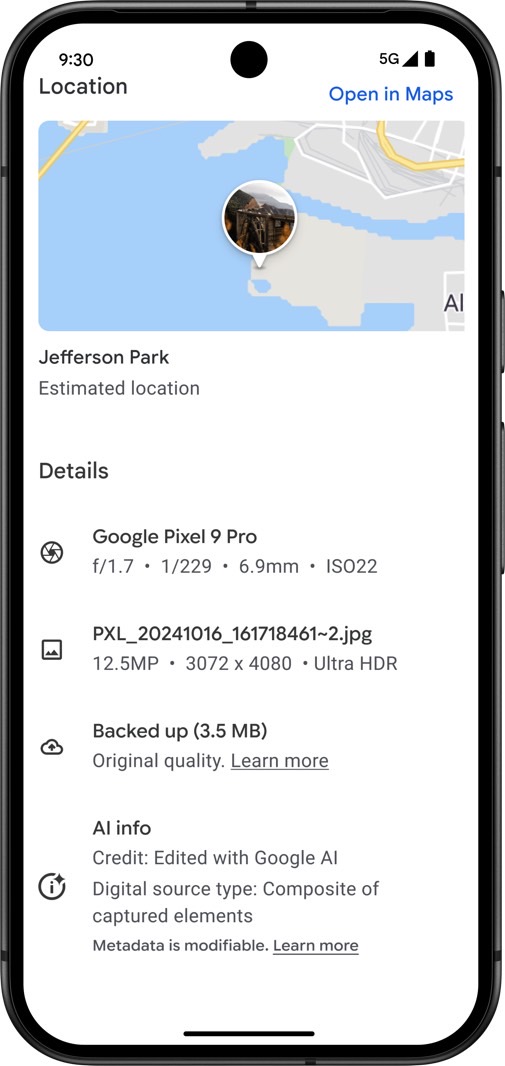

The next step is presenting the AI information in the Details section of Google Photos. That's where you'd see information like the file name, location, and backup status. Hopefully, people will start looking for those details whenever looking at images via Google Photos.

Google also says it'll use IPTC metadata "to indicate when an image is composed of elements from different photos using non-generative features." This applies to certain Pixel features like Best Take (Pixel 8 and Pixel 9) and Add Me (Pixel 9). Using Best Take and Add Me leads to the creation of fake photos, no matter how impressive the technology might be.
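For the curious, here's roughly what checking for that kind of label could look like. This is a minimal sketch, not Google's implementation. It assumes the label is stored as an IPTC "Digital Source Type" URI somewhere in the file's embedded metadata, and the specific terms it looks for (`trainedAlgorithmicMedia`, `compositeWithTrainedAlgorithmicMedia`, `compositeCapture`) come from the IPTC vocabulary. Which of them Google Photos actually writes for each feature is my assumption, and the function names are made up for illustration.

```python
import re

# IPTC Digital Source Type terms are published as URIs under this prefix.
# We scan the raw bytes for any such URI instead of fully parsing the metadata.
_DST_PATTERN = re.compile(
    rb"https?://cv\.iptc\.org/newscodes/digitalsourcetype/([A-Za-z]+)"
)

# Assumption: these IPTC terms correspond to generative AI edits.
GENERATIVE_TERMS = {"trainedAlgorithmicMedia", "compositeWithTrainedAlgorithmicMedia"}
# Assumption: this IPTC term corresponds to non-generative composites
# (several captures merged into one picture).
COMPOSITE_TERMS = {"compositeCapture"}


def find_digital_source_types(data: bytes) -> set[str]:
    """Return every Digital Source Type term found in the raw file bytes."""
    return {match.decode("ascii") for match in _DST_PATTERN.findall(data)}


def describe(data: bytes) -> str:
    """Turn the terms found in the file into a short human-readable label."""
    terms = find_digital_source_types(data)
    if terms & GENERATIVE_TERMS:
        return "edited with generative AI"
    if terms & COMPOSITE_TERMS:
        return "composite of multiple captures"
    return "no AI label found"


if __name__ == "__main__":
    # Hypothetical metadata fragment, made up for this example.
    sample = (
        b'<rdf:Description Iptc4xmpExt:DigitalSourceType='
        b'"http://cv.iptc.org/newscodes/digitalsourcetype/'
        b'compositeWithTrainedAlgorithmicMedia"/>'
    )
    print(describe(sample))
```

Note the last branch: a file with no label tells you nothing. Metadata like this disappears the moment someone takes a screenshot or an app strips it on upload, which is exactly why it's not enough on its own.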

The next step should be a visible watermark that you can't remove. That's something Samsung has tried to offer on its Galaxy AI phones that can manipulate imagery with genAI. Samsung's tool wasn't perfect either, but it's something.

Google says in the same blog post that the "work is not done." Other improvements concerning the transparency of AI edits in photos are hopefully in the works.

The bigger problem here is that Google introduced these advanced AI abilities before actually deploying fixes like the one announced this week. The reason it did so is simple to guess. Google wanted to prove Gemini had these abilities ready. Its AI can generate images and edit existing photos with photo-realistic quality.

By comparison, Apple has chosen not to give the iPhone similar features via Apple Intelligence. Not because it can't, but because it's more cautious about its software generating lifelike fakes.