Google I/O 2018: The 10 Biggest Announcements

The annual Google I/O developer conference is Google's biggest event of the year by far. Unlike Apple, where the biggest event each year is the company's late-summer iPhone unveiling, Google is a software company first and foremost. At Google I/O each year, Google takes us on a journey through the company's efforts to push the boundaries of consumer technology. Google isn't a completely open book, of course, and there are plenty of secret projects being worked on behind closed doors. But the company is always quite open about its core focuses, and Google I/O 2018 was a showcase of all the key areas of concentration at Google.

Artificial intelligence was obviously among the stars of the show at Google I/O 2018, and Google Assistant will play an even more central role in Google's ecosystem than it already has over the past few years. We also got our first glimpse at the newly updated version of Android P, which is available to developers (and anyone else who wants to install it on his or her Pixel phone) beginning today. The company covered all that and more during its 90-minute Google I/O 2018 keynote presentation, and we've rounded up all of the most important announcements right here in this recap.

Smart Compose in Gmail

This is a nifty new feature in Gmail that uses machine learning to predict not just the words users plan to type, but entire phrases. And we're not talking about simple predictions like addresses: the phrases are suggested based on context and user history. The feature will roll out to users in the next month.

Google Photos AI features

Google Photos is getting a ton of new features based on artificial intelligence and machine learning. For example, Google Photos can take an old restored black and white photo and not just convert it to color, but convert it to realistic color and touch it up in the process.

Google Assistant voices

The original Google Assistant voice was named Holly, and it was based on actual recordings. Moving forward, Google Assistant will get six new voices... including John Legend! Google is using WaveNet to make voices more realistic, and it hopes to ultimately perfect all accents and languages around the world. Google Assistant will support 30 different languages by the end of 2018.

Natural conversation

Google is making a ton of upgrades to Google Assistant revolving around natural conversation. For one, conversations can continue following an initial wake command ("Hey Google"). The new feature is called continued conversation and it'll be available in the coming weeks.

Multiple Actions support is coming to Google Assistant as well, allowing Google Assistant to handle multiple commands at one time.

Another new feature called "Pretty Please" will help young children learn politeness by responding with positive reinforcement when children say please. The feature will roll out later this year.

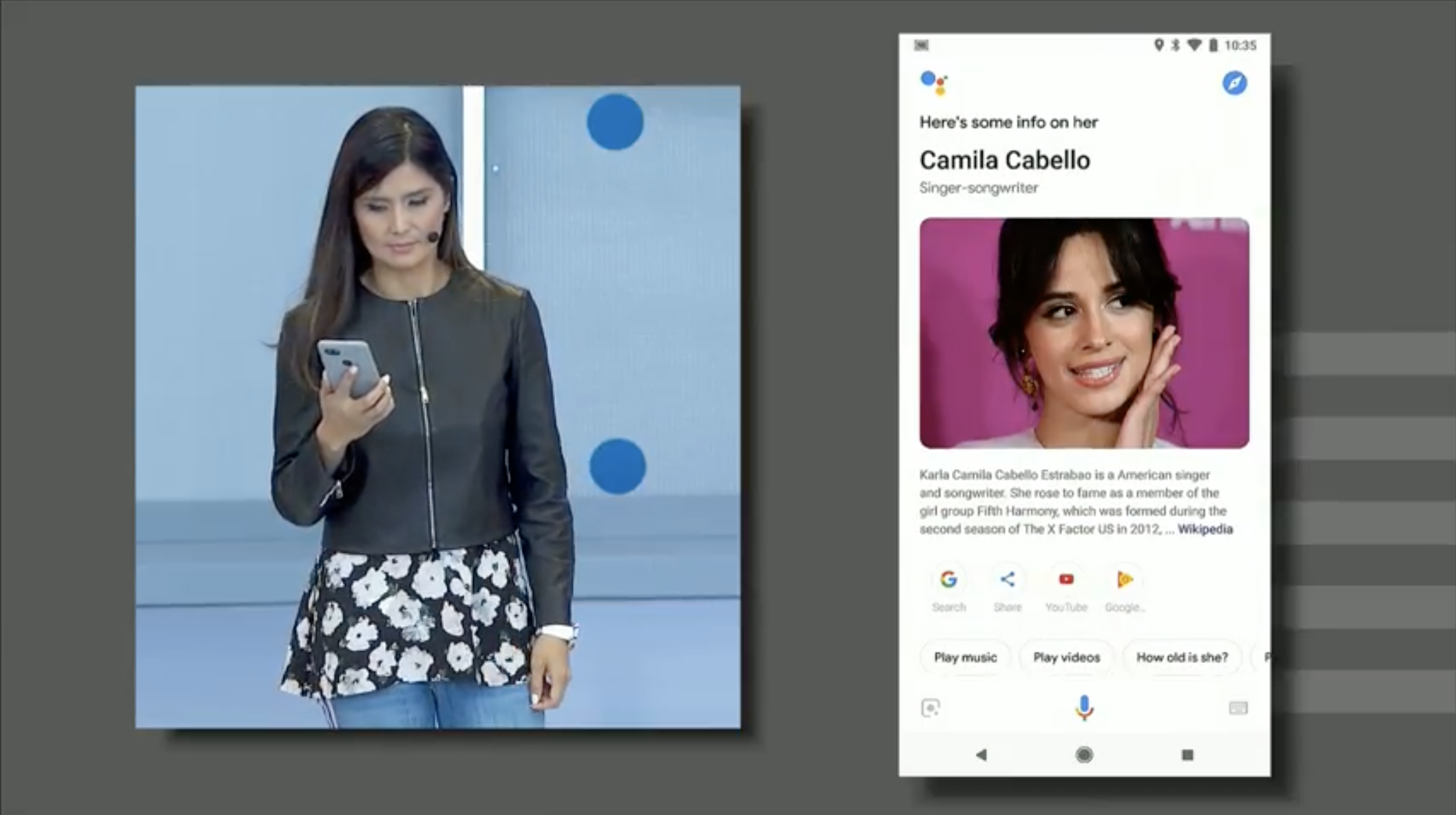

New visual canvas for Google Assistant

The first Smart Displays will be released in July, powered by Google Assistant. In order to power the experiences provided by Smart Displays, Google had to whip up a new visual interface for Assistant.

Google Assistant's visual UI is also getting an overhaul on mobile devices in 2018.

Swiping up in the Google app will show a snapshot of the user's entire day courtesy of Google Assistant. The new UI is coming to Android this summer and to iOS later this year.

Google Duplex

Using text to speech, deep learning, AI, and more, Google Assistant can be a real assistant. In a demo at I/O 2018, Google Assistant made a real call to a hair salon and had a back-and-forth conversation with an employee, ultimately booking an actual appointment for a woman's haircut within the time span requested by the user.

This is not a feature that will roll out anytime soon, but it's something Google is working hard to develop for both businesses and consumers. An initial version of the service that will call businesses to get store hours will roll out in the coming weeks, and the data collected will allow Google to update open and close hours under company profiles online.

Google News

Google News is getting an overhaul that focuses on highlighting quality journalism. The revamp will make it easier for users to keep up with the news by showing a briefing at the top with five important stories. Local news will be highlighted as well, and the Google News app will constantly evolve and learn a user's preferences as he or she uses the app.

Videos from YouTube and elsewhere will be showcased more prominently, and a new feature called Newscasts is like Instagram Stories, but for news.

The refreshed Google News will also take steps to help users understand the full scope of a story, showcasing a variety of sources and formats. The new feature, which is called "Full Coverage," will also help by providing related stories, background, timelines of key related events, and more.

Finally, a new Newsstand section lets users follow specific publications, and they can even subscribe to paid news services right inside the app. Paid subscriptions will make content available not just in the Google News app, but on the publisher's website and elsewhere as well.

The updated Google News app is rolling out on the web, iOS, and Android beginning today, and it will be completely rolled out by the end of next week.

Android P

Google had already released the first build of Android P for developers, but on Tuesday the company discussed a number of new Android P features that fall into three core categories.

Intelligence

Google partnered with DeepMind to create a feature called Adaptive Battery. It uses machine learning to determine which apps you use frequently and which ones you use only sporadically, and it restricts background processes for seldom-used apps in order to save battery life.

Another new feature called Adaptive Brightness learns a user's brightness preferences in different ambient lighting scenarios to improve auto-brightness settings.

App Actions is a new feature in Android P that predicts actions based on a user's usage patterns. It helps users get to their next task more quickly. For example, if you search for a movie in Google, you might get an App Action that offers to open Fandango so you can buy tickets.

Slices is another new feature that allows developers to take a small piece of their apps — or "slice" — that can be rendered in different places. For example, a Google search for hotels might open a slice from Booking.com that lets users begin the booking process without leaving the search screen. Think of it as a widget, but inside another app instead of on the home screen.

Simplicity

Google wants to help technology fade to the background so that it gets out of the user's way.

First, Android P's navigation has been overhauled. Swipe up on a small home button at the bottom and a new app switcher will open. Swipe up again and the app drawer will open. The new app switcher is now horizontal, and it looks a lot like the iPhone app switcher in iOS 11.

Also appreciated is a new rotation button that lets users choose which apps can auto-rotate and which ones cannot.

Digital wellbeing

Android P brings some important changes to Android that focus on wellbeing.

There's a new dashboard that shows users exactly how they spent their day on their phone. It'll show you which apps you use and for how long, and it provides other important info as well. Controls will be available to help users limit the amount of time they spend in certain apps.

An enhanced Do Not Disturb mode will stop visual notifications as well as audio notifications and vibrations. There's also a new "shush" feature that automatically enables Do Not Disturb when a phone is turned face down on a table. Important contacts will still be able to call even when the new Do Not Disturb mode is enabled.

There's also a new wind-down mode that fades the display to grayscale when someone uses his or her phone late at night before bed.

Google announced a new Android P Beta program just like Apple's public iOS beta program. It allows end users to try Android P on their phones beginning today.

Google Maps

A new "For You" tab in Google Maps shows you new businesses in your area as well as restaurants that are trending around you. Google also added a new "Your Match" score to display the likelihood of you liking a new restaurant based on your historical ratings.

Have trouble choosing a restaurant when you go out in a group? A long-press on any restaurant will add it to a new short list, and you can then share that list with friends. They can add other options, and the group can then choose a restaurant from the group list.

These new features will roll out to Maps this summer.

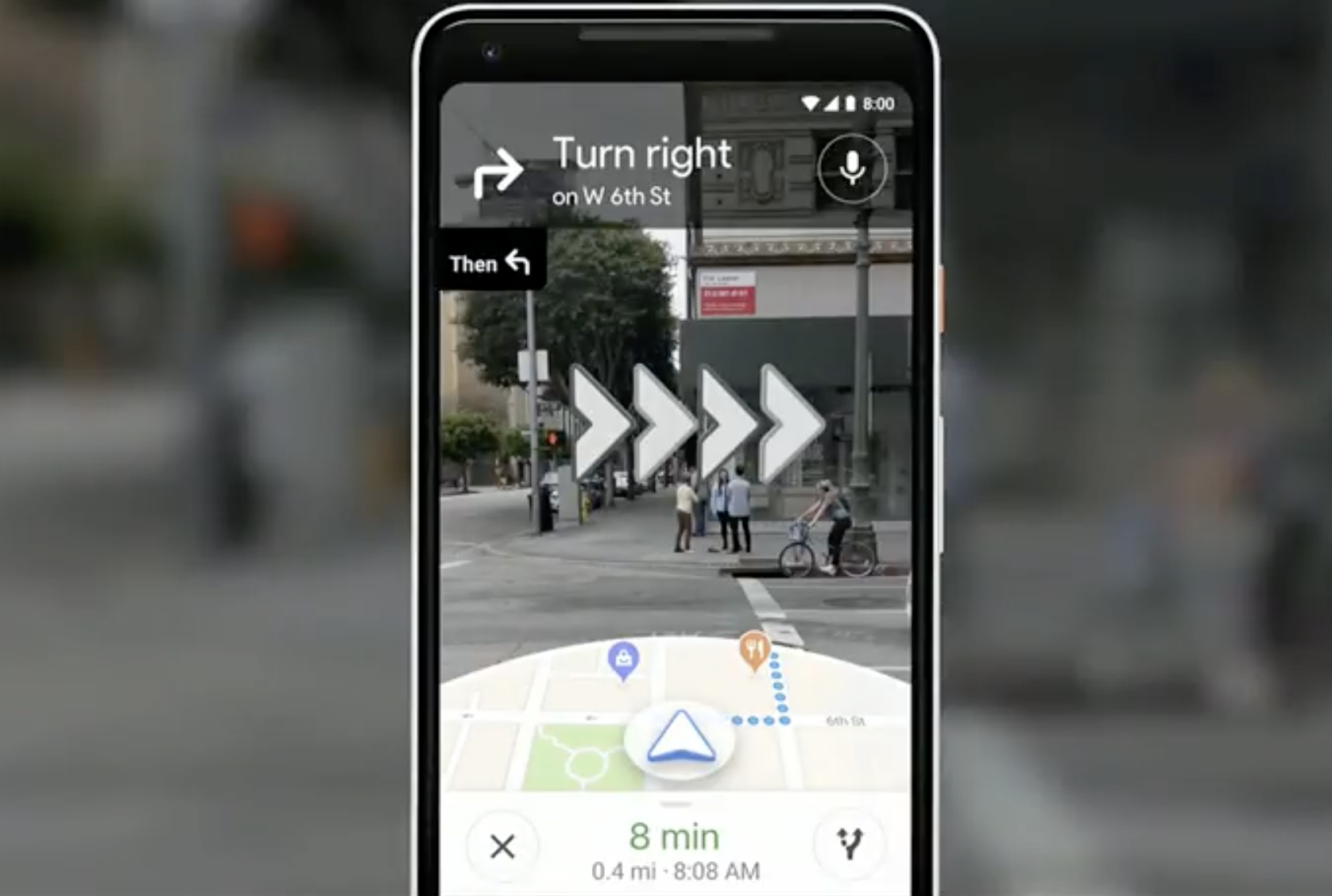

Computer Vision

A bit further down the road, Google is working on a fascinating new feature that combines computer vision courtesy of the camera with Google Maps Street View to create an AR experience in Google Maps.

Google Lens is also coming to additional devices in the coming weeks, and there are new features coming as well.

Lens can now understand words, and you can copy and paste words on a sign or a piece of paper to the phone's clipboard. You can also get context — for example, Google Lens can see a dish on a menu and tell you the ingredients.

A new shopping feature lets you point your camera at an item to get prices and reviews. And finally, Google Lens now works in real time to constantly scan items in the camera frame to give information and, soon, to overlay live results on items in your camera's view.

BONUS: Waymo self-driving taxi service

Waymo is the only company that currently has a fleet of fully self-driving cars with no driver needed in the driver's seat. On Tuesday, Waymo announced a new self-driving taxi service that will soon launch in Phoenix, Arizona. Customers will be able to hail autonomous cars with no one in the driver's seat, and use those self-driving cars to travel to any local destination. The service will launch before the end of 2018.