Google Gemma 3 Is A New Open-Source AI That Can Run On A Single GPU

One of the reasons DeepSeek went viral a few weeks ago is the Chinese AI firm's decision to release its sophisticated model under an open-source license. This means anyone can download the entire model and use it as they see fit, without touching the DeepSeek mobile and desktop apps. Open-source AI can also help developers create new apps and tools without having to develop the underlying AI model themselves.

DeepSeek isn't the only company making high-end open-source AI models. Meta did it with Llama, and OpenAI plans to make some of its AI models open-source in the future. Then there's Google, which just released Gemma 3, its next-gen open-source AI that's built on the same tech as Gemini.

Gemma 3 comes about a year after Google released the previous version, and you can download it right now, whether you're a developer looking to create new AI apps or an AI fan interested in running AI models locally. One of the highlights of Gemma 3 is the efficiency gains. Google says the AI model can run on a single GPU or TPU, which is a massive advantage over rivals.

In a blog post, the company boasts that the Gemma family has already topped 100 million downloads, with a "vibrant community" having created more than 60,000 Gemma variants. Google even set up a Gemmaverse to explore models from the community.

All these existing Gemma users will be thrilled to hear about the Gemma 3 upgrades. The new model is built on the same tech that powers Google's proprietary Gemini 2.0 models, which are available right now to consumers on the web and mobile phones.

Thanks to Google's optimizations, Gemma 3 will also run directly on phones, laptops, and workstations. But optimizing Gemma 3 to require fewer resources than rivals doesn't mean it'll be less capable.

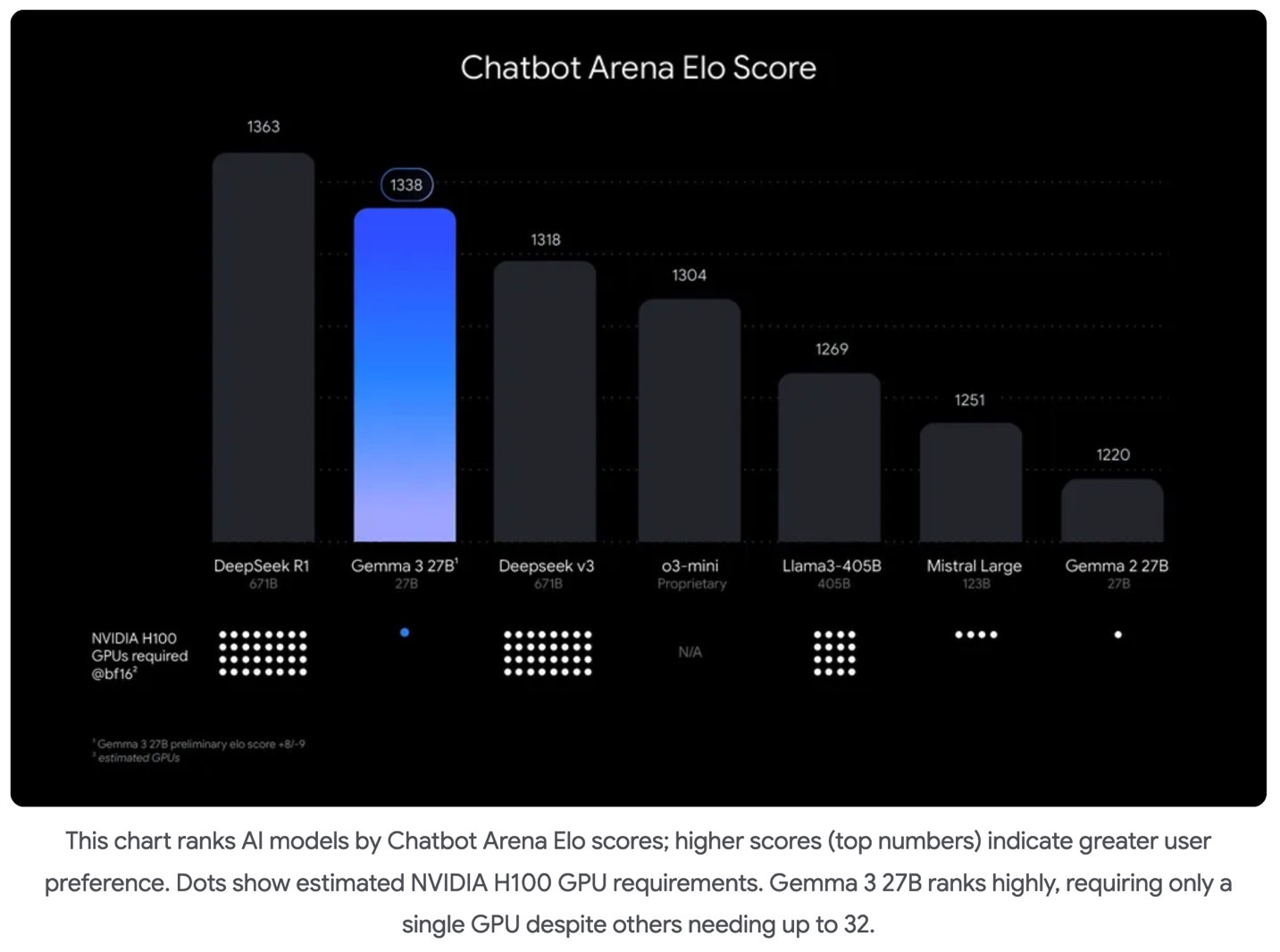

Google says Gemma 3 offers "state-of-the-art performance for its size, outperforming Llama-405B, DeepSeek-V3, and o3-mini in preliminary human preference evaluations on LMArena's leaderboard."

Google also compared Gemma 3 with other AI models, including DeepSeek versions, OpenAI's o3-mini, Llama, and Mistral Large. The comparison shows that Gemma 3 27B offers the best performance-to-resource ratio, as seen above.

Gemma 3 is available in four sizes: 1B, 4B, 12B, and 27B. It offers a context window of 128K tokens, an upgrade from Gemma 2's 8K window.
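
To get a rough feel for why model size matters for the single-GPU claim, here is a back-of-envelope sketch that estimates how much memory the weights alone would need at each size. It assumes the nominal parameter counts (1B, 4B, 12B, 27B) and a few common storage precisions. Those precisions and the helper names are illustrative assumptions, not figures from Google's announcement, and real usage is higher once the context cache and activations are included.

```python
# Back-of-envelope estimate of weight memory for each Gemma 3 size.
# Assumption: parameter counts are taken at their nominal values (1B, 4B, ...);
# real checkpoints differ slightly, and the KV cache and activations add more.

NOMINAL_PARAMS = {"1B": 1e9, "4B": 4e9, "12B": 12e9, "27B": 27e9}

# Bytes per parameter for a few common storage precisions (illustrative choice).
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Return the memory needed for the weights alone, in GB (10^9 bytes)."""
    return params * bytes_per_param / 1e9


def estimate_table() -> dict:
    """Map each model size to its estimated weight memory per precision."""
    return {
        size: {
            precision: weight_memory_gb(count, width)
            for precision, width in BYTES_PER_PARAM.items()
        }
        for size, count in NOMINAL_PARAMS.items()
    }


if __name__ == "__main__":
    for size, row in estimate_table().items():
        cells = ", ".join(f"{prec}: {gb:.1f} GB" for prec, gb in row.items())
        print(f"Gemma 3 {size} -> {cells}")
```

Under these assumptions, the 27B model needs about 54 GB for its weights at 16-bit precision and about 13.5 GB at 4-bit precision, which is why quantization matters so much for running it on a single consumer GPU.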

More important is the multimodal support coming to Gemma 3-based apps. The AI can process text, images, and short videos, which is a big upgrade over Gemma 2.

The new model also supports more languages. Out of the box, it supports 35 languages but has pretrained support for over 140 languages.

Finally, Gemma 3 has built-in safety features to prevent misuse and abuse. Google also introduced ShieldGemma 2, a new 4B image safety checker built on Gemma 3:

> ShieldGemma 2 provides a ready-made solution for image safety, outputting safety labels across three safety categories: dangerous content, sexually explicit, and violence. Developers can further customize ShieldGemma for their safety needs and users. ShieldGemma 2 is open and built to give flexibility and control, leveraging the performance and efficiency of the Gemma 3 architecture to promote responsible AI development.

Interested users can try Gemma 3 directly in the browser via Google AI Studio. Gemma 3 downloads are also available from Hugging Face, Ollama, and Kaggle. You'll find all the links in Google's blog post.