Google Gemini 1.5 Is So Smart, It Taught Itself A Rare Language From A Grammar Manual

I was wondering why Google came out with the big Gemini rebrand and Gemini Advanced subscription last week. The announcements seemingly came out of the blue. To be fair, this is the norm with AI product launches and upgrades these days. I said at the time they felt like the kind of announcements Google would make at I/O 2024. But Google didn't want to wait three more months for the big Gemini rollout.

A week later, I have my answer. Google just unveiled Gemini 1.5, its next-gen large language model, which arrives just a few months after the initial Gemini reveal. And Gemini 1.5 already sounds like a mind-blowing upgrade that will put tremendous pressure on OpenAI and ChatGPT.

What is Gemini 1.5?

Gemini 1.5 Pro, the free version that most people will get, is already as good as Gemini 1.0 Ultra, according to Google. The latter is the high-end Gemini model that costs $20 per month via the Gemini Advanced subscription (offered through Google One).

Gemini 1.5 will support a context window of up to 1 million tokens. That is large enough to hold massive documents and even long videos while you ask the chatbot about them. The model can also learn from context. Google says that Gemini 1.5 can teach itself a rare language after reading a grammar book the same way a person would learn that language, only much faster.

I'll also say that Google's Gemini demos have improved dramatically from a few months ago. Back then, Google made us think Gemini was a product ahead of its time by implying that the AI could see what you see and respond in real time.

The new demos, which you'll see in this post, show exactly what interacting with Gemini would look and feel like. Google also notes when a video has been sped up, so you can tell how it compares with real-life conditions.

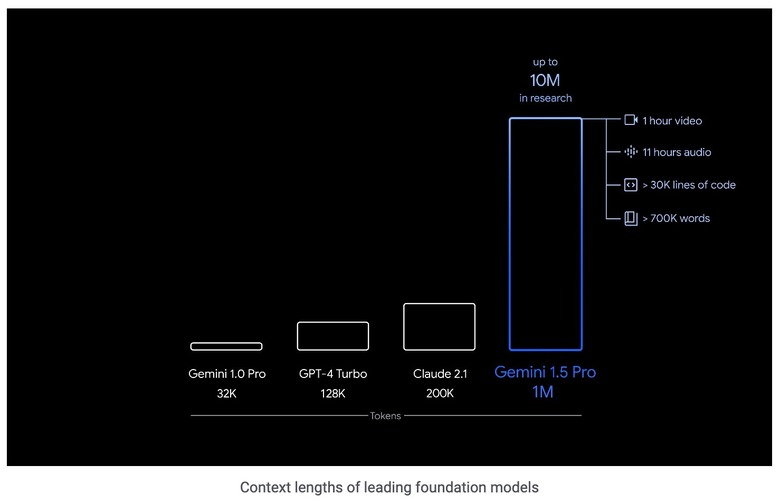

The biggest upgrade for Gemini 1.5 Pro is the support for 1 million tokens, of course. As you can see above, Google says that's enough for AI to process over 700,000 words, over 30,000 lines of code, 11 hours of audio, or 1 hour of video content.

Google says it went up to 10 million tokens while researching Gemini. Such an upgrade will only come much later down the road, if ever.

New demos

When the free Gemini 1.5 Pro version launches, it'll only support 128,000 tokens, or about an eighth of the 1 million-token maximum. The paid versions will go up to 1 million. Meanwhile, developers and enterprise customers will get access to the best version (1 million tokens) while Gemini 1.5 Pro is in testing.
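To get a rough feel for what the smaller window holds, here is a back-of-the-envelope sketch in Python. It assumes capacity scales linearly with window size, which Google has not claimed. The inputs are the "over X" figures Google quoted for 1 million tokens, and `estimate_capacity` is a name made up for this illustration. Real token counts vary a lot with the content.

```python
# Figures Google quoted for a 1,000,000-token window (all approximate floors).
FULL_WINDOW_TOKENS = 1_000_000
CAPACITY_AT_FULL_WINDOW = {
    "words": 700_000,
    "lines_of_code": 30_000,
    "audio_hours": 11,
    "video_hours": 1,
}


def estimate_capacity(window_tokens: int) -> dict[str, float]:
    """Scale the quoted capacities to another window size.

    Assumption: capacity grows linearly with the number of tokens.
    """
    return {
        kind: amount * window_tokens / FULL_WINDOW_TOKENS
        for kind, amount in CAPACITY_AT_FULL_WINDOW.items()
    }


if __name__ == "__main__":
    # What the 128,000-token window might hold under the linear assumption.
    for kind, amount in estimate_capacity(128_000).items():
        print(f"{kind}: about {amount:,.1f}")
```

Under that assumption, the 128,000-token window would fit on the order of 90,000 words or a bit under an hour and a half of audio.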

Gemini 1.5 is also more efficient, as it features a new Mixture-of-Experts (MoE) architecture. MoE means that Gemini will only use the relevant parts of its LLM to answer a query rather than the entire model. This should lead to faster performance for the end user. But it's also a big upgrade for reducing the computation required to process the AI requests.
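For readers curious about what "only using parts of the model" means, here is a toy sketch of generic top-k MoE routing in plain Python. Google has not published how Gemini 1.5 routes queries, so everything here is an assumption for illustration: `ToyMoELayer`, the random weights, the choice of two active experts, and the "experts" themselves, which are just per-dimension scalings rather than real neural networks.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class ToyMoELayer:
    """Hypothetical MoE layer: a router picks top_k experts per input."""

    def __init__(self, num_experts, dim, top_k=2, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # One router weight vector per expert, used to score the input.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Each toy expert is a per-dimension scaling of the input.
        self.experts = [[rng.gauss(0, 1) for _ in range(dim)]
                        for _ in range(num_experts)]

    def forward(self, x):
        # Score every expert, but only run the top_k best-scoring ones.
        scores = [sum(w * xi for w, xi in zip(row, x)) for row in self.router]
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        chosen = ranked[: self.top_k]
        gates = softmax([scores[i] for i in chosen])

        out = [0.0] * len(x)
        for gate, idx in zip(gates, chosen):
            expert_out = [s * xi for s, xi in zip(self.experts[idx], x)]
            out = [o + gate * e for o, e in zip(out, expert_out)]
        return out, chosen


if __name__ == "__main__":
    layer = ToyMoELayer(num_experts=8, dim=4, top_k=2)
    output, used = layer.forward([1.0, 0.5, -0.3, 2.0])
    print(f"ran experts {used} out of 8")
```

The saving comes from the loop at the end: with eight experts and `top_k=2`, only a quarter of the expert computation runs for any given input.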

Google also offered a few examples of what Gemini 1.5 Pro can do. In one test, Google uploaded the 402-page transcripts from Apollo 11's mission to the moon and asked the model to find funny exchanges in them. Gemini 1.5 Pro complied.

In a different test, Google uploaded a 44-minute silent Buster Keaton movie to Gemini 1.5 Pro. The AI was then asked to find the scene where someone removes a note from someone else's pocket and reproduce the text on it.

It did.

In another example, Google testers uploaded a hand-drawn sketch of a scene and asked Gemini to find that scene in the movie. It did that as well, as you can see below.

The Apollo 11 and Buster Keaton tests used over 325,000 tokens and almost 700,000 tokens, respectively. In a third example, Google imported 100,000 lines of code, or over 815,000 tokens, and asked the AI to help with the animation-related parts of it. Gemini 1.5 Pro offered examples for learning about character animation, identified code that powers certain animations, and helped write code to control playback in the animation.

The testers also gave the AI an image of mountainous terrain from the code, asking Gemini to help change it and make it flatter. Asked to tweak specific code, Gemini AI delivered the changes.

When can you use Gemini 1.5 Pro?

Google doesn't have a video showing Gemini 1.5 Pro teaching itself a rare language from a grammar book. But Gemini 1.5 Pro can do that thanks to its "in-context learning" skills. That means it can learn a new skill from the information you put in a long prompt without fine-tuning. Here's Google's example:

When given a grammar manual for Kalamang, a language with fewer than 200 speakers worldwide, the model learns to translate English to Kalamang at a similar level to a person learning from the same content.
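In practice, in-context learning just means the reference material travels inside the prompt itself. Here is a minimal Python sketch of assembling such a prompt and checking it against a context window. It does not call any Gemini API. The function names, the prompt layout, and the rough words-to-tokens ratio (taken from Google's "over 700,000 words per 1 million tokens" figure) are all assumptions for illustration, and a real tokenizer would produce different counts.

```python
import math

# Assumption: about 700,000 words fit in 1,000,000 tokens.
WORDS_PER_MILLION_TOKENS = 700_000


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on a whitespace word count."""
    words = len(text.split())
    return math.ceil(words * 1_000_000 / WORDS_PER_MILLION_TOKENS)


def build_in_context_prompt(reference_text: str, task: str,
                            max_tokens: int = 1_000_000) -> str:
    """Put the reference material and the task into one long prompt.

    Raises ValueError if the estimate exceeds the context window.
    """
    prompt = (
        "Use only the reference material below to complete the task.\n\n"
        "=== REFERENCE MATERIAL ===\n"
        f"{reference_text}\n\n"
        "=== TASK ===\n"
        f"{task}\n"
    )
    needed = estimate_tokens(prompt)
    if needed > max_tokens:
        raise ValueError(
            f"prompt needs about {needed} tokens, window is {max_tokens}"
        )
    return prompt


if __name__ == "__main__":
    # Placeholder strings; a real run would load the full grammar manual.
    manual = "<full text of the grammar manual goes here>"
    request = "Translate this English sentence: <sentence goes here>"
    print(build_in_context_prompt(manual, request))
```

No model weights change in this flow, which is the difference from fine-tuning: the model has to work out the new skill from whatever sits in the prompt.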

Developers and enterprises can start testing Gemini 1.5 Pro right now via the AI Studio and Vertex AI products. The public rollout for Gemini 1.5 Pro (128,000 tokens) will follow "soon." Google will also announce pricing tiers for those users who want even more context, all the way up to 1 million.

OpenAI just announced a big memory upgrade for ChatGPT, but it feels minor compared with Gemini 1.5. I can't wait to see how OpenAI responds to Google's Gemini move.