Google Debuts 2 New AI Features Rolling Out Now For Android

Nearly every major technology company is participating in Global Accessibility Awareness Day (GAAD) by making its products and services more accessible, and Google is no exception. This Thursday, Google announced two new AI-powered improvements for Android that expand upon previously released features for the mobile OS.

TalkBack is more helpful than ever

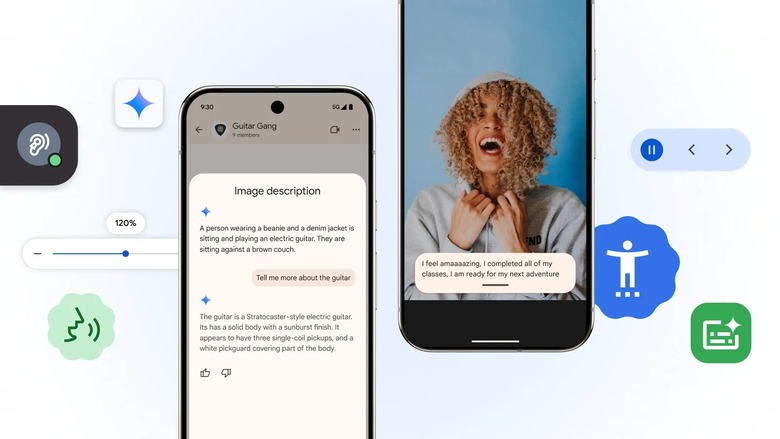

First up is an update to Android's screen reader, TalkBack, which gained Gemini capabilities last year. With Gemini, TalkBack can give blind and low-vision Android users more precise and detailed descriptions of images found online that lack alt text.

Now, TalkBack's Gemini integration lets users ask questions about the images they encounter. For example, if your friend sends you a picture of a car, you can ask TalkBack follow-up questions about its make and model. TalkBack can also answer questions about everything on your device's screen at once.

Expressive Captions are more vivid

Another AI-powered feature Google introduced in 2024 is Expressive Captions, which shows users not only what is being said but also how it's being communicated. This includes fully capitalized words, identification of vocal sounds like sighs, gasps, and grunts, and descriptions of ambient noises such as cheering or birds chirping.

In its newest update, Google added a duration feature to Expressive Captions, which shows when a speaker draws out a word and so gives more context about how it was said. When a baseball player hits a long home run, you might see a caption that says, "That's waaaaaay out of here," and when a soccer player scores, you'll see the announcer's shout captioned as "Gooooooooal!" Additionally, Google says Expressive Captions will include more sound labels, such as whistling and throat clearing.

The latest version of the Expressive Captions feature is rolling out now in English in the US, UK, Canada, and Australia for devices running Android 15 and above.