Gemma 3n Is Google's Powerful Open-Source AI That Can Run On Phones

Google announced several exciting Gemini AI innovations at I/O 2025, significantly improving Gemini's abilities across the board and bringing the AI to more of its apps. But Gemini is a proprietary AI model that you can use only with a Google account. The AI Mode in Google Search might be an exception, but it doesn't offer full access to what Gemini can do.

Gemini also requires an internet connection most of the time. The AI handles data processing in the cloud for most tasks, especially complex ones. The exception is Gemini Nano, a model designed to handle tasks on-device on smartphones.

If you're looking for Gemini tech in an open model or prefer on-device AI, check out Gemma 3n. This Google AI model was announced at I/O 2025. It's built on the same tech as Gemini Nano and is designed specifically to run on-device.

Google said in a blog post that it engineered a new "cutting-edge architecture" for the next generation of on-device AI in partnership with Qualcomm, MediaTek, and Samsung. The architecture was optimized for "lightning-fast, multimodal AI, enabling truly personal and private experiences directly on your device."

Gemma 3n is the first open model built on this new architecture, and developers can start using it right away. The word "developers" is the key detail here: you need to know what you're doing to use Gemma 3n. It's not an app you run on an iPhone or Android phone like Gemini. You'll have to install it on your computer or use it to develop your own apps that deliver on-device AI.

The partnerships mentioned above suggest that plenty of third parties will be interested in using Gemini-like AI tech via Gemma 3n to offer on-device AI experiences on phones, tablets, and laptops.

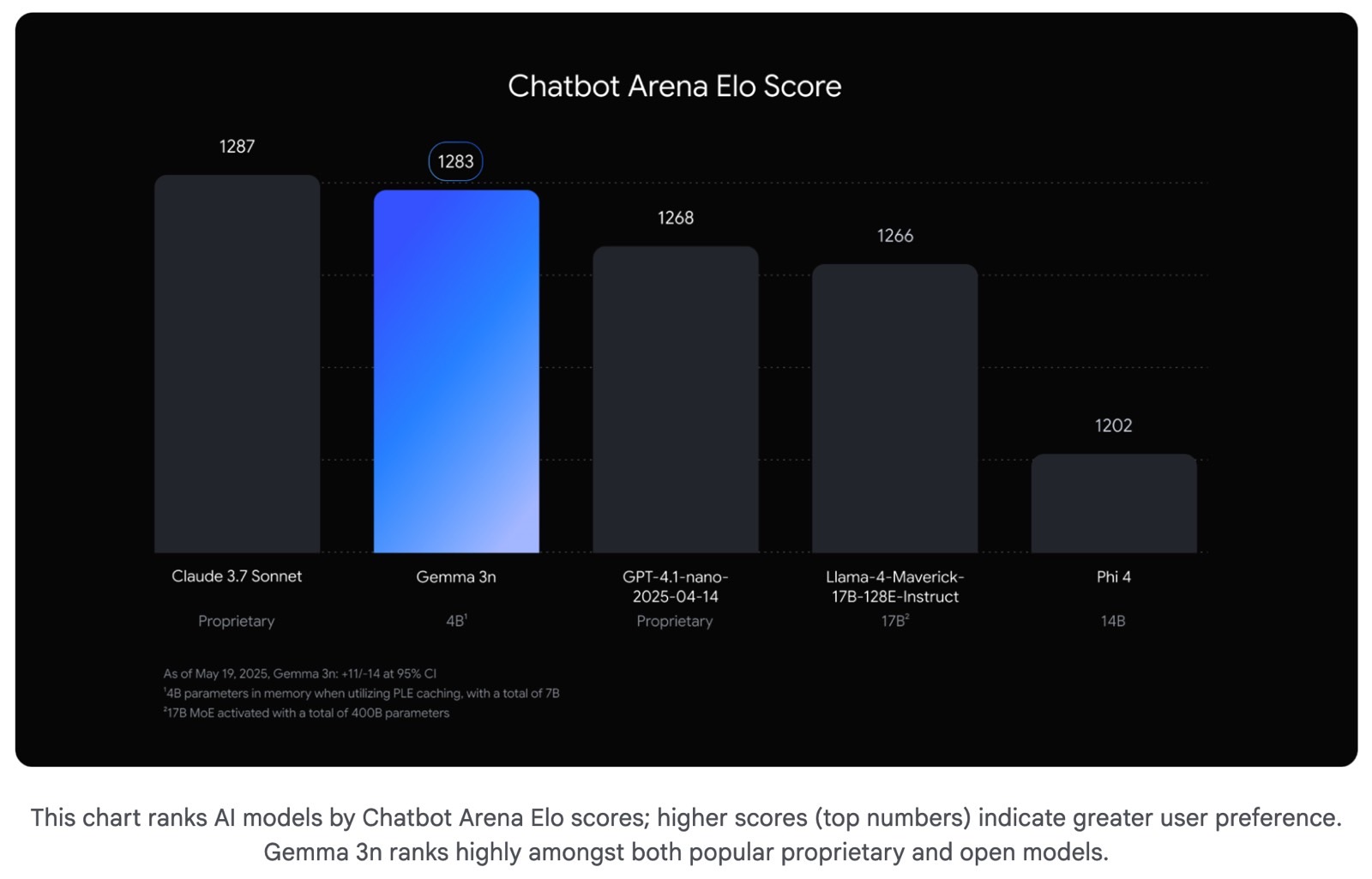

Google also says Gemma 3n is fast and very efficient, able to operate with just 2GB or 3GB of RAM. It also holds up well against other proprietary and open models on response quality: its Chatbot Arena Elo score, which reflects how users rate a model's answers rather than how quickly it produces them, places it alongside Anthropic's Claude 3.7 Sonnet.

So, what can Gemma 3n do? As Google's demo shows, it's a multimodal model like Gemini. That means it can handle text and voice inputs and understand images, whether on the screen or through live video from the camera.

Gemma 3n can recognize written text, perform translations, and answer complex questions, including solving math problems in real time.

Google's Gemma 3n experience resembles what Gemini and Gemini Live offer on mobile devices. The difference is that Gemma 3n is optimized to run on-device and is available for integration into apps or operating systems, rather than being a standalone app from Google.

For example, some Android vendors might use Gemma 3n to offer custom on-device features that don't depend on Google. Developers might want to add advanced AI support to their apps, and open models like Gemma 3n could be a great fit.

Then there's the wave of AI smart glasses expected to hit stores in the coming years. Some will come from Google and its partners and run Gemini. OpenAI is also working on its own ChatGPT hardware, and it's possible the company is developing glasses too. Other vendors might prefer an open model to deliver high-end AI features, and Gemma 3n could be their choice.

Put simply, models like Gemma 3n could be key to the future of personal, on-device AI experiences, even if they don't get as much attention as the proprietary AIs they're built on.